#a1111

Text

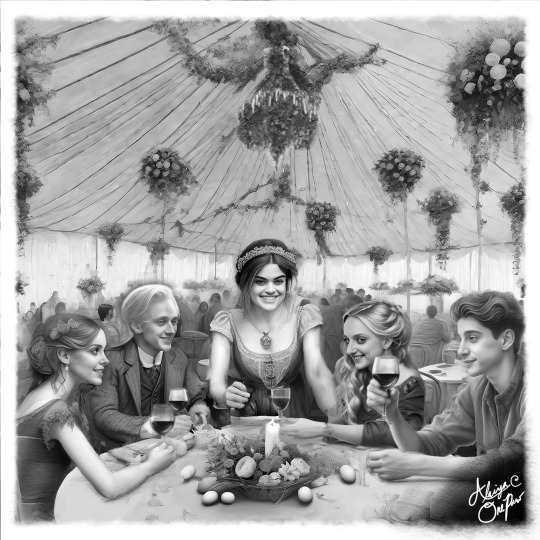

Chapter 17, The Muddy Princess, by Colubrina

Expand for Earlier Chapters!

The Muddy Princess, Chapter 01

The Muddy Princess, Chapter 02

The Muddy Princess, Chapter 03

The Muddy Princess, Chapter 04

The Muddy Princess, Chapter 05

The Muddy Princess, Chapter 06

The Muddy Princess, Chapter 07

The Muddy Princess, Chapter 08

The Muddy Princess, Chapter 09

The Muddy Princess, Chapter 10

The Muddy Princess, Chapter 11

The Muddy Princess, Chapter 12

The Muddy Princess, Chapter 13

The Muddy Princess, Chapter 14

The Muddy Princess, Chapter 15

The Muddy Princess, Chapter 16

#fanfic#podfic#dramione#draco x hermione#the muddy princess#by colubrina#Pureblood!Hermione#After Hogwarts#hermione granger#Draco Malfoy#fluffy romance fic#hp fanfiction#artists on tumblr#artists on deviantart#audiobook#hp fanfic#hp fanart#hp fandom#ai tools#sketchbook pro#sdxl 1.0#a1111

14 notes


Photo

#a1111#generative ai#ai#model#beauty#beautiful#fashion#aesthetic#stable diffusion#ai generated#artificial intelligence#sd#hotsforhotties#2023#portrait

22 notes


Text

/ stable diffusion / a1111 / beige / 2024

3 notes


Text

#roadmap#bugarron#hairy gay#aesthetic#uic#bmwedit#bwwm wmbw#gayman#fivenightsatfreddyssecuritybreach#Aguada#sugardaddy#Hawaii#SanAntonioTX#ChicagoIL60641#A1303091#DOB:522#522#A522#A444#A555#A333#A222#A1200#A1010#A1111#A1222

0 notes

Text

I've used GitHub more.

In the last few weeks than I have in the previous decade. I've had an account since 2012, and occasionally I've posted comments and queries about bugs in software I use, but that's about it.

But in the last week or so, I've downloaded Stable Diffusion/A1111, I've been learning Python, and I've also been using Godot, and the plugins are all stored on GitHub.

I feel like there is a step change occurring for me in the way I think about and approach computing.

1 note


Text

Audio Bliss

Follow me:

https://www.instagram.com/superkleen.ai

3 notes


Text

More test outputs. I struggled to get the best picture quality but the overall composition is good enough that I'll share these results.

I'm finally trying out ComfyUI because SDXL works with it, and it was trivial to set up the upscaling workflow I wish I could implement with the A1111 webui's hires fix, namely using different samplers for the upscaling steps. The nodes are intuitive enough for me; they remind me of some modular audio tools I've played with in the past, but I can understand if others struggle with the concept. It seems to run quicker, but I miss some of the advanced prompt tricks that are built into A1111.

Also, a couple more pregnant photo LoRAs have popped up and I've merged them all to get a decent combo. The merge seems to naturally do bigger bellies with fewer flaws, though the results still need retouching more often than not. It would be nice to get started with block weighted merging, since I can see how it would help to get the most out of the models and LoRAs I have at hand, but I simply don't have the time.

Backgrounds are still usually nonsensical and idk if I'll ever bother to address that; style references actually seem to make backgrounds worse if anything. SDXL does seem better for backgrounds, and multi-model compositions might improve the overall quality but take more time to set up.

Thanks for enjoying the pics! Please also follow me on Instagram 📸, Patreon 🙏, and DeviantArt 🎨.

#ai art#stable diffusion#pregnancy art#pregnant art#pregnant beauty#fake photo#maternity art#nude art#pregnant nude#maternity nude

1K notes


Note

I noticed that you use stable diffusion, and I'm trying to make similar models to use as drawing references. I've been trying to look up guides for how to use AI without the stable diffusion inappropriate content flag popping up, and I'm struggling to find discord servers that offer the same thing, especially with male body art. Do you generate your models with any sort of specific alternate program, or are you able to just run the open source code independently so the restrictions can be removed (I'm not code-savvy but I could look up some guides)?

Thanks so much!

To bypass the online filters, run Stable Diffusion locally. It's ugly and you have to dip into the programming and file structure side of things every once in a while, but it's the best way to run Stable Diffusion if you have an NVIDIA-equipped PC to handle it.

This link handles most of the heavy lifting and gets you up and running with the least amount of effort:

GitHub - EmpireMediaScience/A1111-Web-UI-Installer: Complete installer for Automatic1111's infamous Stable Diffusion WebUI

14 notes


Text

So. Close.

But Draco's apartment should not have a lamp!!

Also, side note, I totally want that couch, on the right. **Grabs couch and tosses it into a "Calvin and Hobbes" style Real-o-matic Machine**

If only!

#fanfic#podfic#dramione#draco x hermione#hermione granger#draco malfoy#Lady of the Lake by Colubrina#Colubrina#ai art#ai artwork#ai tools#A1111#Sketchbook Pro#sdxl 1.0

15 notes


Photo

#a1111#generative ai#ai#model#beauty#beautiful#fashion#aesthetic#stable diffusion#ai generated#artificial intelligence#sd#hotsforhotties#2023#portrait

6 notes


Text

/ stable diffusion / a1111 / terracotta / 2024

2 notes


Photo

“Controlnet and A1111 Devs Discussing New Inpaint Method Like Adobe Generative Fill”

Give us a follow on Twitter: @StableDiffusion

H/t Marisa-uiuc-03

#digital art#stability ai#stable diffusion#ai art#StableDiffusion#aiart#ai artwork#art#deep dream#generative#generated artwork#ai generated#digital painting#ai generated art#deep learning#dream#unreality

3 notes


Text

please if someone here knows more about this than me do correct me but i've been trying to figure this out and so far i think there's three main "layers" with different datasets used to create a stable diffusion picture (through comfyUI, a1111, web portals, etc.) which i will explain to the best of my ability now

(the goal of this post is to shed some light into the whole process to better understand the conversations around this topic, and also to try to figure out what is the best option for someone looking to protect their art from being used against their will in these models and for someone looking to make ethical AI images with their own pictures)

on the most superficial layer we have LoRAs which are made with only a few pictures and are applied on top of a base checkpoint model, usually made to replicate one particular artist's style or to capture a character's likeness to be able to re-pose them through prompts or other means. to make these i've seen people use anywhere from 5 to 1000 images which can be sourced from your own work, someone else's work, public domain, etc. when people say that they "trained an AI with their own art", i think they normally mean that they made a LoRA model with their own art that still needs a base checkpoint model to work, and i'm not sure about this but i think you can combine as many of these as you want (kind of like sprinkles on a cake perhaps??). the files are relatively small (a few MB in most cases) and you can train one fairly quickly through existing programs that are freely available
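a rough sketch of why LoRA files come out so small, assuming the usual low-rank setup where each adapted weight matrix of size d x k gets two thin matrices (d x r and r x k) stored next to it instead of a full copy. the layer size and rank below are made-up illustrative numbers, not taken from any particular model:

```python
def full_param_count(d: int, k: int) -> int:
    """Number of values in one full d x k weight matrix."""
    return d * k


def lora_param_count(d: int, k: int, r: int) -> int:
    """Number of values in a rank-r LoRA update: a d x r matrix plus an r x k matrix."""
    return r * (d + k)


# Hypothetical layer size and rank, chosen only for illustration.
d, k, r = 768, 768, 8
full = full_param_count(d, k)
lora = lora_param_count(d, k, r)
print(f"full matrix: {full} values")
print(f"rank-{r} LoRA: {lora} values ({full // lora}x fewer)")
```

the same idea repeats across every layer the LoRA touches, which is why the file stays a small add-on that still needs the base checkpoint to do anything.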

the base checkpoint model i'm talking about is the middle layer and from what i've seen i think it's trained similarly to a LoRA but it's meant to encompass a broader subject (for example, "anime style" rather than "utena style"). i'm confused on how many pictures minimum you need to train one of these but people still do it on their fairly standard laptops or through rental of cloud space and it can still be done only with pictures you own the rights to and/or are in the public domain. many of these models have nothing to do with visual arts in the first place (for example, you can take many pics of trees and flowers around your neighbourhood, curate a proper dataset and use it to train a model that you can use to generate mix-and-matches of those... etc.). on top of these base models you can create and apply LoRAs and other similar smaller/"tweaking" models. these tend to weigh 2-8 GB but i wouldn't be surprised if there's some much larger ones that i haven't encountered

i used to think that if you did this step without using stolen images you could have a "clean" model without anything shady going on (personally i wanted to make one for victorian flower fairy illustrations for personal use because i thought it would be fun...) but then i learned that these models are still trained on a base stable diffusion model (SD1.5 or SD2.0 etc) which is where it gets impossible to "make your own" as a layman since these large base models are trained on immense, and i mean immense, data sets containing (in SD's case) millions upon millions of images of all kinds, from naruto screencaps to pictures of puppies to photographs of models in ads in magazines to people's instagram selfies and facebook family photos, etc. these data sets are so large that if i understood correctly they're impossible to curate manually or for anyone to know exactly what has gone into them. also, these cost hundreds of thousands of dollars in computing power to train, but they only need to be trained once, after which each use only consumes the same amount of power as any other activity that you may do on your computer with your graphics card such as playing a videogame

i'd recommend reading this article [cw: mentions of child abuse] for better understanding, but this is basically where "tumblr is selling our data" comes into play. checkpoints and LoRAs created to imitate one specific style are normally created from hand-picked datasets by one person or a small group of people and that can still be done even with the best data protection measures as long as another user can right click and save an image from a post. meanwhile, base models like stable diffusion or midjourney are trained on billions of pictures of all kinds so for example even if they scraped my entire portfolio it would be a drop in the ocean in the final result since the whole of facebook is also in there

re: disruptive protection technologies such as glaze or nightshade, personally i don't think it's worth it to use them to protect your art from automated scraping / massive data selling because those images are going to be such a tiny small part of the final dataset that it feels like putting in hours of extra effort + losing a lot of social media presence by deleting your old posts with the original images for no tangible benefits to you, but if what i said above about LoRAs and checkpoints is correct then it would be useful to prevent regular humans from manually creating specific tweaker models that replicate your specific style or characters. however from what i've read i think this can be somewhat bypassed by just taking a full-res screenshot of the image instead of right click and saving it (i say somewhat because a glazed picture is inherently disrupted and thus "uglier" than the original picture... but i think just blurring the image slightly before posting it or just posting a lower res version would give you the same level of protection ??? without needing to go through all the trouble of using glaze and nightshade which is pretty time-consuming)

again my only goal here is in understanding how all of this works so i really appreciate any corrections that can help me better understand. i want to test my theories on glaze/nightshade by taking some disney images and creating 4 different style LoRAs with them (one with the original images, one with glaze + nightshade, one with low res versions of the images, and one with blurred high res versions of the images) to see if there is any noticeable difference in the results !

1 note


Note

I am Tolu from Nigeria, Africa.

I'd like to create images like this but focused on Africans. I am new to AI Image generation and I am a photographer. So I understand imagery.

My questions are these:

What's the best way for me to start creating images like this?

What tools do I need?

What prompts will I probably need?

I will appreciate your response

Hi Tolu, I can't walk you through the process but there's a ton of youtube videos and website tutorials that can show you the step-by-step of creating photorealistic AI imagery.

If you have a PC that meets the requirements, you'll want to install an implementation of Stable Diffusion, download a suitable photorealism model, and a realistic pregnancy LoRA. There's an app called Stability Matrix that will let you do all of the above: it will install Stable Diffusion for you and download resources directly from civitai.com.

I mostly use Stable Diffusion Webui by Automatic1111, but I also use comfyUI, and the other SD packages are also very useful depending on what workflow you prefer.

If you don't have a PC that meets the requirements, you'll have to mess around with remote systems to get similar results to what I get, and there are no longer free options for doing that. If you're willing to pay for a Google Colab Pro subscription and can work out how to use a Jupyter notebook, then you can run the Stable Diffusion WebUI as a Colab, which integrates with Google Drive to manage the files. You'll have to download models and LoRAs separately, then upload them to a Google Drive folder and add the Drive folder to the Colab notebook. You don't have to be a coder, but it helps to have some basic understanding of Python and command lines. There are other remote services that will let you run Stable Diffusion on good GPUs, but I haven't tried them out; I only used Colab back when it was free to try. Colab still has a free tier for machine learning students and hobbyists, but it no longer allows that tier to be used for Stable Diffusion WebUI implementations.

Simpler prompts work better; complex prompts are hard to get balanced and will often omit some details entirely. I like to use nationalities to guide the creation of ethnicities, and smaller localities can also be influential. Prompts like "black" or "asian" aren't just patronizing AF, they're also ineffective. The SD checkpoints and LoRAs often have strong biases towards creating European and Asian characters - sometimes I've tried to create African characters and the outputs have still been of European appearance. Negative prompting will help resolve this issue when it occurs. All the world's women are beautiful with a bump.

If you use A1111 you'll need to include the LoRA in <> brackets in the prompt to load it and control its weight (e.g. <lora:pregmixv2:0.9>) to get the desired results. You can add it automatically from the extra networks tab.
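As a small sketch of the tag format: A1111 reads LoRA tags written as `<lora:name:weight>`, where the name is the LoRA's filename without its extension. The helper names below (`lora_tag`, `build_prompt`) are hypothetical, made up for illustration and not part of any A1111 API; they only assemble the text you would paste into the prompt box.

```python
def lora_tag(name: str, weight: float = 1.0) -> str:
    """Format one LoRA tag in the <lora:name:weight> form that A1111 reads."""
    return f"<lora:{name}:{weight:g}>"


def build_prompt(base: str, loras: list[tuple[str, float]]) -> str:
    """Append a LoRA tag to the base prompt for each (name, weight) pair."""
    tags = " ".join(lora_tag(name, weight) for name, weight in loras)
    return f"{base} {tags}".strip()


# The LoRA name comes from the example above; the base prompt is invented.
print(build_prompt("portrait photo, soft window light", [("pregmixv2", 0.9)]))
```

Lower weights tone the LoRA's effect down, so it is common to try a few values before settling on one.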

Aside from prompts, the other parameters can be tricky to get balanced for the best results. You can find good photorealistic pics on CivitAI and copy the settings used to generate the image to get similar results.
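As a rough sketch of what "copying the settings" involves: A1111 typically records generation data as a block of text with the prompt first, an optional "Negative prompt:" line, and then one comma-separated settings line. The parser below assumes that simple layout. The sample text is invented for illustration, and real generation data can contain quoted values with commas that this sketch does not handle.

```python
def parse_infotext(text: str) -> dict:
    """Split A1111-style generation text into prompt, negative prompt, and settings."""
    lines = text.strip().split("\n")
    settings_line, body = lines[-1], lines[:-1]

    prompt_lines, negative_lines = [], []
    in_negative = False
    for line in body:
        if line.startswith("Negative prompt:"):
            in_negative = True
            line = line[len("Negative prompt:"):]
        (negative_lines if in_negative else prompt_lines).append(line.strip())

    # Settings look like "Steps: 30, Sampler: Euler a, CFG scale: 7, ..."
    settings = {}
    for item in settings_line.split(", "):
        key, sep, value = item.partition(": ")
        if sep:
            settings[key] = value

    return {
        "prompt": "\n".join(prompt_lines),
        "negative_prompt": "\n".join(negative_lines),
        "settings": settings,
    }


# Invented sample in the assumed layout.
EXAMPLE = """portrait photo, soft window light
Negative prompt: blurry, lowres
Steps: 30, Sampler: DPM++ 2M Karras, CFG scale: 7, Seed: 12345, Size: 512x768"""

info = parse_infotext(EXAMPLE)
print(info["settings"])
```

Seeing the settings as separate fields makes it easier to change one value at a time (steps, sampler, CFG scale) and compare the results.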

Generated images are rarely perfect. The vast majority of what I post has been retouched, and for every image I post there are five that were too broken to fix. Sometimes it's absolute body horror!

Check out my other posts with the #infopost tag for more infoposts. I'm not in a position to create good tutorials but I hope I've put enough together to point people in the right direction. AI art is terrible in a lot of ways but the more people use it the more people will understand it. Stable Diffusion is relatively outdated and as you experiment you will find out the limitations of the technology; Bing and Midjourney work so much better and are more consistent. But they won't let you do nsfw content, so Stable Diffusion is what we have to work with.

Finally - as a photographer you will find that Stable Diffusion offers a plethora of powerful image editing and filtering options, especially in conjunction with img2img/inpainting and ControlNet, but that's something you can learn about later. I hope you find the AI software intuitive. There is a bit of a learning curve, but familiarity with digital images goes a long way. Good luck!

12 notes


Note

These are amazing. Would you mind sharing your method? A1111? I have been trying to make some nice bulges for ages and have never seen any as good as this. Would really appreciate knowing how you managed it.

Yes, Automatic1111. I made a LoRA for this on Civitai.

https://civitai.com/models/102191/bulgerk-dickprint?modelVersionId=109392

Use this, and look at the prompt examples on the images. Cheers

1 note
