#camera sensor

Video

youtube

SincereFirst CMOS IMX334 Imaging Sensor 8MP Camera Module

SincereFirst Embedded camera module, SincereFirst AioT Vision, SincereFirst Sincere is First!

#youtube#Embedded camera module#sincerefirst#aiot#artificial intelligence#camera module#camera sensor

0 notes

Text

instagram

#Google Pixel phones have been using the #Sony IMX363 #camerasensor since 2017. The new Pixel 7a is expected to use the IMX787, with a 1/1.3" sensor compared to the 1/2.55" sensor of the #Pixel6a and #Pixel4a (in these sizes a smaller denominator means a larger sensor, which is better). #Apple has been using #Sony #imagesensors in #Phones for 10+ years.

0 notes

Text

Mastering Pixel Binning

Introduction to Pixel Binning

Pixel binning is a technique used in digital cameras and smartphones to improve image quality, particularly in low-light conditions. It involves combining information from multiple adjacent pixels on a camera’s sensor to create one “super pixel.” This process reduces the overall image resolution but provides several advantages, such as improved low-light performance,…
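The core idea of combining adjacent pixels into one "super pixel" can be sketched in a few lines. This is a minimal illustration in Python, assuming a single-channel (monochrome) image stored as a list of rows whose height and width are multiples of the binning factor; `bin_pixels` is a hypothetical helper name. Real sensors bin on raw data (on Quad Bayer sensors, same-color pixels are grouped) or in hardware, so this shows the arithmetic rather than any particular camera's pipeline.

```python
from typing import List

Image = List[List[float]]


def bin_pixels(image: Image, factor: int = 2, average: bool = False) -> Image:
    """Combine each factor x factor block of pixels into one "super pixel".

    Assumes a single-channel image whose height and width are multiples
    of `factor`. Summing mimics collecting more light per output pixel;
    averaging keeps values in the original range.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    if factor < 1 or height % factor or width % factor:
        raise ValueError("image dimensions must be multiples of factor")

    binned: Image = []
    for row in range(0, height, factor):
        out_row = []
        for col in range(0, width, factor):
            # Add up every pixel in the current factor x factor block.
            block_sum = sum(
                image[r][c]
                for r in range(row, row + factor)
                for c in range(col, col + factor)
            )
            out_row.append(block_sum / (factor * factor) if average else block_sum)
        binned.append(out_row)
    return binned


if __name__ == "__main__":
    frame = [
        [1, 2, 3, 4],
        [5, 6, 7, 8],
        [9, 10, 11, 12],
        [13, 14, 15, 16],
    ]
    print(bin_pixels(frame))                # [[14, 22], [46, 54]]
    print(bin_pixels(frame, average=True))  # [[3.5, 5.5], [11.5, 13.5]]
```

Here a 4x4 grid becomes 2x2, so resolution drops by a factor of four while each output pixel carries the signal of four inputs. Assuming the noise in each pixel is uncorrelated, summing N pixels multiplies the signal by N but the noise only by about √N, so 2x2 binning roughly doubles the signal-to-noise ratio, which is where the low-light advantage comes from.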

#Bayer pattern#camera sensor#camera technology#computational photography#digital noise#dynamic range#high-resolution#image processing#image quality#image resolution#ISO#low-light performance#megapixels#photo editing#Photography#pixel binning#Quad Bayer#RAW format#sensor size#smartphone cameras

1 note

Text

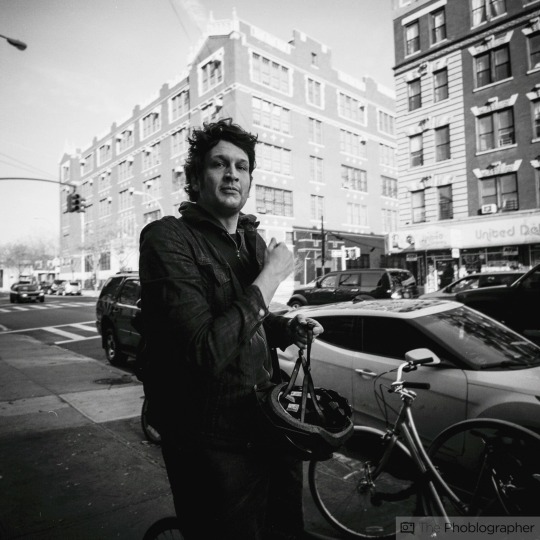

How Square Format Sensors Could Be Great for Photographers

We dream of squares.

Years ago, we reported on the possibility of Canon making a medium format camera with a square sensor. Today, I highly doubt Canon would do such a thing considering how conservative they are. While all the camera brands are busy running after content creators, they’re forgetting photographers in some ways. They’re also not realizing there needs to be cameras for photographers and cameras for…

#camera sensor#Cameras#creating#fujifilm#hasselblad#nikon#square format camera#square format sensors

0 notes

Text

The Differences in Crop Sensor Cameras vs Full Frame

Looking through which camera to buy next is like walking down the cereal aisle at the grocery store. Brands, functions, lenses, features: it can all start to feel a little overwhelming! One of the major choices is sensor size: a crop sensor (APS-C) vs. full frame. But this doesn't have to be a sticking point. Neither of these sensors is the one-size-fits-all answer to your photography needs,…

#aps-c#camera sensor#crop sensor#learn photography online#learn photography online for free#micro 4/3

0 notes

Text

I fell in love in rainy October, completely.

Waiting for Halloween. 11 days left!

#photography#digital camera#autumn#nikon#ccd sensor#aesthetic#fall#forest#day#rainy#rain#october#foggy#small town#fog

377 notes

Text

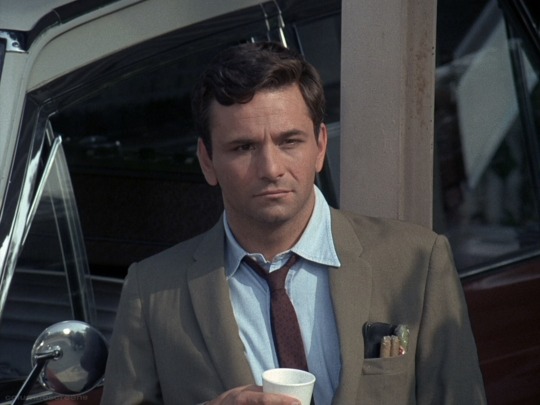

#columbo#season 1#prescription murder#i know we all love wrinkled raincoat Bumbo but. there's so much to be said for Cool Guy Slicked Back Hair Chambray Button-Down Columbo#standing around brooding freshly out of his 30s and if you gave him a pair of aviators he'd burn a hole into the camera sensor

126 notes

Text

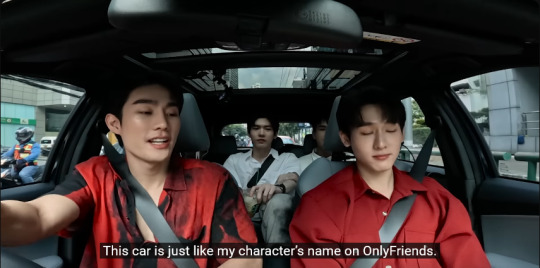

in yesterday's forcebook show real, they had to promote the car they were driving, and force brought up only friends to highlight that this sweet sweet ride is top-tier!

and they say more things like it fits all lifestyles! you can buy it for any purpose!

and the whole time they have neo in the backseat??? and his contribution to this conversation is:

and all i could think was: force my buddy my friend you have the opportunity to say the FUNNIEST THING EVER

#forcebook#only friends the series#like obviously toyota would NEVER okay this as promo material#but just picture force cheerfully turning to the camera like#that's right neo and the backseat on this baby is so spacious and comfy#even two grown-ass men can comfortably get it on back there#no more back pain or leg cramps!#and the suspension is so smooth you can use someone's lap as your personal bouncy castle and the vehicle WON'T be a-rocking#and then book chimes in#and the smart sensors in the car let you know if you've been wiretapped!#the yaris cross was made to accommodate all your needs!

23 notes

Text

Naked Ghost Caught On Motion Sensor Camera

What looks to be the ghost of a naked woman walking across a garden has been captured on a motion sensor camera.

#Naked Ghost Caught On Motion Sensor Camera#paranormal#ghost photography#paranormal photography#spirit photography#creepy#ghosts#ghost#ghost photos#spirit

23 notes

Text

Based on a convo

Imagine if he can project media through those eyes of his

#twisted wonderland#twst#ortho shroud#twst ortho#i personally think his eyes are probably resin or glass or camera lenses or maybe even gems rich boy idia inlaid#but wouldn't it be funny if he can rick roll the other kids during a staring contest#i don't think he sees through his eyes#he probably has sensors and cameras elsewhere that help him see

40 notes

Text

I was having a bad day and decided to watch the newest episode of Percy Jackson. And lemme say. Watching that kid drive the cab and utterly fucking it up was hilarious. Percy braking the car every 2 feet, the sense of fear before going down the ramp, him looking lovingly at Annabeth before ramming the car into the nearest barrier. I hadn’t laughed that long in weeks.

Sincerely, someone who has also scratched every single side of their car LMAO

#listen I am trying my best#god bless my new car that has sensors and a camera lol#percy jackson#pjo#my stuff

8 notes

Text

my phone gets drunk at least once a month (gets drenched in beer by accident and stops working for 1 to 2 days) which is incredibly inconvenient but also nice because i can disconnect from social media for a whole ass day!

#except one time a drop of water fell on it and it stopped working as i was leaving work#and i was going to meet my friend in the big plaza in the city#and i literally left work and couldn't call her or do anything at all with my phone#she called me and i couldn't take the call#and so i just walked around the plaza looking for her#and then miraculously found her somehow and like i couldn't even uber home or anything#now i'm using my old phone#but idk if he will be fine this time... pray for me#like it's been 1 day in rice and nothing#just so you understand the phone is fine#simply the screen touch doesn't work#i think it's a safety measure from samsung cuz the old one caught water in the camera and it didn't open#until after it fully dried the phone wouldn't open the camera#so i guess it has some kind of sensor to know if it's wet inside and not let you use it so it doesn't break for real bc of heating and stuff#but idk i hope he becomes ok bc i don't have money for a new phone nor a screen repair

7 notes

Text

As much as I complain about the (at its core, justified) backlash to corporate AI going in counterproductive-at-best directions here, I would like to take a moment to talk about what I would like to see done about corporations swarming all over AI as a moneymaking fad.

First, I must address the true root of the problem: as we all know, a lot of the types of people known derogatorily as techbros jumped ship from cryptocurrency and NFTs to AI after crypto crashed...multiple times. Why AI? Why was it the next big thing?

Well, why was crypto the previous? Because it was novel and unregulated. Why did it crash? Because of the threat of regulation.

It is worth mentioning, at this point, that the threat of regulation ended up doing massive harm to people who used crypto for reasons OTHER than speculative investment scams. This included a lot of people who engaged in business that is illegal but lifesaving (e.g., gray-market pharmaceuticals), and people who engaged in business that is technically legal but de facto illegal due to payment processors hating it (e.g., porn and other online sex work) - i.e., a lot of extremely vulnerable people. Stick a pin in this, it will be important.

AI is a novel and largely unregulated field. This makes it EXTREMELY appealing to venture capitalists and speculative investors - they can fuck around and do basically whatever they want with little to no oversight, and jump ship the moment someone says "all right, this is ridiculous you CANNOT just keep pretending it's a rare fluke when your beefed-up autocomplete chat bot makes up garbage information, and the next clown who decides that a probability function trained on the ableism and pop-psych poisoned broader internet is a viable substitute for trained mental health counselors is losing any licenses they have and/or getting fined into bankruptcy." They've always been like this - when technology is too new for us to even know how we SHOULD regulate it, the greedy capitalists flock to it, hoping to cash out quick before an ounce of responsibility catches up to them, doubly so when it's in a broader field that's already notoriously underregulated, such as the tech sector in the US right now.

That tendency is bad for literally everyone else in the process.

Remember what I said about how the crypto crackdown hurt a lot of very vulnerable people? Well, developers aren't lying when they say that AI can have extremely valuable, pro-human applications, from AAC (augmentative and alternative communication, which it's already serving as; this is, imo, THE most valuable function of ChatGPT), to health and safety - while we absolutely should not entrust things like reading medical images and safety inspections to AI without oversight, with oversight it's already helping us find cancers faster, because while computers are fallible, so are humans, and we're fallible in different ways. When AI is developed with human-focused applications in mind over profit-focused ones, it can very easily become another slice of Swiss cheese to add to one of our most useful safety models.

It can also be used for automation...for better, and for worse. Of course, CEOs and investors are currently making a hard push for "worse".

That's why I find it very important to come up with a comprehensive plan to regulate AI and tech in general against false advertisement/scams and outright endangerment, without cutting too deep into the potential it has for being genuinely good.

My proposals are as follows:

PRI. VA. CY. LAW. PRIVACY LAW. PRIVACY LAW. As it stands now, US law regarding online privacy and data security - which is extremely pertinent because most of the most unscrupulous developers are US-based - is at best a vicious free-for-all that operates entirely on manufactured "consent", and at worst actively hostile to everyone but corporate interests. We need to change that ASAP. As it stands, robots.txt instructions (and other similar things, such as Do Not Track flags) are legally...a polite request that developers are 100% allowed to just ignore if they feel like it. The entire mainstream internet is spyware. This needs to change. We need to impose penalties for bypassing others' privacy preferences and bring the US up to speed with the EU when it comes to privacy and data security. This will solve the problem that many are counterproductively trying to solve by tightening copyright law with more side benefits and none of the drawbacks.

Health and safety audits and false advertising crackdowns. Penalties must be imposed on entities who knowingly use AI in inappropriate and unsafe applications, and on AI developers who misrepresent the utility of their tools or downplay their potential for inaccuracy. Companies using AI in products with obvious potential hazards, from robotics to counseling, should be subject to safety audits to make absolutely sure they're not cutting corners or understating risks. Developers who are found to be understating the limitations of their software or cutting safety features should be subject to fines and loss of licenses.

Robust union protections, automation taxes, and beefing up unemployment/layoff protection. Where automation can and cannot be used in the professional sector should never be a matter of law beyond the safety aspect, but automation rollouts do always come with drawbacks - both in the form of layoffs, and in the form of complicating the workflow in the name of saving a buck. The government cannot make sweeping judgments about how this will work, because it's literally impossible for them to account for every possibility, but they CAN back unions who can. Workers know their workflow best, and thus need the power to say, for instance, "no, I need to be able to communicate with whoever does this step, we will not abide by it being automated without oversight or only overseen by someone we can't communicate with adequately, that pushes the rest of our jobs WAY beyond our pay grade" or "no, we're already operating on a skeleton crew, we will accept this tool ONLY if there are no layoffs or pay cuts; it should be about getting our workload to a SUSTAINABLE level, not overworking even fewer of us". Automation taxes can also both serve as an incentive for bosses to take more time considering what they do and do not want to automate, and contribute to unemployment/layoff protection (and eventually UBI). This will ensure that workers will be protected, even when they're not in fields as visible and publicly appreciated as arts.
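On the privacy-law proposal's point that robots.txt is only a polite request: the file is just text a site publishes, and it is the crawler itself that decides whether to check it. A minimal sketch using Python's standard-library `urllib.robotparser`; the rules, the `ExampleAIBot` user agent, the `crawler_may_fetch` helper, and the URLs are all hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt a site might publish: block one AI crawler
# entirely, and keep everyone else out of /private/.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def crawler_may_fetch(user_agent: str, url: str) -> bool:
    """Return whether the published robots.txt permits this fetch.

    The check runs on the crawler's side. Nothing on the server enforces
    the answer: a scraper that never calls this, or ignores a False
    result, can still request the page.
    """
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    print(crawler_may_fetch("ExampleAIBot", "https://example.com/blog/post"))
    print(crawler_may_fetch("SomeBrowser", "https://example.com/blog/post"))
    print(crawler_may_fetch("SomeBrowser", "https://example.com/private/notes"))
```

A `False` here only means "the site asked you not to"; honoring it is voluntary, which is the gap that enforceable privacy law would have to close.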

In conclusion, the AI situation is a complicated one that needs nuance, and it needs to be approached and regulated in a pro-human, pro-privacy way.

#not art#needless to say this plan ALSO involves linking fines in general to income/revenue#so that small time developers aren't bankrupted by an honest mistake of forgetting one (1) line of code#and megacorporations can't just go ''ohhh teehee oopsie-woopsie we made a fucky-wucky'' and pay 10% of what they earned by breaking the law#after their mining bot goes on a rampage and kills 7 human miners and blows up a national monument and poisons a reservoir serving 50k#because they took out a critical sensor and went ''its fiiiiiine the ai can operate on just cameras~''

26 notes

Text

there's the question of how the polar lords' army does the un-fire thing, but I want to know how the Wulfenbach forces are detecting the epicenter of that effect. I thought IR cameras and similar get very confused in any notable precipitation

#girl genius#page react#sneaky gate is sneaky and we like it that way#I've not had enough access to IR cameras to say with 100% certainty but I really thought they were a bit nonspecific through fog#if anyone knows how that turns out feel free to let me know because it really is the easy explanation for their sensors knowing the center

8 notes

Text

April Morning.

#chillcoffeeash#ccd sensor#nikon#photography#April#spring#early morning#morning#forest#nature#cottagecore#clouds#aesthetic#digital camera

22 notes
