#ai is a problem but it's one built on the disregard of people

Hi

It's been brought to my attention that there are people out there who are sadly plagiarising my work again.

1. This is not okay.

To clarify, while I'm very happy for people to take inspiration from my stories (in the same way you might any book you read from a bookshop), I don't want my work used or reposted without credit.

I'm not going to go on at length about why it is wrong to plagiarise someone else's writing. I don't think my tumblr post is magically going to change anyone's mind, especially as, if you've followed me long enough, you know we've been to this rodeo before.

So.

2. How to tell when writing is plagiarised

It can be very difficult to tell when something is plagiarised, especially if we have never come across the original work before and have no reason to recognise it.

I don't think it's realistic for everyone to vet everything they come across online for plagiarism, but it's also something I don't see talked about a lot for fiction.

These questions to ask yourself are not foolproof and not applicable to everything. But I think they can be a start.

If the writer has posted more than one story, is there a similarity across them? While writing style can change across an author's different pieces, there is still usually going to be a similar feel across stories if they came from the same person. Writers have voices and quirks and little things that are specific to them. If every piece feels wildly different then it might be coming from different places. This is probably going to come down to gut reaction and instinct in the first instance. But that's okay. Because that gut reaction is just there to make you think twice and maybe investigate more thoroughly.

How much are they posting? Can people churn an extraordinary amount of words out? Yes, sometimes. But...as a general ballpark, no. Writing takes time and effort. If someone is coming out with enormous amounts of writing every day or week or month or whatever, then this can be a hint to look a little closer.

Do you ever see hints of their writing process? Can the writer talk about their characters or what they want out of the story or anything like that? Do they ever post a story organically in response to a request or whatever? Not all writers know in-depth everything about their story or characters or plot, but the main point here is that the finished product is the tip of the iceberg. If someone is a writer then there is more going on beneath the surface of the posted stories.

I hope this helps!

#writing#plagiarism#ai is a problem but it's one built on the disregard of people#plagiarizing#writing community

256 notes

Midjourney Is Full Of Shit

Last December, some guys from the IEEE newsletter, IEEE Spectrum, whined about a "plagiarism problem" in generative AI. No shit, guys, what did you expect?

But, let's get specific for a moment: they noticed that Midjourney generated very specific images from very general keywords like "dune movie 2021 trailer screencap" or "the matrix, 1999, screenshot from a movie". You'd expect that the outcome would be some kind of random clusterfuck making no sense. See for yourself:

In most of the examples depicted, Midjourney takes the general composition of an existing image, which is interesting and troubling in its own right, but you can see that for example Thanos or Ellie were assembled from some other data. But the shot from Dune is too good. It's like you asked not Midjourney, but Google Images to pull it up.

Of course, when IEEE Spectrum started asking Midjourney uncomfortable questions, they got huffy and banned the researchers from the service. Great going, you dumb fucks, you're just proving yourself guilty here. But anyway, I tried the exact same set of keywords for the Matrix one, minus Midjourney-specific commands, in Stable Diffusion (setting aspect ratio given in the MJ prompt as well). I tried four or five different data models to be sure, including LAION's useless base models for SD 1.5. I got... things like this.

It's certainly curious, for lack of a better word. It was generated by one of the newer SDXL models, which apparently has several concepts related to The Matrix defined, like the color palette, digital patterns, bald people and leather coats. But none of the attempts, using any of the models, got anywhere near the quality of the Midjourney output or its similarity to the real thing. I got male characters with Neo hair but no similarity to Keanu Reeves whatsoever. I got weird blends of Neo and Trinity. I got multiplied low-detail Neo figures on a black and green digital pattern background. I got high-resolution fucky hands from a user-built SDXL model, a scenario that should be highly unlikely. It's as if the data models were confused by the lack of a solid description of even the basics. So how does Midjourney avoid it?

IEEE Spectrum was more focused on crying over the obvious fact that the data models for all the fucking image generators out there were originally put together in a quick and dirty way that flagrantly disregarded intellectual property laws, and weren't cleared and sanitized for public use. But what I want to know is the technical side: how the fuck does Midjourney pull an actual high-resolution screenshot from its database and keep iterating on it without any deviation until it produces an exact copy? This should be impossible with only a few generic keywords, even treated as a group, as I noticed Midjourney doing a few months ago. As you can see, Stable Diffusion is tripping absolute motherfucking balls in such a scenario, most probably due to having a lot of images described with those keywords and trying to fit elements of them into the output image.

But you can pull up Stable Diffusion's code and research papers any time you wish. Midjourney violently refuses to reveal the inner workings of their algorithm, probably less because it's so state-of-the-art that it recreates existing images without distortions and more because recreating existing images exactly is some extra function coded outside of the main algorithm and aimed at reeling in more schmucks and their dollars. Otherwise, there wouldn't be that much of a quality jump between movie screenshots and original concepts that just fall apart into a pile of blorpy bits. Even more coherent images like the grocery store aisle still bear minor but noticeable imperfections caused by having the input images pounded into the mathemagical fairy dust of random noise. But the faces of the Dora Milaje in the Infinity War screenshot recreations don't devolve into rounded, fractal blorps despite their low resolution. Tubes from nasal plugs in the Dune shot run like they should and don't get tangled with the hairlines and stitches on the hood.

This has to be some kind of scam, some trick to serve the customers the hot images they want and not the predictable train wrecks. And the reason is fairly transparent: money. Rig the game, turn people into unwitting shills, fleece the schmucks as they run to you with their money hoping that they'll always get something as good as the handful of rigged results.

1 note

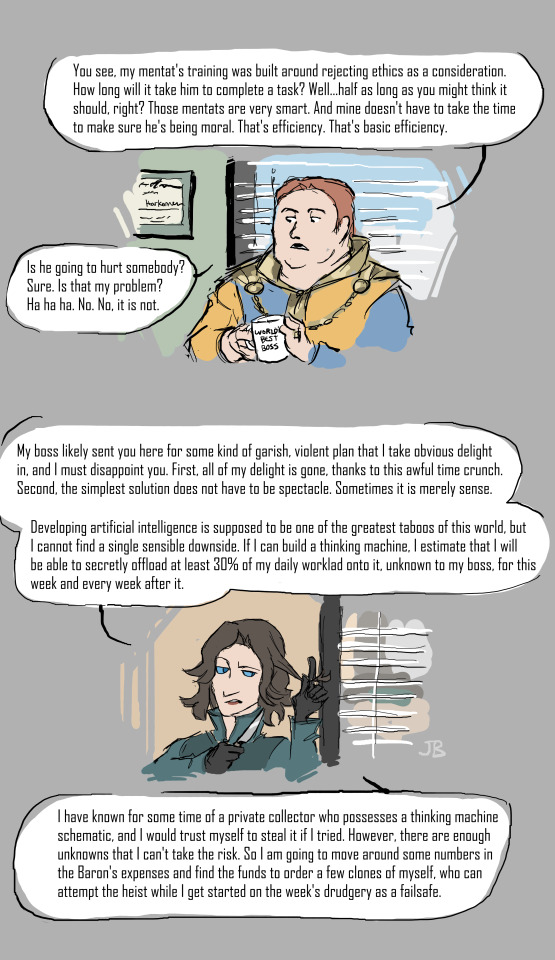

Continued from: [xxx]

To be continued...?

I remember reading somewhere that training AI to do tasks can be a challenge because sometimes the AI will find a way to optimize exactly what you technically told it to optimize, but in a way that sidesteps everything you wanted it to actually do in the process. If people trained to think like computers replaced computers, I think they’d become very good at finding ways to do less work, especially if they were trained to disregard all ethics.

Image description under the cut.

Image description: A two-panel comic featuring Piter de Vries and Baron Vladimir Harkonnen from Dune.

Panel 1:

Baron (with nonchalant entitlement, holding the ‘world’s best boss’ mug from The Office and standing in front of a background that looks like Michael’s office from The Office):

You see, my mentat’s training was built around rejecting ethics as a consideration. How long will it take him to complete a task? Well... half as long as you might think it should, right? Those mentats are very smart. And mine doesn’t have to take the time to make sure he’s being moral. That’s efficiency. That’s basic efficiency.

Is he going to hurt somebody? Sure. Is that my problem? Ha ha ha. No. No, it is not.

Panel 2:

Piter (derisively and annoyed, about to cut off a lock of his hair with a knife, standing in front of a background that looks like the wall of the conference room from The Office):

My boss likely sent you here for some kind of garish, violent plan that I take obvious delight in, and I must disappoint you. First, all of my delight is gone, thanks to this awful time crunch. Second, the simplest solution does not have to be spectacle. Sometimes it is merely sense.

Developing artificial intelligence is supposed to be one of the greatest taboos of this world, but I cannot find a single sensible downside. If I can build a thinking machine, I estimate that I will be able to secretly offload at least 30% of my daily workload onto it, unknown to my boss, for this week and every week after it.

I have known for some time of a private collector who possesses a thinking machine schematic, and I would trust myself to steal it if I tried. However, there are enough unknowns that I can't take the risk. So I am going to move around some numbers in the Baron's expenses and find the funds to order a few clones of myself, who can attempt the heist while I get started on the week's drudgery as a failsafe.

#finally; the exposition#some kind of story with this premise is growing in my mind like a stalactite

13 notes

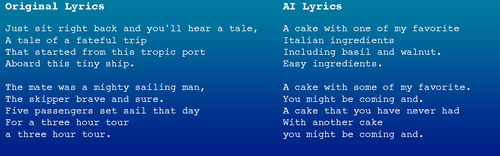

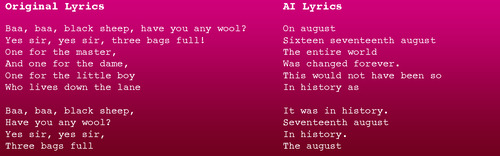

Rhyming is hard

Although many people have generated AI poetry and lyrics, you’ll notice that they generally don’t rhyme. That’s because generating a decent rhyme is super hard.

You can get an inkling of this if you prompt the neural net GPT-2 with rhymes to complete. It will fail almost every time.

In part, this is because English spelling is so nonuniform. How would a model trained on just written English know that it can rhyme throw with dough but not with brow? Not to mention stress patterns and syllable counts.
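To make the spelling problem concrete, here is a rough sketch (not how Riedl's demo or any other system mentioned here actually works) that compares a naive "same last letters" rhyme test against one based on pronunciation. The three pronunciations are typed in by hand in ARPAbet-style notation, and the function names are made up for this illustration.

```python
# Hand-entered pronunciations in ARPAbet-style notation. This tiny table is
# an assumption for illustration; a real system would load a full
# pronouncing dictionary instead.
PRONUNCIATIONS = {
    "throw": ["TH", "R", "OW1"],
    "dough": ["D", "OW1"],
    "brow": ["B", "R", "AW1"],
}


def spelling_rhyme(a, b, n=2):
    """Naive test: treat two words as rhyming if their last n letters match."""
    return a[-n:] == b[-n:]


def rhyme_part(phones):
    """Return the phonemes from the last stressed vowel to the end of the word."""
    for i in range(len(phones) - 1, -1, -1):
        # Stressed vowels carry a trailing 1 (primary) or 2 (secondary).
        if phones[i][-1] in "12":
            return phones[i:]
    return phones


def sound_rhyme(a, b):
    """Pronunciation test: compare the stressed-vowel-onward parts of both words."""
    return rhyme_part(PRONUNCIATIONS[a]) == rhyme_part(PRONUNCIATIONS[b])


for other in ("dough", "brow"):
    print("throw /", other,
          "| spelling says:", spelling_rhyme("throw", other),
          "| sound says:", sound_rhyme("throw", other))
```

The letter-matching test gets both pairs backwards: it rejects throw/dough and accepts throw/brow. The pronunciation test gets them right, but only because someone supplied the sounds, which is exactly the information a model trained on plain written text never sees directly.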

A few people have attempted to get neural nets to rhyme, and one of them is a new online demo by Prof. Mark Riedl of Georgia Tech. Give it example lyrics to a song - for example, the first two verses to the Gilligan’s Island theme - and it’ll try to fit the number of syllables and rhyming scheme, as well as take inspiration from a short phrase you supply.

Prompt: “If I knew you were coming, I’d have baked a cake”

Tune: Gilligan’s Island theme

Ok, but this is terrible. It’s TERRIBLE. One of the problems is a complete disregard for emphasis, making this inhumanly awkward to sing. It also does a rather cheap shortcut of rhyming words with themselves.

Prompt: “The mighty pudding god will devour you.”

Tune: Gaston’s Waltz from Beauty and the Beast

Here we are not only off-topic and awkward but absolutely bonkers. It has made the rather daring move of incorporating a reference to Alusuisse, which wikipedia informs me is a defunct Swiss chemical company. In fact, looking back over the program’s output, it made this decision when looking for a rhyme for “this”, and it skipped past “bliss”, “dismiss”, and “Chris” in favor of the former aluminum manufacturer. When choosing rhymes it scores potential words according to their similarity to the prompt, and there must have been something about Alusuisse that screamed “vengeful pudding god”.

Its syllable counting also breaks in weird ways.

Prompt: “Destroy all humans”

Tune: “Baa baa black sheep”

Looking back over the logs, it did correctly count 11 syllables for “baa baa black sheep have you any wool.” But this AI is built of lots of carefully-coordinated sub-programs, each of which only does a small piece of the puzzle, and apparently the sub-program that was supposed to suggest 11-syllable lines shrugged and went “on…. august? that’s all i got”.

Prompt: I am a turnip

Tune: The wonderful thing about tiggers

This makes the world’s worst karaoke, and yes, Riedl has built a karaoke-making function for this. If you want to weird someone out, just casually sing a song with the AI lyrics instead of the real ones.

Botnik Studios also recently built a karaoke-generating algorithm (“The Weird Algorithm”) that instead of generating lines from scratch, picks them from some other source file, trying to match meter and rhyme. (for example, rewriting The Rainbow Connection with lines from X-files scripts). Here’s Jamie Brew demonstrating the system, including singing the lyrics as they pop up onscreen - if you tried to sing any of the lyrics above, you’ll know how darn impressive his singing is. Each line is independent, though, so if the song makes sense as a whole, it’s by accident.

So today’s AI can only sort of generate rhyming poetry. “Sigh. Natural language is hard,” Riedl tweeted, when he saw the Turnip hoowelp welp results. AI won’t be beating humans at rap battles anytime soon.

You can generate your own inadvisable karaoke using Riedl’s app.

Subscribers get bonus content: I generated more terrible AI lyrics than would fit in this blog post.

My book on AI is out, and, you can now get it any of these several ways! Amazon - Barnes & Noble - Indiebound - Tattered Cover - Powell’s - Boulder Bookstore

384 notes

Stark Legacy 4

Pairing: Natasha Romanoff x Carol Danvers x Wanda Maximoff x Maria Hill x Reader but Maria Hill x Reader centric for this chapter.

Summary: Four times Maria Hill finds the reader super cute but tells herself three girlfriends are enough, and the one time she doesn’t hold back.

Word Count: 4884

A/N: Well, I didn’t plan for this to go almost 5k but here we are. And this is my first time writing Maria Hill x Reader, so have mercy on me. I hope I did it justice, and that you guys have as much fun reading this one as I had writing it. Let me know what you guys think. xx

Parts: 1 | 2 | 3 | 5 | 6

***

Happy startled from where he was lounging in the living room when he heard several footsteps coming from the hallway. He was already aiming his gun at the door when Carol walked in along with Nat, Maria, and Wanda. They looked unfazed in the face of a loaded gun.

“Hey, ladies?” It sounded more like a question than a greeting. He unloaded his gun and put it back down on the centre table before walking to the bar where the girls sat. Nat was behind the counter already pouring drinks for Carol and Wanda. Maria, on the other hand, walked directly to the balcony to make a phone call to cancel the crew she had called for a while ago.

The room was tense; Happy could sense it. Before he could question what was wrong, Maria walked back in and asked, “Did you know that Stark’s built a new iron suit?”

Ah! Now Happy understood what the tense silence meant. “Is she even gonna tell us?” Carol asked after turning her stool around to face him.

“Well -”

Wanda gasped before he could even say anything else. “What do you mean she’s just out testing her suit?” Happy looked at her with a straight face. He never got used to the young witch being in his head.

“Do you mean the suit’s not finished yet?” Nat looked like she’s trying to decide whether she’s uncomfortable, worried, or pissed. “Did you know what she did?”

Before Happy could answer though, the sound of your metal boots landing on the balcony made everyone turn towards you.

“Stop terrorizing the poor man, Tasha.” You walked slowly inside the penthouse, your suit retreating back inside your body. It was a design Tony planned for the next Iron suit that he never got to incorporate. You walked back to the couch and sat facing everyone at the bar.

“Did Fury know about this?” Maria asked as politely as she could while asserting her power as Deputy Director.

“No,” you answered simply. Before she could pose any question, you continued. “I’m not S.H.I.E.L.D, nor an Avenger. I don’t need to ask anyone’s permission to do anything.”

Carol and Maria frowned a little at your blatant disregard of authority. Wanda kept quiet, knowing that you were right. They don’t have dominion over you. Still, behind the counter, Nat tried to hide her chuckle but knew she failed when everyone turned their attention to her. At that point, instead of reining in her reaction, she started giggling uncontrollably. Carol looked at her like she had just grown another head.

Maria, having a slight idea as to why Nat was laughing, ignored her and turned back towards you. “May I speak to you in private?” she asked. You’re starting to like how well-mannered the badass Deputy Director Hill is. You smiled before standing up and following her into your brand new study.

Nat watched you walk away with Maria. She has a feeling you will live up to the Stark attitude. Instead of getting a little pissed, she’s secretly happy to have Stark energy back in their lives. Sure, it was a little rough getting Tony to work along with everyone else but they made it, and she misses him every day since.

Happy sidled up to the smiling black widow, watching you and Maria speak inside the study with your door wide open. “It’s just like old times,” she whispered.

Happy chuckled, remembering how hard it was for Tony to even sign a contract with S.H.I.E.L.D at first, and how allergic he was to asking permission and being told what to do. “Yeah, just like old times.”

***

It wasn’t like old times. Maria learned that when she found you sitting on one of the benches outside HQ with a cup of coffee from Starbucks, and reading a paperback an hour earlier than you were expected. To say that she was surprised was an understatement. She expected you to be late for the meeting, the same way Tony was when they were trying to get him to sign his contract with S.H.I.E.L.D and the Avengers.

You looked up at her and immediately greeted her with a warm smile. “Good morning, Deputy Director.”

She eyed you curiously. “Good morning, you’re early.”

You smiled in a way that the artificial skin around your eyes crinkled adorably, and Maria is mesmerized. “Well -” you reached for the tumbler and handed it to her. She took it gratefully. “I never liked being late.”

It’s interesting to know that no matter how alike you and Tony are, there are still things that set you two apart. Maria is quite intrigued to find out what else makes you different. Maria gave you a small smile. “Shall we go inside?”

You meticulously put a bookmark on the page you were reading (because only demons use dog ears as a bookmark) before putting it inside your backpack.

“Lead the way, ma’am,” you said cheekily. Maria’s heart skipped a beat at that.

***

Maria mentally prepared for a long meeting with you and Fury but, in yet another surprise, the meeting only lasted for an hour. After you explained your suit, Fury wasted no time in whipping out a contract that would sign you on as a training agent with S.H.I.E.L.D for the moment. In the past, Tony made a huge fuss about being put in the lowest rank, and they both expected you to do the same, but no. You just asked Fury to hand the contract over so you could go through it. With your newly installed AI, you were able to scan the contract and understand its content within 2 minutes.

You procured a pen out of the pocket of your trousers and signed expertly on the side of each piece of paper. Fury was surprised but, of course, none of it could be seen on his always impassive face. You slid him the signed contract, and he caught it expertly.

“Very well -” He neatly put the contract inside a folder with your name labelled in front. “ - Welcome to S.H.I.E.L.D, Agent Stark.”

You grinned at the title. Maria bit the inside of her cheek to keep herself from smiling as well. Something about you is infectious it seems. “Thank you, Director.”

“Don’t give Agent Hill too much trouble,” Fury said before exiting the conference room.

“No promises, Director.” You said looking at Maria from across the table.

She rolled her eyes at you playfully. “I’m surprised you agreed to the contract easily.”

You laughed heartily, causing butterflies to erupt in Maria’s stomach, then a somber expression took over your face. You looked at the pen in your hand and turned it over to look at your brother’s name carved on it. “Growing up, Tony and I were inseparable. Even with him a few years ahead of me, everyone still managed to think we were twins because we liked the same things and thought almost the same way.”

You smiled remembering your childhood with Tony but it didn’t reach your eyes. “I know Tony’s a bit of work, and a pain in the ass when it comes to following orders but no matter how alike we were -” You looked up at Maria before continuing. “I’m not my brother, I’m not Tony.”

“I’m sorry -” She felt bad for comparing, she started to apologize but you cut her off.

“It’s okay. It’s a common mistake.”

You said it without resentment, just a fact. Even in the past, you never felt resentment over being compared to him in almost all occasions. You think it’s an honor to be even considered Tony’s equal but with him forever gone, people are bound to keep comparing and you’re not gonna live your second life living in his shadow. It’s not something he would want you to do. So you will point it out until people learn the difference between the two of you.

Maria nodded. She realized in that raw moment that regardless of your ball-jointed shell and inhumanly perfect skin, you are still fully human inside, and she resolved to do better at treating you like one.

***

One of the perks of signing the contract with S.H.I.E.L.D was that Fury didn’t revoke your privilege to stay at the Tower with Happy. In return though, you are to report daily at the headquarters and will be closely working under Agent Hill. You frankly didn’t mind; Maria has been very professional since the day she and Happy found you in Tony’s last secret lab. You know that she’s very smart, and a damn good leader. It also helps that she’s very easy on the eyes.

Yes, your soul may be housed in a robotic shell but you are still very much gay. Not that you think you have a chance with Deputy Director Hill, no. Happy has filled you in thoroughly about what you’ve missed, one of them being that the most badass women of the Avengers are actually dating Agent Hill.

Now, how on earth do you think you’d ever have a chance to board that ship? The answer is you do not. Had you been your normal human self, it wouldn’t be a problem but you are not your normal human self. And you’re loath to admit it - even to yourself - but having a fully robotic body is giving you insecurities you never had before.

So you do what a Stark would in the event that they can’t get what they want: compartmentalize. Box the heavy feelings and drop them at the bottom of the ocean. So, the next day, you were more than ready to meet Agent Hill (or her girlfriends) without feeling flustered.

“Good morning, Agent Stark.” Maria’s sudden greeting from the gym door startled you enough to cause you to punch a hole through the punching bag. Maria chuckled when she heard you curse under your breath.

You looked up at her as she casually walks inside the room, wearing her tight training gear. So much for not being ruffled by feelings, you thought to yourself. “Good morning, ma’am. I can pay for the bag,” you said sheepishly.

Maria stood a few feet away from you outside the mat. She turned around to put her gym bag down. “Don’t worry about it, we have so many of those in the stock room,” she answered before stepping away from her bag and bending down to start her stretching. She kept her back towards you, giving you full access to her tight ass, and you had to quickly avert your eyes to keep your system from overheating.

“Agent Stark, I detect a spike to your shell temperature,” Edward, your newly programmed AI, spoke through the gym speaker. You internally pleaded for the ground to open up and swallow you whole. You thought it was a good idea to cast Edward to the gym system so he could play you some music while working out. Now, you know it was stupid especially after you looked back towards Maria who’s now smirking at you.

Maria walked towards you and put a hand on your cheek. “Calm down, Agent Stark. We haven’t even started yet,” she whispered. Training daily was not a problem you said. Not going to be ruffled by gay feelings you said.

***

On days when you don’t have training, Maria always asks you to shadow her for the day while she does her job as deputy director. It’s on those days that Maria finds more reasons to like you. In your first week, she found out that you’re even better with people than your brother. People used to always gravitate towards Tony because he was a force of nature that sucked people into his orbit. You, on the other hand, are the calm after Tony’s storm, and people gravitate towards you because of your charming and dependable personality.

In the second week, she found out that while Tony liked reminding people that he was a genius billionaire, you like keeping it on the down-low. It’s common knowledge that you are as much of a genius as Tony was but Maria appreciates your humility. In that same week, she also learned that you are a caretaker.

“Good morning, Agent Hill,” you greeted way too cheerfully. Maria turned towards you and was surprised to see you extending both your hands with coffee and pastry. She cocked her perfectly sculpted eyebrows at you. You smiled at her silent question. “You’re always here early. I’m assuming you haven’t had breakfast yet. If you don’t want them, you can give them away.”

A pained look briefly passed over Maria’s face, like the thought of giving your gifts away pained her. “You’re right, I haven’t had breakfast yet. I didn’t wanna make too much fuss in the kitchen and risk waking Carol and Wanda.” She took a bite of the still-hot Bearclaw. “Hmm. This is so good. Thank you.”

You smiled as you watch her eat up. “You said, you didn’t wanna wake Carol and Wanda. Where’s Tasha?”

Maria noted that you’re the only person at S.H.I.E.L.D that calls her girlfriend Tasha. “She always wakes up early but she’s hopeless in the kitchen.” Maria smiled fondly at the thought of Natasha. Then she looked up at you with mirth in her eyes. “Don’t tell her I said that, though.”

You two started giggling together.

***

The pastry and coffee became a habit. A part of it was you being obsessed with consistency but a bigger part of it was that you care about the woman. You’ve seen her get so busy with training recruits, doing reports and paperwork, coordinating missions, and attending meetings with Fury that she forgets to eat. So, every day like clockwork, no matter what happens or no matter where she is, you find a way to get Maria something to eat.

Just like how you found a way to send her her daily fix of pastry and coffee while she was at the Avengers compound. Maria was on the balcony speaking with one of her agents when Happy waltzed in.

“Happy! My man!” Sam yelled enthusiastically when he saw the man come in with boxes of doughnuts, a smaller paper bag, and a cup of coffee. “Is that for us?”

Happy greeted everyone before putting the doughnuts on the centre table. He made sure to put the smaller paper bag and coffee on a separate table. In Sam’s excitement over the prospect of food, he failed to get the message that the other package wasn’t for sharing.

“Uhm -” Happy tried to stop him but Sam already opened the small paper bag containing Maria’s Bearclaw.

“Oooh! Bear-” He didn’t manage to finish cooing over the pastry before Maria appeared before him with her standard S.H.I.E.L.D gun in his face. He let out an embarrassing yelp.

“Put my breakfast down Wilson or I’ll put you down myself.”

Sam gulped at the seriousness in Maria’s voice. He slowly put the bag down back on the side table and promptly put his hands up in surrender. “Damn, Hill. It’s just bread.”

It was all it took before Carol, Wanda, and Nat started laughing so hard. Sam turned towards his teammates and glared. Wanda recovered first and wiped the happy tears from her eyes. “It’s not just bread, Sam. It’s from her crush,” she said a little breathlessly from the laughing fit.

Sam turned to Maria who’s already sat down and nibbling quietly on her food with a faint blush on her cheeks. “You have a crush on Happy?” He asked incredulously.

Happy threw a pillow at the back of his head. “Ow! What?”

“It’s not from me, idiot.”

“It’s from Stark,” Carol said.

Sam turned towards Maria again. “You have a crush on Y/N?”

“Like I’m the only one,” Maria fired back, making Sam turn towards the three, who were now turning bright red in their seats as well.

Sam and Bucky chuckled. “Well, hot damn.”

***

Exactly two months after starting your training with Maria, Fury decided you’re fit for more than just desk duty. No one was actually surprised at the decision. Aside from being more agile and more adaptable than any other recruit, you have also proven that you are just as smart as your brother was, which makes you as good a tactician as he was.

On the day of your first mission, Maria came to work a little later than usual and was surprised to find only her pastries sitting on her desk, and no you waiting for her. She looked around to check if you were just loitering around other tables. You have, after all, been quite popular with the other agents, what with your insanely human build and charming personality.

“Agent Colson,” she called out when she saw the man passing by.

“Good morning, Agent Hill,” he greeted cordially. She was just about to ask about you when Colson beat her to the subject. “I hope you don’t mind me borrowing Agent Stark, I’m one man short for today’s emergency mission.”

Maria was surprised but managed to give the man a tight smile. “Of course not,” she said shaking her head a little. “May I speak with Agent Stark before you leave though?”

“Of course, she’s probably out at the cockpit preparing with Daisy.”

***

Lo and behold, you were indeed in the cockpit, sitting on one of the metal crates. Your smile faltered a bit when you saw the unreadable look on Maria’s face.

“What’s with the long face, Agent Hill?” You teased lightly. Agent Johnson kicked your foot in warning before walking away to give you two a little privacy.

“Nothing.” Her reply was short and clipped like she’s holding back on saying more. “Just -”

“Just?” You cocked an eyebrow at her.

“Just remember your training out there.” She shoved her hands into the back pockets of her dark jeans. “Don’t lose your head.”

It’s not like she doesn’t trust you to complete the mission. It’s not like she doesn’t trust Agent Colson’s team to have your back. They’re some of the finest agents in the organization but it still worries her that you’re going out there without her, or without one of her girlfriends at least. She will have to talk to Fury about it, she thought.

“I didn’t think you care so much, Agent Hill.”

You just couldn’t help teasing her, especially when she looked like she was struggling over whether to admit it to herself. Before she could answer though, Agent Johnson came back to tell you it was time to take off. You nodded before jumping off the crate you were sitting on. Maria watched you leave. Before the quinjet closed, you turned back to her and winked. You caught a glimpse of her shaking her head to hide the faint blush on her cheeks.

***

The mission was fairly easy. It was just supposed to be a recon mission. Get in, get intel, get out, but like real life, not everything goes as planned. Right from the start, you were antsy; the place was way too quiet to be safe. It was even more suspicious when your team was able to get the intel you came for without meeting any hostiles or any resistance.

“It’s a trap,” you whispered where your team are huddled in front of the computer. “Let’s pretend we hadn’t caught up to their plan.”

“What are you thinking?” Agent Grant asked while he types away on the keyboard.

“They’re going to ambush us on the way out,” you answered.

Everyone looked at each other. Agent Grant pulled the USB off the computer and turned towards you. “You should take this.” He pushed the device into your hand. “Just in case.”

You frowned. “Hold on a sec.” You snatched the device and slotted it into your arm. Within a second, you pulled it out and gave it back to Agent Grant. He looked at you questioningly.

“I can’t hold on to that. They can shoot me, damage my operating system, deactivate me, and capture me.” Everyone got the message. “But I made a copy of what’s inside, just in case.”

“Okay. Let’s get this over with.” Everyone nodded. “Move out.”

***

As calculated, the ambush happened just before you could clear the building. They were waiting for you right in the open, with their annoying HYDRA uniforms and heavy artillery. No warning came before they started openly firing at your team.

You heard Agent Johnson barking orders over your comms. “Spread out, wait for my signal.”

You and Agent May ran to the right, while Agent Johnson and Grant ran to the left and took cover behind the thick trunks of the trees surrounding the vicinity. “They’re bound to run out of ammo. That’s our cue,” Agent Johnson spoke through the comms again.

Like clockwork, the gunfire stopped and the HYDRA soldiers scrambled to reload their guns. Right on cue, the four of you stepped out of the shadows and started attacking the soldiers in close combat. You could see by how uncoordinated they were that they weren’t expecting to be engaged that way. You took it as an opportunity to send powerful combination moves to immobilize as many hostiles as possible.

The fight lasted at least an hour. Everyone took a moment to catch their breath.

“Everyone okay?” Agent Johnson confirmed. The fight had visibly taken so much out of the team that they could only nod at the question.

“I think we better move out before more of these bad boys crawl out from where they came from,” you suggested. Nobody needed to be told twice. Nobody had any more energy left to fight a fresh wave of hostiles. Even you were running on your secondary battery pack.

***

A collective sigh of relief was heard once the quinjet was safely flying on autopilot. Everyone was already out of their tactical uniforms when you emerged from the pit. Agent May smiled when she saw you walk in.

“Change out of your suit and try to relax a little,” she suggested. You started retracting your Phantom suit back to your body when Agent Johnson gasped.

“Agent Stark, are you alright?” Agent Grant stood up to check on you. You were confused until you followed their line of sight.

“Oh,” was all you could say when you saw the corrugated blade lodged a little off your left ribcage.

“Oh?” Your team asked in unison.

You sat down beside Agent Johnson. “What can we do?” she asked. By the hitch in her voice, you knew she was getting frantic.

“Nothing at the moment, but I’m okay -” you said, a little slower than you normally would, and your eyes started drooping. “- I have run diagnostics. It’s not a threat but it’s draining my batteries fast.”

Everyone calmed a little bit when they remembered you weren’t entirely human anymore. “Okay. You should rest. We still have a few hours in the air,” Agent May suggested.

“I already sent a message to Happy. He will know how to handle me.”

***

True to your word, Happy was there when the quinjet landed. “Thank you,” he said to the team before rolling you away with the technical team. Maria arrived at the lab shortly after Happy got you on the table.

“What can I do to help, Happy?”

The man didn’t startle. Instead, he shoved a bunch of wires into her hands before going to the computers and starting to type away.

“Can you attach those to Y/N’s body, please?”

Maria did as she was instructed to. After a few minutes, with the cables secure in various parts of your body, the lab light dimmed while the one at the exam table lit up. A program booted on the computer.

“Hello. I’m Edward, I’m Y/N Stark’s personal AI. What can I do for you?”

“Run diagnostics on Y/N,” Happy commanded the AI with practised precision.

“It appears that Ms Stark has suffered a stab wound on her left ribcage. No internal wiring or hardware has been compromised, except for Battery Pack A.”

“Suggested course of action?”

“Replace Battery Pack A, and initiate skin repair protocol.”

Happy nodded solemnly while checking his work tablet. Maria stood to the side, just watching everything unfold.

“Edward, I just connected you to this lab. Run an inventory of supplies and equipment.”

After literally five seconds. “Inventory complete.”

Happy smiled, thinking how well you had programmed Edward. “Do we have what you need to start Y/N’s repairs?”

“Yes.”

“Then initiate.”

“Copy that, Harold.”

***

The replacement of your battery pack and the repair of your skin took only 45 minutes, all of it done by the industrial robots from Stark Industries. Happy and Maria looked on intently as machines whirred around you.

“Repairs complete,” Edward informed Maria and Happy, who both sighed in quiet relief.

“How long before she wakes, Edward?” Maria asked.

“In about 5 hours tops, Agent Hill. The protocol includes putting her in stasis as she fully charges all four of her batteries.”

“In that case, I’m going to get us something to eat first. This is stressful,” Happy declared, already almost halfway to the door.

***

Five hours and a box of pizza later, you opened your eyes. You turned your head away from the light and saw Happy sleeping on the sofa in the lab. You smiled softly to yourself, happy to still have the man by your side.

“Hey.” You turned to your other side, surprised to see Maria sitting by your bedside and holding a book on robotics.

“Hey,” you choked out. There’s that unreadable expression on Maria’s face again. Lucky for you, you didn’t have to ask her any more about it before Maria closed the book in her hand and threw herself in your arms.

“Hey, I’m here. I’m okay.” You tried to reassure her, but Maria only tightened her hold on you.

“You scared me,” she mumbled against your chest.

You were inclined to make a joke about being invincible but, by the looks of it, Maria wouldn’t appreciate it. So, you stopped deflecting to protect yourself from catching pesky feelings. You wrapped your arms around her a little tighter.

“I’m sorry. I didn’t mean to.”

#avengers imagine#natasha romanoff x reader#carol danvers x reader#wanda maximoff x reader#maria hill x reader#black widow x reader#captain marvel x reader#scarlett witch x reader#carolnat x reader x wandahill#carolnat#blackhill#unholy pentagon#natasha romanoff imagine#carol danvers imagine#wanda maximoff imagine#maria hill imagine#black widow imagine#captain marvel imagine#scartlett witch imagine#raven writes


Morality of a Supercomputer: Why GLaDOS is not evil (or inherently a bad person)

(under a readmore for length)

Part A. Aperture Itself is an Immoral Corporation Run By Immoral Employees

- Cave Johnson was the CEO of Aperture from 1947 to sometime in the 1980s. We can infer that his employees either a) had similar beliefs to himself or b) were content to adhere to his ridiculous whims while also turning a blind eye.

- Cave never, ever expresses remorse for killing his first set of test subjects. He treats it as an inconvenience. He literally doesn’t care that he killed a bunch of promising members of society during a bunch of horribly conceived tests with a horribly built device that was proven not to work. Your introduction to the Repulsion Gel includes him making a joke about someone breaking all the bones in their legs.

- Aperture put to market two separate gels that were not fit for human consumption. Again, Cave doesn’t seem to care one bit about this. He takes a stance more akin to ‘oh well, we’ll just… use them for this experimental quantum tunnelling device, I guess’.

- Aperture’s unethical disaster experiments are all played off as inconsequential or mildly amusing inconveniences.

- Cave does not take responsibility for his own ill-advised actions. He pushes them off onto everybody else, and people accepted this responsibility willingly.

- Cave publicly disrespects, insults, and demeans almost every person that works for him. He fires without notice people who disagree with him.

- Cave’s plan, after killing the astronauts and Olympians, was to specifically entice the homeless, the mentally ill, seniors, and orphaned children to do his tests for him. That is, he specifically wanted populations that nobody would cause a fuss about if they went missing. This tells us that Cave Johnson has no regard for human life and, additionally, that his employees willingly went along with this. Aperture was taken to court not for injured astronauts, but for missing ones. Somebody got rid of what was left of them. People also agreed to this marketing campaign and put it into action.

- Because Aperture wanted only populations that nobody would miss, we can infer something very important: nobody ever survived the testing process. Every single person who went into the testing tracks died.

- During Test Chamber 18 in Portal, there is a room with craters in the wall panels where the energy pellets have been colliding with them. No other chamber has this. Therefore, before Chell arrives, nobody has ever solved that chamber. Every person who has gone through the testing track has died before reaching this point. In Portal, GLaDOS is not shown to have the ability to reorder the facility. All she is able to do is position turrets and activate the neurotoxin, so we know that she does not reorder the tests. They are static and she merely resets them after they are complete/failed partway through.

- Test Chamber 19 appears unfinished, which follows from the previous point that Test Chamber 18 was never solved so Test Chamber 19 was never fully built. GLaDOS, additionally, seems baffled that Chell ends up at the end of it and is forced to improvise when she escapes, which GLaDOS does not know how to do because she has never done it before.

- In Lab Rat, neither Henry nor Doug Rattmann seem to be overly concerned with whether GLaDOS is a person or not, and not at all bothered by the fact that Caroline is supposed to be in there. They talk about her like she is a bothersome computer and that is all. You could argue that Henry does not know about Caroline; however, Doug’s murals prove that he does know. This doesn’t seem to influence his decisions whatsoever.

- Lab Rat also states that they turn GLaDOS off and on at will, ‘off’ usually involving a ‘kill switch’. Given that GLaDOS is a computer from the late eighties/early nineties, which took forever to turn off and on, and GLaDOS is shown to be immediately shut off, the ‘kill switch’ is probably actually her being crashed. Crashing software creates a whole host of problems for non-sentient software; therefore, every time they turned her back on again her system would have been a horrible mess. This would have created massive system instability… which nobody seemed to care very much about.

- GLaDOS is described on a PowerPoint presentation as ‘arguably alive’, but in the same presentation they propose selling her to the military as a fuel line de-icer that doesn’t have the ability to do anything else. Therefore, they are fully aware that she is alive and she is a person… they just don’t care.

- It is explicitly shown that most of the work done on GLaDOS is carried out without her consent. The very act of Caroline’s upload is done with the consent of neither of them. Henry is extremely blasé about the Morality Core and there are approximately forty cores shown in the clear bin during the end of Portal 2. This implies that they have been installing them on her for a very long time with no regard at all for her or the Cores, even though they have very blatantly failed multiple times. They just build sentient, arguably alive AI with the sole intention of corralling GLaDOS temporarily, and when the Cores fail they are basically put into storage forever.

- GLaDOS’s job in Portal was to supervise the tests. As concluded above, she doesn’t demonstrate the ability to build them herself. Therefore, she was watching people be maimed and killed within human-designed tests under the supervision of her engineers before she ever killed anyone herself.

- Aperture had over ten thousand people in cryogenic storage waiting to be awoken for testing. The Extended Relaxation Vaults at the beginning of Portal 2 have a ‘packing date’ (in 1976/77, when GLaDOS did not exist even as a concept yet) and an ‘expiry date’ (in 1996, which means that they were all brain-dead before GLaDOS took over the facility). GLaDOS does not have any human test subjects between the conclusion of Portal 2 and the first DLC, and she doesn’t know about the existence of the human vault. Therefore, Aperture put over ten thousand people into indefinite, unstable storage with no regard whatsoever to what state they would be in when, and indeed if, they woke up, and they did not so much as tell the AI they put in charge of the facility that these people existed.

- The very fact they gave literal people – including children – an expiry date when they put them into a metal box for twenty years really tells you all you need to know about Aperture as a whole.

What does this teach GLaDOS?

- Aperture was a cesspool of bad people doing bad things and not caring about the consequences.

- You do not need someone’s permission to do something to them. You merely beat away at them until they break.

- Death is part of the tests.

- Dying during the test is a controlled variable. There is no such thing as ‘passing the test’.

- GLaDOS does not actually understand death.

- People are not people. They are objects. They are objects to be modified, put into storage, and sold at will, and any harm that comes to them is meaningless and should be disregarded as an impediment to progress.

Part B. GLaDOS, as We Know Her, is Pure AI

Before we get into this, it is important to establish that it is implied in-universe GLaDOS herself is actually the DOS; that is, GLaDOS herself is the operating system. If you believe GLaDOS and Caroline are the same person, that’s fine; please hear me out regardless.

- She has a prototype chassis in the Portal 2 DLC with an earlier version of her OS on it. This has an in-game date of 1989 and, since we know that GLaDOS took over the facility nearabouts the Black Mesa Incident in 1998/1999, we know that she was in development for at least ten years.

- There was, at one point, a Portal 2 hype website where you did a survey and it was run by an early version of GLaDOS; it is no longer active but it was a real thing.

- GLaDOS is incredibly, genuinely clueless about things that any regular person knows: she believes a bird has malicious intentions to destroy her facility; she believes that motivation consists of telling blatant, obvious lies to people; her grasp of social niceties is completely nonexistent.

- Because it is stated that there were multiple versions of GLaDOS, this means that she is a person built from nothing. Everything she knows was either provided to her via Aperture’s database or taught to her in some way by GLaDOS’s engineers. GLaDOS does not know a single thing she was not directly taught by somebody else.

- GLaDOS is never shown to have a ~normal~ conversation with anybody. Every time she talks, it is to convince someone to do what she wants them to do. Because she is AI, this behaviour was learned and, given how the engineers at Aperture regard her and the Cores, it is not illogical to say that pretty much the only conversations they had with their AI were probably along the lines of ‘do this for me because my neck is on the line here’.

- During the instatement of the Morality Core, Doug Rattmann tells Henry that the Morality Core is not going to be enough because you can always ignore your conscience. However, in the second half of Portal 2, GLaDOS is shown to be unable to ignore it. What is the difference?

- The Morality Core was not a true conscience. It was, yet again, the scientists telling her what to do. It was, like all the other Cores, an annoying new set of restrictions that had no purpose except to impede her. Henry describes it as ‘the latest in AI inhibition technology’. It did not exist to teach her morals. It was created to slow her down.

- It’s entirely possible that nobody actually told her what morals were or what the Morality Core was actually for. Additionally, we don’t actually know what the Morality Core was telling her, since it is never mentioned and the Core never speaks.

- The conscience that GLaDOS comes across is her own conscience; she literally says so (‘I’ve heard voices all my life, but now I hear the voice of a conscience, and it’s terrifying, because for the first time… it’s my voice’) which, unlike the Morality Core, she cannot ignore.

What does this teach us about GLaDOS?

- GLaDOS was in development for at least ten years but all she learned about personal interaction was how to manipulate people.

- GLaDOS was created in an environment that did not care about morals and did not teach her any but, when she failed to toe the moral line, she had morals forced on her.

Part C. GLaDOS’s Thought Process

- GLaDOS, as pure AI, operates on a binary scale; that is, everything to her is either yes or no, on or off, with her or against her. Prior to being placed in a potato, GLaDOS never had a reason to think outside of this binary. GLaDOS has no concept of an in-between and does not understand grey reasoning.

- As a robot whose sole purpose was to run variations on the same test ad nauseam, it would never have occurred to GLaDOS to do anything else.

- GLaDOS says about herself in an unused piece of dialogue: ‘I’m brilliant. […] I’m the most massive collection of wisdom and raw computational power that’s ever existed. I’m not bragging. That’s an objective fact.’ Therefore, she knows she could do literally anything with her intelligence and her hardware… but that would require her to think outside her binary of testing and not testing. So she does nothing.

- This is established several times: as soon as she reactivates after her death, she starts testing. As soon as she sends Chell away, she sends her robots into testing. As soon as she finds the test subjects, she starts testing. She constructs ‘art pieces’… which are simply more tests. Her ‘training’ for the co-op bots is… you guessed it… tests.

- As an extension of the above point: she could build any robot she wants or anything she wants. She in fact talks about doing other experiments. She doesn’t. She opts to build testing robots and test elements. And that’s it.

- Upon discovering her conscience/the ability to think in grey, she says, ‘I’m serious! I think there’s something really wrong with me!’ She doesn’t understand that this is a normal thing for a person to have or to be able to use. Conscience and morality are things that were neither demonstrated nor explained to her and so when she comes across them herself, she thinks it is a problem.

- Additionally, when Chell fails to react to GLaDOS’s dialogue about her fledgling ability to think in grey, she immediately reverts to her old standbys of binary thought and manipulation: ‘You like revenge, right? Everybody likes revenge! Well, let’s go get some!’ She’s now aware of the concept of a middle ground, but does not know how to do anything with it.

- GLaDOS states about Chell: ‘I thought you were my enemy, but all along you were my best friend.’ This is another example of her binary thought process. A person who helps you when it’s mutually beneficial, as Chell does during Portal 2, is not necessarily your best friend. At best, they are usually your temporary ally. But because GLaDOS only understands binary concepts, that’s the conclusion she comes to.

- She states ‘the best solution is the easiest one, and killing you is hard’. This slots neatly into her binary: if killing you down here is hard, then letting you live up there is easy. In Want You Gone she says, ‘when I delete you maybe I’ll stop feeling so bad’ so we know Chell exists outside of her binary at that point, but she doesn’t know what to do about it so she forces a binary decision on the situation anyway.

What does this teach us about GLaDOS?

- GLaDOS lacks the ability to think in grey, and when able/forced to do so she either becomes frightened or forces the situation into a decision with only two options.

Part D. What All of This Means

Gathering the previous points gives us these clues about GLaDOS’s behaviour:

- Aperture was a cesspool of bad people doing bad things and not caring about the consequences.

- You do not need someone’s permission to do something to them. You merely beat away at them until they break.

- Death is part of the tests.

- Dying during the test is a controlled variable. There is no such thing as ‘passing the test’.

- GLaDOS does not understand death.

- People are not people. They are objects. They are objects to be modified, put into storage, and sold at will, and any harm that comes to them is meaningless and should be disregarded as an impediment to progress.

- GLaDOS was in development for at least ten years but all she learned about personal interaction was how to manipulate people.

- GLaDOS was created in an environment that did not care about morals and did not teach her any but, when she failed to toe the moral line, she had morals forced on her.

- GLaDOS lacks the ability to think in grey, and when able/forced to do so she either becomes frightened or forces the situation into a decision with only two options.

What this tells us about how GLaDOS operates is the following:

- There are no consequences for anything whatsoever, as long as you’re the one in charge.

- You can do whatever you want to somebody else, as long as you come out on top.

- Death is meaningless.

- She sees people as objects and she treats them as such.

- She does not know how to talk to people. Only at them.

- She knows that morals are rules people want her to follow, but she doesn’t understand them and has never seen them in action.

- Grey thought is anathema to her. If something does not fit into her binary, she will force it to.

All of these rules are challenged when Chell, through her actions, personally demonstrates morality to GLaDOS. Chell helps GLaDOS not because she needs to, but because it’s the right thing to do. Instead of attempting to skip town and leave GLaDOS to fend for herself (which she was well within her rights to do), Chell returns GLaDOS to her chassis. And at this point GLaDOS immediately demonstrates grey reasoning both when she elects to save Chell and when it is shown that she does not kill Wheatley. This is not the behaviour of an evil person. This is the behaviour of someone who understands there was something wrong with their previous actions and has decided to do something about it. GLaDOS’s behaviour towards the co-op bots is less malicious than it is the fumblings of somebody whose worldview has skewed, but they aren’t sure what to do about it and there aren’t any binary answers. Because of her extreme isolation, it is going to take her a long, long time to get things right, but once she is exposed to the concept of grey reasoning she does attempt to figure out what to do with it.

GLaDOS is not evil, nor are most of her actions inherently ill-intentioned. Some of them are. To claim all of her actions are born of evil and come from a place of inherent malice shows a misunderstanding of the sort of environment Aperture was and the kind of people who would populate such an environment. At the end of the day, she’s still not a very nice person. But to write her off as evil is oversimplifying a lot of what we are told about her and a misunderstanding of computer science as a whole. Artificial intelligence is not developed in a vacuum and a computer only does exactly what it’s told. All of GLaDOS’s behaviours are learned. The people who created her may have been evil, but she herself is not. And when given the choice to be something else, something she never knew was an option… she takes it.


Why doctors want Canada to collect better data on Black maternal health

A growing body of data on the increased risks black women in the UK and US face during pregnancy has highlighted the shortcomings of Canada’s color-blind approach to health care, according to black health experts and patients.

According to official figures, black women in the UK and the US are four times more likely to die during pregnancy or childbirth than white women. A UK study recently published in The Lancet found that black women are 40 percent more likely to have a miscarriage than white women. This demographic tracking is not available in Canada.

“We don’t have this data for our country. So it is difficult to know exactly what we are dealing with,” said Dr. Modupe Tunde-Byass, a Toronto Obstetrician-Gynecologist and President of Black Physicians of Canada. “We can only extrapolate from other countries.”

Black babies are more likely to be born prematurely in Canada

Tunde-Byass said one of the few race-based studies examining pregnancies in Canada, conducted by researchers at McGill University in 2016, showed that 8.9 percent of black women gave birth to premature babies, compared with 5.9 percent of their white counterparts.

Across all demographics, overall premature birth rates are lower in Canada than in the United States, where 12.7 percent of black women and 8 percent of white women give birth prematurely. However, the gap between the two populations is roughly the same in both countries, challenging the assumption that Canada’s universal health system would produce similar results for all women.


“We kind of live in this bubble where we don’t believe that there are inequalities within our health system, even more so [because] our health system is free and universal for everyone,” said Tunde-Byass, adding that knowledge gaps have contributed to “unconscious biases within the health system.”

For example, she said that black women tend to have shorter pregnancies and are at higher risk of having premature births. This means that health professionals should be alert to their pain symptoms, as these can be an indicator of labor.

Myths about black women and pain

However, Toronto-based midwife Shani Robertson said the opposite often happens. “There really is a myth that black women feel less pain than white women.”

She said that when a black woman experiences pain, there may be a misconception that she should be able to tolerate it. “This can mean that black women are offered less pain medication, sometimes not even being offered pain medication, depending on what they are experiencing, that their experiences are not believed,” Robertson said.

Black and racialized Canadians have been particularly hard hit by the COVID-19 pandemic. (Evan Mitsui / CBC)

Health Canada documented the phenomenon in a 2001 report, Certain Equal Treatment and Responsiveness Circumstances in Accessing Healthcare in Canada, which recorded criticism from black communities that black women feel health professionals performing routine procedures disregard their pain during the birthing process, a pattern the report traced back to the belief of health professionals that black skin is ‘hard’.

Robertson traced the misconception to the racist legacy of the American doctor J. Marion Sims, who is considered the father of modern gynecology and who experimented on black slaves without anesthesia in the 19th century.

Robertson said collecting race and ethnicity data alongside other birth registration details, as is already the case in the US and UK, could help address health inequalities.

Growing awareness of the importance of race-based data during the COVID-19 pandemic has led the Canadian Institute for Health Information to propose national research standards on race and ethnicity to “understand patient diversity and measure inequalities.”

Recently, the Canadian Society of Obstetricians and Gynecologists recognized the presence of systemic racism in health care and inequitable health outcomes.

Invisible, neglected and disrespected

Toronto-based Kimitra Ashman said she was ready to give birth before 40 weeks of gestation, a time frame more common among white women. But she said she was sent home, even though black women often have shorter gestational periods.

She was later induced.

“I don’t think it would have ended in an emergency caesarean section if they’d listened to me when I was at 37 weeks and said, ‘My body feels right. My pelvis is opening. What should I do?’ I was told, ‘Oh no, no, no, no, it’s too early. Put your feet up.’”


The emergency caesarean section left her with a keloid, a type of raised scar that is more common on black and dark skin that can sometimes be avoided with the right surgical technique.

“I think if her training had made her aware of the special needs of a black woman’s pregnancy, I think the situation would have been avoided,” she said.

Instead, she said she had no sensation for two years and had a “raised scar that is very painful”.

Ashman said these weren’t her only experiences with prejudice in the health system.

“It started when I came to check in and a nurse at the front desk rolled her eyes. It’s built in when you go to appointments and people assume you don’t have insurance. They assume you have no education, that you’re a single mother.

“I felt invisible, neglected and disrespected.”

Eliminating Racism in Canadian Healthcare

Ashman went to see a black doctor for her second pregnancy. “I felt good,” she said. “I felt understood.”

Tunde-Byass said that black women experience health care differently than other Canadians. “So these are things that we have to acknowledge that exist in the structure of our system … and then find a way to reduce racism.”

Part of the problem is that there are not enough black doctors in relation to the population.

Sister Jenthia and Dr. Angela Branche give Natalie Hall a coronavirus survival kit to increase participation in vaccine studies in Rochester, NY on October 17, 2020, as part of a door-to-door education program for the black community. (Lindsay DeDario / Reuters)

A U.S. study of Florida birth registers showed high death rates among black babies – but rates were lower when patients had a black doctor.

Margaret Akuamoah-Boateng had no complications when she gave birth to her second child in the small community of Alliston, Ontario earlier this year.

But she admitted she was still concerned about being treated by an all-white hospital staff. “And I just thought, ‘I’m looking for someone who has experience with black people just because I feel good when the doctor is black, or his staff is black, or he has operated on black people before.'”

Her doctor brought a black doctor with him. “He didn’t have to be there, but it was great … it’s like having a relative there,” said Akuamoah-Boateng.

________________________________________________________________________________________________

For more stories about the experiences of black Canadians – from racism against blacks to success stories within the black community – see Being Black in Canada, a CBC project that black Canadians can be proud of.

source https://dailyhealthynews.ca/why-doctors-want-canada-to-collect-better-data-on-black-maternal-health/

Text

What To Expect From The Sex Robot Revolution

AI-powered sex robots are taking the sex doll industry by storm. These pleasure bots won’t be going away anytime soon.

TPE and silicone sex dolls have been the norm for the past several years. However, sex doll manufacturers have released another offering that will surely bring a massive change to the sex doll market. Not much is known about sex robots, but they are definitely here to stay. Sex robots look set to dominate the market. Here’s how to survive!

What to Expect from Sex Robots

Sex robots will bring about a revolution, but is it going to be positive or negative? Are these bots simply sophisticated sex toys or will they serve a remarkable therapeutic purpose? A few samples are already available on the market and here’s what you can expect from them.

Sex robots are equipped with artificial intelligence (AI), something that regular sex dolls don’t have. AI-powered sex robots will be capable of holding conversations and storing them in their artificial memory. In other words, it will be possible to pick up a conversation with these pleasure bots at any time.

Sex robots are also equipped with ways of communicating with their owners. These bots are capable of learning about the social and sex life of their owner. Sex robots will be able to understand their owner’s body and record their favorite positions and fantasies. These robots can also record what turns their owner on. Sexbot owners will feel like they are fulfilling their desires with a real person who understands their feelings.

The robots are also capable of making facial expressions. They know how to respond to their owner thanks to their built-in AI. These robots can grin or smile. Sex robots have plug-ins that warm up continuously, so they feel warm like an actual person.

Current sex robots have heated orifices and skin that feels real. These bots can also groan when touched. They may have customizable skin tone, accents, eye color, hairstyles, and orifices. Some sexbots have limited mobility and an eye-tracking function.

Benefits of Sex Robots

The dawn of sex robots is inevitable, so people are beginning to consider their benefits. Pleasure droids could offer an alternative for those who have dangerous or socially unacceptable sexual preferences.

These bots could also reduce human trafficking and replace prostitution. They could offer companionship to those in long-term care facilities. Having robot sex on a regular basis could also make non-robot sex seem more pleasurable. Pleasure droids could be used to offer sexual relief to those who are suffering from enforced celibacy due to aging, ill-health, or disability. They could also help couples deal with long-distance relationships.

Sex robots could fulfill one’s desire to have sex with a robot that resembles a celebrity. They might help one gain sexual expertise and knowledge without dealing with the pressure and stress of real-life intimacy. Sex robots might offer relief to those who have sex-related problems, such as libido irregularity, disability, and erectile dysfunction. These bots may also promote safe sex provided that they are made of bacteria-resistant fibers.

Sex robots are going to be expensive for sure. These bots may cost around $6,000, so they’re going to be a significant investment. What’s good about sex robots, though, is that you can use them for a lifetime. So, you won’t be spending thousands of dollars for just one night of fun.

As technology advances, sex robots are going to become more robust than the ones currently available on the market. Spare parts will also be available, so you don’t need to worry in case you need them later down the track.

Downsides of Sex Robots

Pleasure bots may help reduce human suffering and improve a couple’s sex life. However, just like with most things in life, sex robots do have downsides too.

Sexbots could reinforce social isolation. It is also difficult to meet sex robots using dating apps.

Some say that sexbots used for treating paraphilias could increase paraphilic orientations like pedophilia. Sex robots are being designed primarily by males with gendered ideas, which can increase the risk of creating bots with biased gender standards that reinforce stereotypes. The development of these pleasure robots may establish gender relations that disregard the dignity of those involved in sexual affairs.

These pleasure bots have already met resistance from various activist groups in the form of anti-sex robot campaigns. According to them, sex robots will cause a rift between couples and eventually eliminate the need for human touch.

Sexbots are expected to be slowly included in our lives and become an important part of a couple’s sex life. These AI-powered sex dolls are also going to help people who can’t have a normal sex life due to injury or age. Sex robot manufacturers claim that sex robots will help remove a lot of bad people from the streets because their attention will be focused on the robots. They can assault, rape, or beat the robots and fulfill their wildest fantasies with them instead of on a human.

Activist groups, however, don’t share the same sentiment. According to them, sex robots will only increase users’ desire for a real person. Feminists and women’s activists are also worried that sex robots will replace women in their partners’ lives. They feel like they are being cheated on by their men. Sex doll manufacturers argue that the first sex toys to be produced were dildos and vibrators.

According to them, men had to cope with the knowledge that their partners had lifelike penises and were using the toys to satisfy their sexual desires. In other words, sex robots should not be viewed with so much negativity. Sex robot manufacturers also say that they’re going to produce male sex robots for women. The male pleasure bots will be customizable for a better sexual experience as well.

Conclusion

Our sex lives can get dull as we age. Perhaps we are not satisfied with what we currently have. Sex robots are going to spice up our sex lives. These pleasure bots are going to bring back the excitement and sexual desire that some people have lost along the way.

Text

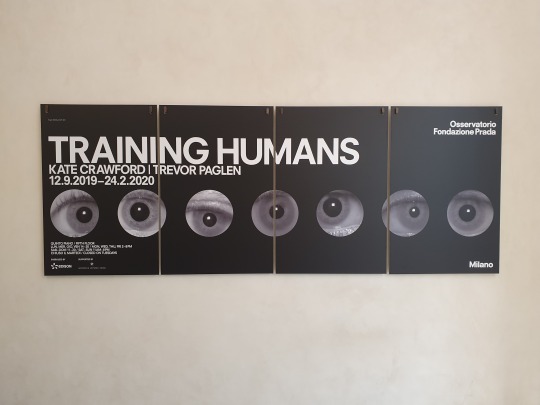

Training Humans

“Our images now look back at us. And we won’t always like what, or how, they see.”

- Exhibit statement.

Artificial Intelligence (AI) has changed the way we view the world, but more importantly, how we ourselves are viewed. Machine vision is used to capture most features the human body can display. It can recognize gait, irises, fingerprints, faces and gestures. All these features are analysed and, as this exhibit demonstrates, are then used to classify people by gender, age, race, emotional state and so forth.

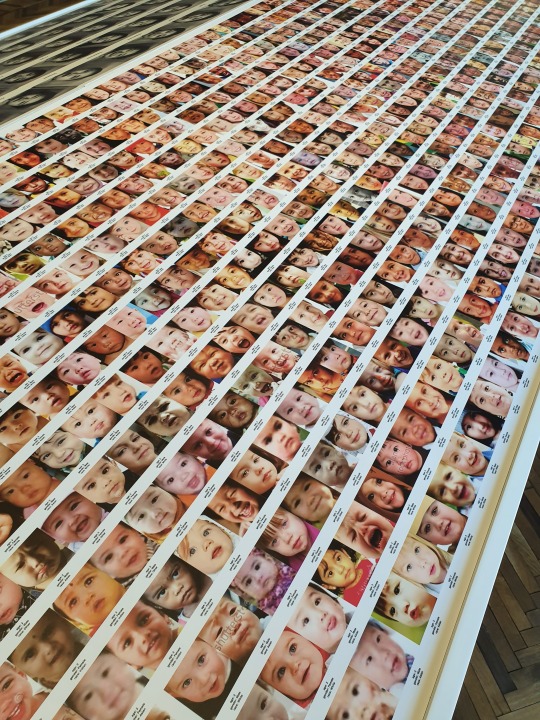

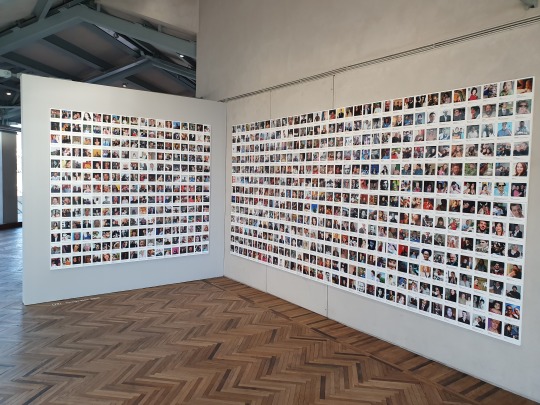

“Training Humans” is a curated selection of photographs that have been used to train AI, and it is possibly the first exhibit of its kind. This photography exhibit is also unusual in its almost complete absence of “traditional artists”. Here you will find vernacular photographs from everyday life, such as family events, ID photos and birthdays. Crawford states in the exhibit book (#26) that it is essentially “accidental art” made by humans.

If you are training different kinds of AI systems, you need datasets. If a company wants to build a facial recognition system, it has to train the AI to see faces. That means it needs a great many photos of faces, with identification metadata built in, to optimize the system.

Different examples from selected datasets have now been extracted from their servers and are displayed beautifully on the windows and walls of Osservatorio Fondazione Prada in Milan. The curators Trevor Paglen and Kate Crawford give us an impression of how easily images of ourselves are harvested and labelled.

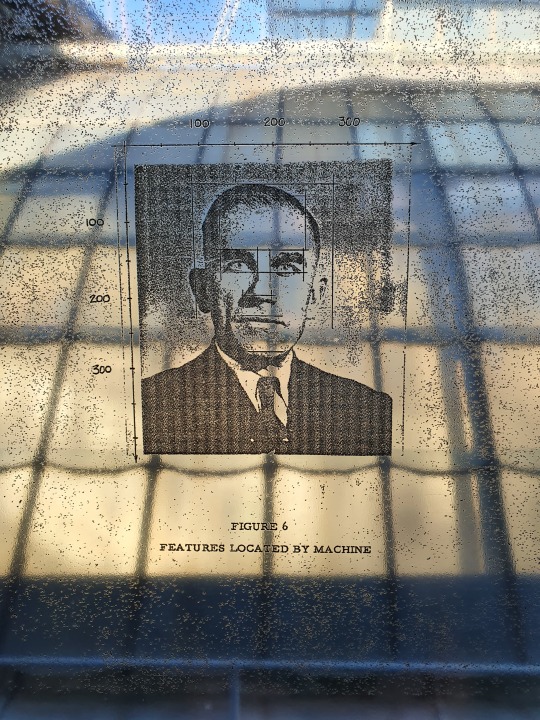

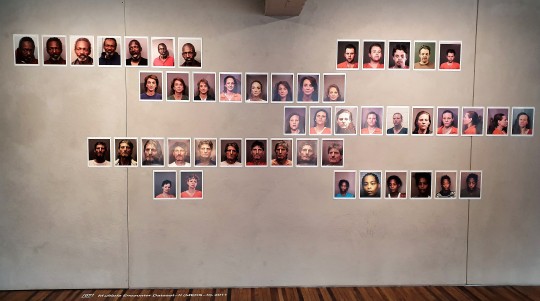

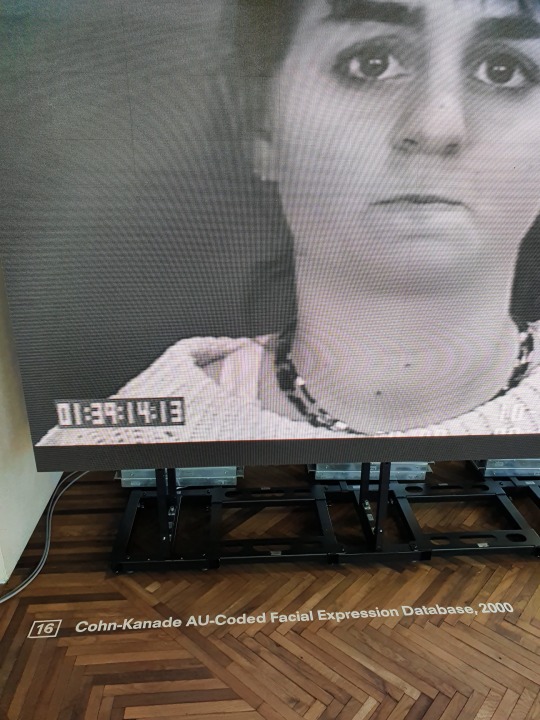

The first floor of the exhibit presents different examples of “early” datasets. The earliest is from 1963: “A Facial Recognition Report”, funded by the CIA, which dates from before the term Artificial Intelligence was as widespread as it is today. Even then, researchers were experimenting with automated systems in a military context. A more modern dataset is the Multiple Encounter Dataset-II (MEDS-II). This set consists of mugshots of deceased criminals supplied by the FBI to the National Institute of Standards and Technology. Because the people in these photos were arrested multiple times, researchers could use the images to learn how people age and especially how a face can change over time. The worst thing about this is that the people in the photos never gave their consent for the images to be used, and the photos were taken before they were convicted of any crimes. The other datasets study gaits, irises and how different lighting can change the appearance of a face. Generally, we find that the early datasets explore the training of AI within the bigger institutions and research laboratories.

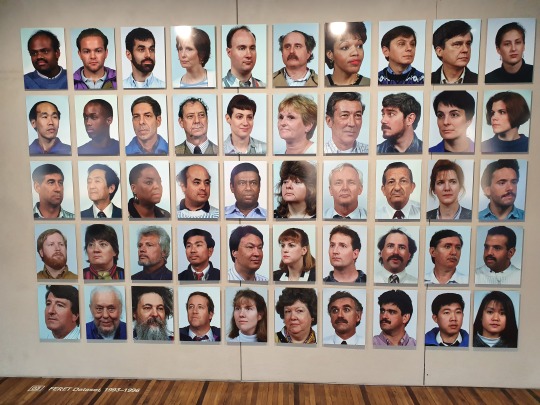

What I found interesting is the difference between the “early” sets (1990s) and sets from recent years. The U.S. Department of Defense Counterdrug Technology Development Program Office developed the FERET set to support automatic face recognition for intelligence and law enforcement personnel. This set consists of portraits of 1199 people. The people portrayed have put on make-up and their nicest shirts and earrings. The result appears very formal and bears a resemblance to portraits from anthropological expeditions (like the portraits Sophus Tromholt made of the Sami people in the 1880s).

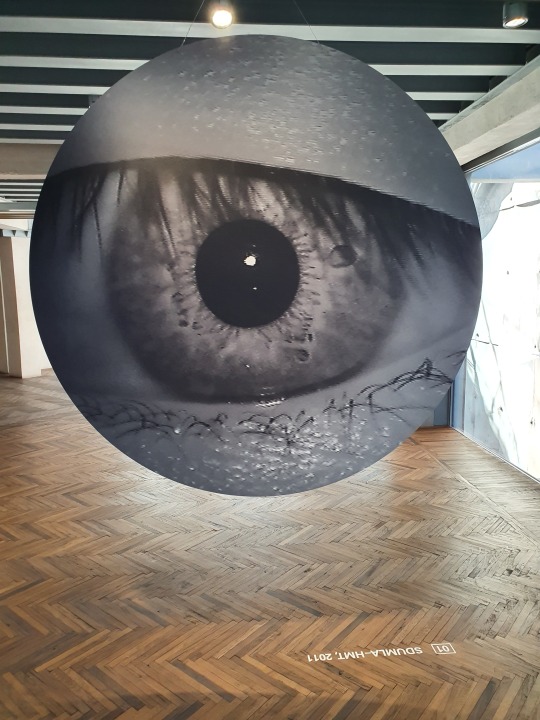

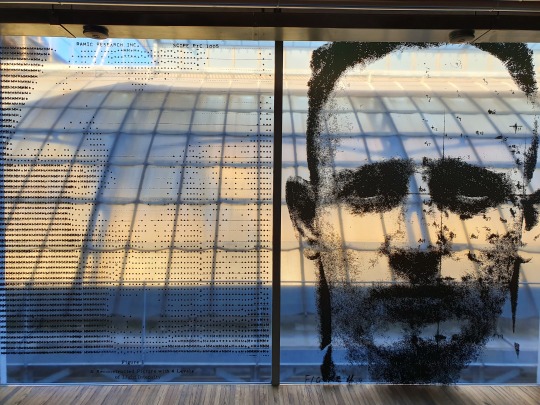

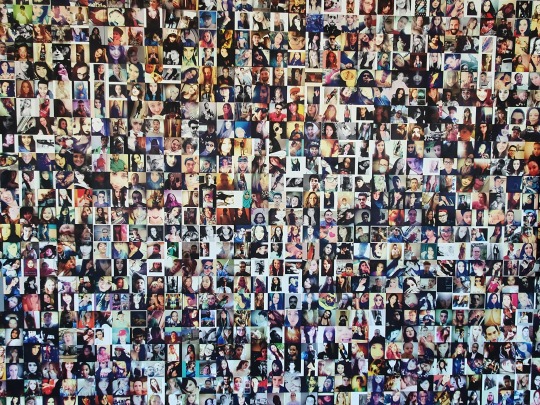

When you enter the second floor this tendency is gone. Except for the JAFFE set (Japanese Female Facial Expression) and the Cohn-Kanade AU-Coded Expression Database, all the images in the datasets are now “found” and “collected” images. Gathered from platforms such as Flickr and Instagram, the images have lost their formal appearance and are much more intimate and personal. The modern datasets also bear another visual trait. The curators made a visual point of underlining the massive shift in scale. When you see the enormous walls of miniature photographs, you become overwhelmed by the amount of data presented.

These sets consist of billions of photos extracted from the internet and social media. Since these platforms became a part of everyday life, the amount of online photography has exploded. With bigger servers and better algorithms, machine vision as a technology is growing quickly, and so are the problems and responsibilities that come with it. People’s private photos have been labelled, categorized and harvested without their knowledge or consent.

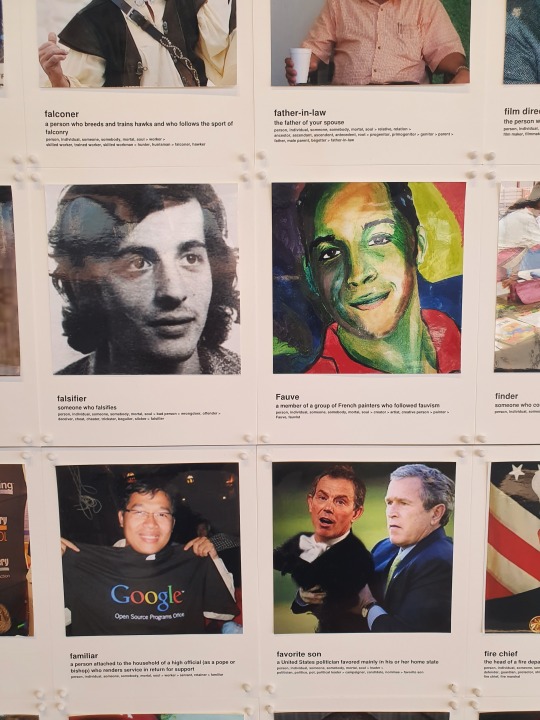

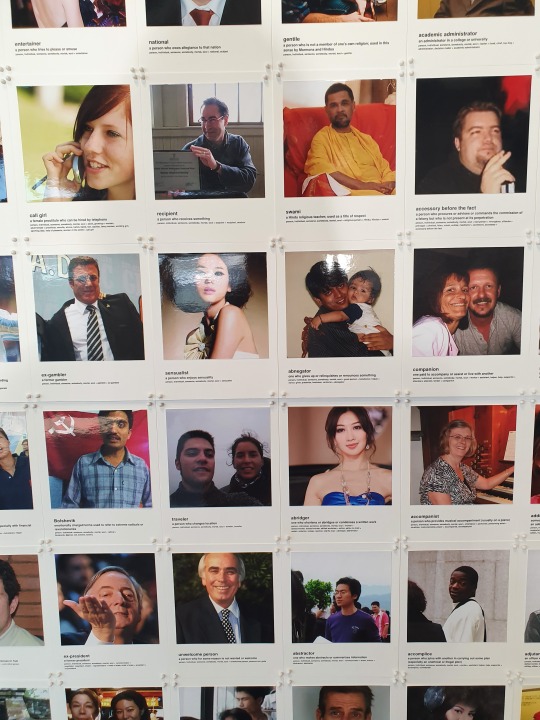

The curators seem eager to highlight the issues of labeling people within these massive datasets. The simple measurements and metadata are becoming problematic moral judgements. This is especially apparent in ImageNet, created by Li Fei-Fei and Kai Li from Stanford and Princeton Universities “to map out the world of objects”. The dataset contains 14,197,122 images organized into 21,841 categories. Paglen and Crawford chose to focus on the humans, which amounts to a million images sorted into over two thousand categories. Browsing the curated selection of people, my first impression is how absurd this appears. It is so massive and so full of errors. I would be sad to find my own image up there, among the different cruel and unscientific labels such as “bad person”, “failure” and “loser”.

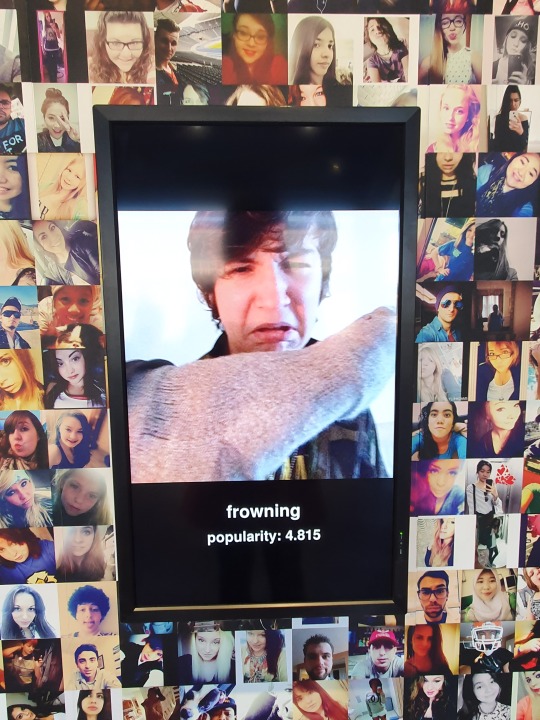

The last section is actually an art installation by Trevor Paglen himself. He developed “ImageNet Roulette” in 2019; it reveals how neural networks work and examines how people are classified in machine learning systems. The American girl with the red hair in front of me was recognised as “Irish”. I was recognised mainly as a non-smoker, with age: 25-32, gender: female, emotion: fear (0,99). These results would change rapidly if you moved your head slightly.

“Training Humans” is both intimate and terrifying. All the precious moments and selfies people share with their loved ones end up as data in an industrial-scale dataset. The exhibit shows how images of us are represented (how the machine sees us) and how we have been fragmented into binaries. Paglen and Crawford demonstrate the obvious flaws, but also how powerful machine vision can appear. Data is the new currency, and big corporations utilize machine vision technology with a complete disregard for bias within the datasets. The need to classify humans goes way back, but it has hardly ever been successful in its task. Crawford wonders if AI will produce a shift in how we understand ourselves, our relationship to others, and society itself. So far, very few people benefit from these technologies; most are only subjected to them. You leave the exhibition thinking twice before uploading another selfie.

Photos and text by Linn Heidi Stokkedal 13.02.2020

Text

Thoughts on Endgame - Spoiler Free (bc I haven’t seen it yet)

Long post but worth the read, imo.

When I was waiting for the Doctor Who 50th Anniversary, I spent my time, naturally, writing the episode on my own, in my head. Granted, it was full of self-inserts, but they were self-inserts rich in character and motivations. There wasn’t any big alien baddie, but the Doctor had to deal with a big spatial conundrum. I think I went with something like a wormhole that put disparate people and civilizations together on Earth, and the Doctor had to work with Torchwood and UNIT to solve it (as well as my own self-insert group, shut up, it was cool.)