#statistical mechanics

Text

Now it is our turn to study statistical mechanics...

#physics#science#thermodynamics#statistical mechanics#mechanics#insidesjoke#memes#meme#funny#student memes#humour#humor#comedy#most popular

337 notes

Text

Physics Friday #7: It's getting hot in here! - An explanation of Temperature, Entropy, and Heat

THE PEOPLE HAVE SPOKEN. This one was decided by poll. The E = mc^2 post will happen sometime in the future.

Preamble: Thermodynamic Systems

Education level: High School (Y11/12)

Topic: Statistical Mechanics, Thermodynamics (Physics)

You'll hear the word system being used a lot... what does that mean? Basically, a thermodynamic system is a collection of things that we consider to affect each other.

A container of gas is a system as the particles of gas all interact with each other.

The planet is a system because we and all the life/particles on Earth interact together.

Often, when dealing with thermodynamic systems we differentiate between open, closed, and isolated systems.

An open system is one where both particles and energy can pass between the system and its surroundings. Closed systems only allow energy, not particles, to enter and exit the system (usually via a "reservoir").

We will focus mainly on isolated systems, where nothing enters or exits the system at all, unless we deliberately change what counts as the "system".

Now imagine we have a system, say, a container of gas. This container will have a temperature, pressure, volume, density, etc.

Let's make an identical copy of this container and then combine it with its duplicate.

What happens to the temperature? Well, it stays the same. Whenever you combine two objects at the same temperature, the temperature stays the same. If you pour a pot of 20 C water into another pot of 20 C water, no temperature change occurs.

The same occurs with pressure and density. While there are physically more particles in the combined system, the volume has increased by the same factor.

This makes things like Temperature, Pressure, and Density intensive properties of a system: they don't change when you combine a system with copies of itself. They act more like averages.

However, duplicating a system and combining it with itself doubles the volume, and it also doubles the amount of 'stuff' inside the system.

Thus things like volume are called extensive: they scale with the amount and size of the system, so they act more like totals than averages.

This distinction is important for understanding both heat and temperature. The energy of a system is an extensive property, whereas temperature is intensive.

Temperature appears to be a sort of average of thermal energy, which is one way we can analyse it, but this picture only really works for simple systems like gases and isn't universal. It's useful to use a more abstract definition of temperature.

Heat, on the other hand, is much more concrete. It is the amount of energy transferred because of a difference in temperature. This transfer is driven by the second law of thermodynamics, which says that entropy tends toward a maximum.

But instead of tackling this from a high-end perspective, let's jump into the nitty-gritty ...

Microstates and Macrostates

The best way we can demonstrate the concept of Entropy is via the analogy of a messy room:

We can create a macrostate of the room by describing it in general terms:

There's a shirt on the floor

The bed is unmade

There is a heater in the centre

The heater is broken

Note how several possible arrangements fit this same macrostate. For example, the shirt on the floor could be red, blue, black, or green.

A microstate of the room is a specific arrangement of items, to the maximum specificity we require.

For example: the shirt is green, and the right leg of the heater is broken. If we care about even more specificity, we could say a microstate of the system is:

Atom 1 is Hydrogen in position (0, 0, 0)

Atom 2 is Carbon in position (1, 0, 0)

etc.

Effectively, a macrostate is a general description of a system, while a microstate is one specific configuration of it.

A microstate is considered attached to a macrostate if it satisfies all of the macrostate's conditions. "Dave is wearing a shirt" and "Dave is wearing a red shirt" can both be true, but it's clear that if Dave is wearing a red shirt, he is also wearing a shirt.

What Entropy Actually is (by Definition)

The multiplicity of a macrostate is the total number of microstates attached to it. It is basically a "count" of all the possible arrangements given the restrictions.

We give the multiplicity the symbol Ω.

We define entropy as the natural logarithm of Ω, scaled by a constant:

S = k ln Ω

where k is Boltzmann's constant, which gives entropy its units. The reasons we take the logarithm are:

Combinatorics often involves factorials and exponents, a.k.a. big numbers, so we use the log function to cut them down to size.

When you combine a system in a macrostate with Ω = X and an independent system in a macrostate with Ω = Y, the total multiplicity is X × Y, and ln(XY) = ln X + ln Y. So the logarithm makes entropy add up across combined systems, i.e. it makes entropy extensive.
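As a minimal sketch of why the logarithm makes entropy additive, the snippet below uses coin-flip systems (the example used later in this post) as stand-ins. The particular system sizes are arbitrary, multiplicities are counted with Python's `math.comb`, and entropy is measured in units of k, i.e. S/k = ln Ω.

```python
import math

def multiplicity(n_coins: int, n_heads: int) -> int:
    """Number of microstates (coin sequences) with exactly n_heads heads."""
    return math.comb(n_coins, n_heads)

def entropy_over_k(omega: int) -> float:
    """Entropy in units of Boltzmann's constant: S/k = ln(Omega)."""
    return math.log(omega)

# Two independent systems, each in a chosen macrostate (illustrative values).
omega_a = multiplicity(10, 5)   # 252 microstates
omega_b = multiplicity(4, 2)    # 6 microstates

# Every microstate of A can pair with every microstate of B, so multiplicities multiply.
omega_total = omega_a * omega_b

s_a = entropy_over_k(omega_a)
s_b = entropy_over_k(omega_b)
s_total = entropy_over_k(omega_total)

print(omega_a, omega_b, omega_total)   # 252 6 1512
print(s_a + s_b, s_total)              # equal up to floating-point rounding
```

The multiplicities multiply, but the entropies simply add.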

So that's what Entropy is: a measure of the number of possible arrangements of a system given a set of preconditions.

Order and Chaos

So how do we end up with the popular notion that Entropy is a measure of chaos? Well, consider a sand castle:

Image Credit: Wall Street Journal

A sand castle, compared to the surrounding beach, is a very specific structure. It requires specific arrangements of sand particles in order to form a proper structure.

This is opposed to the beach, where any loose arrangement of sand particles can be considered a 'beach'.

In this scenario, the castle has a very low multiplicity, because the macrostate 'sandcastle' allows only a very restrictive set of microstates, whereas the loose sand of the beach has a very large set of possible microstates.

In this way, we can see how the 'order' and 'structure' of the sand castle results in a low-entropy system.

However, this doesn't explain how we can get such complex systems if entropy is always meant to increase. For instance, how can life exist if the universe tends to turn things into a mess of particles, AND the universe started as a mess of particles?

The consideration to make, as we'll see, is that chaos is not the full picture: large amounts of energy can be used to lower entropy locally, at the cost of increasing it even more somewhere else.

Energy Macrostates

There's still a problem with our definition. Consider two macrostates:

The room exists

Atom 1 is Hydrogen in position (0, 0, 0), Atom 2 is Carbon in position (1, 0, 0), etc.

Macrostate one has a multiplicity so large it might as well be infinite, and is so general it encapsulates all possible states of the system.

Macrostate two is so specific that it only has a multiplicity of one.

Clearly we need some standard way to define macrostates.

What we do is define a macrostate by one single parameter: the amount of thermal energy in the system. We could also include things like the volume or the number of particles, but for now, a macrostate corresponds to a specific energy of the system.

This means that the multiplicity, and therefore the entropy, becomes a function of the thermal energy, U:

S(U) = k ln Ω(U)

The Second Law of Thermodynamics

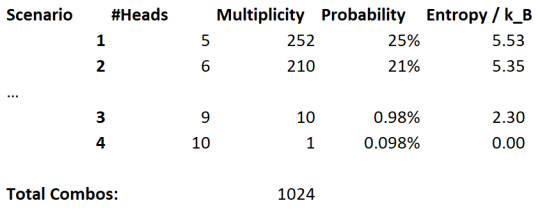

Let's consider a system which is determined by a bunch of flipped coins, say, 10 flipped coins.

H T H T H H H T T H

This may seem like a weird example, but it is genuinely useful. For example, consider atoms aligned in a magnetic field, where each atom's magnetic moment can point either along the field or against it, just like a coin landing heads or tails.

We can define the energy of the system as a function of the number of heads we see. Thus an energy macrostate would be "X coins are heads".

Let's now say that every minute, we reset the system. i.e. we flip the coins again and use the new result.

Consider the probability of ending up in a certain macrostate each minute. We can use the binomial distribution to calculate this probability:

$$P(k) = \binom{n}{k} p^k (1-p)^{n-k}$$

Here, the column notation is the choose function (the binomial coefficient), which counts how many ways the k heads can be placed among the n coins, since we are not concerned with the order in which the heads and tails appear.

The p^k term is the probability of landing a head (p = 50%) raised to the power of the number of heads we get (k). Likewise, (1 - p)^(n-k) accounts for the n - k tails.

The choose function is symmetric, so with a fair coin, getting k heads is exactly as likely as getting k tails.
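The binomial formula can be checked directly. This is a minimal sketch using only Python's standard library (`math.comb`, available from Python 3.8); the function name `macrostate_probability` is an illustrative choice, not anything from the original post.

```python
import math

def macrostate_probability(n: int, k: int, p: float = 0.5) -> float:
    """Binomial probability of exactly k heads in n independent flips with P(head) = p."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)

n = 10
probs = [macrostate_probability(n, k) for k in range(n + 1)]

# Probabilities over all macrostates sum to 1.
print(sum(probs))
# With a fair coin the distribution is symmetric: 3 heads is as likely as 3 tails (7 heads).
print(probs[3], probs[7])
```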

Let's come up with some scenarios:

An equal number of heads and tails are flipped

There are exactly two more heads than tails (i.e. 6-4)

All coins are heads except for one

All coins are heads

And let's see these probabilities:
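These four probabilities, along with the entropy of each macrostate, can be computed with a short sketch. It assumes fair coins and measures entropy in units of k; the helper names are illustrative.

```python
import math

N_COINS = 10

def probability(k_heads: int, n: int = N_COINS) -> float:
    """Probability of exactly k_heads heads with fair coins: C(n, k) / 2**n."""
    return math.comb(n, k_heads) / 2**n

def entropy_over_k(k_heads: int, n: int = N_COINS) -> float:
    """S/k = ln(Omega), where Omega = C(n, k) counts microstates in the macrostate."""
    return math.log(math.comb(n, k_heads))

scenarios = {
    "equal heads and tails (5-5)": 5,
    "two more heads than tails (6-4)": 6,
    "all heads except one (9-1)": 9,
    "all heads (10-0)": 10,
}

for label, k in scenarios.items():
    print(f"{label:34s} P = {probability(k):.6f}   S/k = {entropy_over_k(k):.3f}")
```

With 10 coins this gives 252/1024 ≈ 0.246, 210/1024 ≈ 0.205, 10/1024 ≈ 0.0098 and 1/1024 ≈ 0.00098, with entropy falling in the same order.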

Clearly, it is more likely that we find an equal number of heads and tails, but all coins being heads is not too unlikely (about 1 in 1000). Also notice that the entropy correlates with probability here: a macrostate with a larger entropy is more likely to occur.

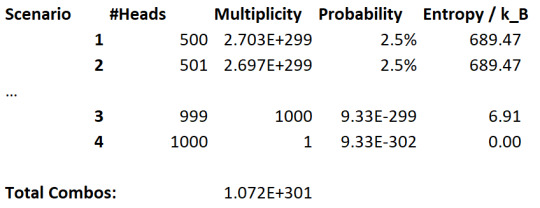

Let's now increase the number of coin flips to 1000:

Well, now we can see this probability difference much more clearly. The "all coins are heads" macrostate is vanishingly unlikely, while macrostates close to maximum entropy are comparatively very likely.

If we keep increasing the number of coins, this effect only becomes more extreme, and the probability piles up ever more sharply around the maximum-entropy macrostate. And thus we get the tendency for this system to maximise its entropy, simply because that is the most likely outcome.
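To put numbers on this, here is a sketch that computes exact binomial probabilities for 1000 fair coins using Python's arbitrary-precision integers. The window of ±50 heads around 500 is an arbitrary choice for illustration.

```python
import math

n = 1000
total = 2**n  # number of equally likely microstates (exact integer)

def probability(k: int) -> float:
    """Exact probability of k heads out of n fair coins (big-integer ratio, then float)."""
    return math.comb(n, k) / total

p_half = probability(500)
p_all_heads = probability(1000)
# Probability of landing within 50 heads of the 50/50 macrostate.
p_near_half = sum(math.comb(n, k) for k in range(450, 551)) / total

print(f"P(500 heads)      = {p_half:.4f}")
print(f"P(all heads)      = {p_all_heads:.3e}")
print(f"P(450..550 heads) = {p_near_half:.4f}")
```

The single most likely macrostate (exactly 500 heads) only has a probability of a few percent, but almost all of the probability sits within a narrow band around it, while "all heads" is around 10^-301.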

In real life, systems don't suddenly reset and restart. Instead we want to consider a system where every minute, one random coin is selected and then flipped.

Consider a system at maximum entropy undergoing this process. To reach a low-entropy state (like all heads), it would have to do something incredibly unlikely, which would take an incredibly long time.

But for a system at minimum entropy, any change at all brings the entropy higher.

Thus the system has the tendency to end up being "trapped" in the higher entropy states.

This is the second law of thermodynamics. It doesn't make a strict statement about very small systems, where random fluctuations matter and we have to deal with the statistics differently. But for large systems, we end up effectively ALWAYS seeing a global increase in entropy.
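The single-coin process described above can be simulated in a few lines. This sketch assumes "flipped" means re-tossed, so the chosen coin lands heads or tails with 50% probability each; the number of coins, the number of steps and the random seed are arbitrary choices.

```python
import random

def relax(n_coins: int = 1000, steps: int = 20_000, seed: int = 1) -> list:
    """Start with every coin heads (minimum entropy) and, at each step, pick one
    coin at random and re-toss it. Returns the number of heads after each step."""
    rng = random.Random(seed)          # seeded so the run is reproducible
    coins = [True] * n_coins           # True = heads
    heads = n_coins
    history = []
    for _ in range(steps):
        i = rng.randrange(n_coins)
        new_face = rng.random() < 0.5  # fair re-toss
        heads += int(new_face) - int(coins[i])
        coins[i] = new_face
        history.append(heads)
    return history

history = relax()
for step in (0, 500, 1000, 2000, 5000, 19_999):
    print(f"after step {step + 1:5d}: {history[step]} heads")
```

Starting from all heads, the count drifts down toward about 500 within a few thousand steps and then just fluctuates around it; in practice it never finds its way back to 1000.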

How we get temperature

Temperature is often informally described as the capacity to transfer thermal energy. It sort of acts like a "heat potential" in the same way we have a "gravitational potential" or "electrical potential".

Temperature and Thermal Energy

What is the mathematical meaning of temperature?

Temperature is defined as a rate of change, specifically:

$$\frac{1}{T} = \frac{dS}{dU}$$

(Reminder: this assumes Entropy is exclusively a function of energy.)

The reason we define it as a derivative of entropy with respect to energy is that entropy is an emergent property of a system, whereas thermal energy is something we can directly change.

What this rule means is that a system at a very low temperature has an entropy that reacts greatly to small inputs of energy. A low-temperature system is thus really good at drawing energy from outside, since every bit of energy it gains raises its entropy a lot.

Conversely, a system at a very high temperature has an entropy that reacts only very slightly to small inputs of energy.

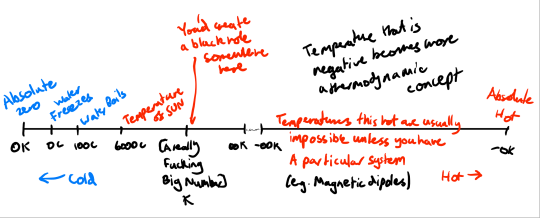

At the extremes, absolute zero is where dS/dU becomes infinite: any addition of energy raises the entropy enormously and lifts the system out of absolute zero. According to the third law of thermodynamics, as the temperature approaches absolute zero, the entropy approaches a constant minimum value (zero, if the system has a single lowest-energy microstate).

Infinite temperature is where dS/dU = 0: adding energy no longer increases the entropy at all, so it's effectively impossible to pump more heat into the system from anything at a finite temperature.

Negative Temperature???

Considering our coin-flipping example, let's try and define some numbers. Let's say that the thermal energy of the system is equal to the number of heads flipped, so that Ω(U) is "n choose U".

Using Stirling's approximation for the factorials, this gives us an entropy of:

S/k ≈ U ln[n/U - 1] - n ln[1 - U/n]

The derivative of this is:

(1/k) dS/dU = ln[n/U - 1]

Note how this value becomes negative once U > n/2, i.e. once more than half of the coins are heads. Since 1/T = dS/dU, this implies a negative temperature!
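A quick numerical check of these expressions, comparing the Stirling forms against the exact log-multiplicity ln C(n, U) computed with `math.lgamma`. Energy is measured in units of "one head" and entropy in units of k; n = 1000 and the sampled U values are arbitrary, and the derivative is approximated with a central finite difference.

```python
import math

def ln_omega(n: int, u: int) -> float:
    """Exact ln C(n, u): log-multiplicity of the macrostate with u heads (energy U = u)."""
    return math.lgamma(n + 1) - math.lgamma(u + 1) - math.lgamma(n - u + 1)

def entropy_stirling(n: int, u: int) -> float:
    """Stirling approximation S/k = U ln(n/U - 1) - n ln(1 - U/n), valid for 0 < u < n."""
    return u * math.log(n / u - 1) - n * math.log(1 - u / n)

def slope_exact(n: int, u: int) -> float:
    """Central finite difference of ln(Omega) in U, i.e. (1/k) dS/dU = 1/(kT)."""
    return (ln_omega(n, u + 1) - ln_omega(n, u - 1)) / 2

def slope_stirling(n: int, u: int) -> float:
    """Analytic derivative of the Stirling form: (1/k) dS/dU = ln(n/U - 1)."""
    return math.log(n / u - 1)

n = 1000
for u in (100, 300, 500, 700, 900):
    s = slope_exact(n, u)
    # kT in units of (energy per head); a zero slope means infinite temperature.
    kT = math.inf if abs(s) < 1e-9 else 1 / s
    print(f"U={u:4d}  dS/dU={s:+.4f} (Stirling {slope_stirling(n, u):+.4f})  kT={kT:+.3f}")
```

The slope, and hence the temperature, is positive below U = n/2, passes through infinite temperature at U = n/2, and is negative above it.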

But how is this possible? What does this mean?

Negative temperatures are less exotic than they sound; they just mean that inputting energy decreases entropy and removing energy increases entropy. (This can only happen in systems like our coins, where there is a maximum possible energy.)

What this means is that a system at a negative temperature, when put in contact with any positive-temperature system, will spontaneously dump energy out of itself, since losing energy increases its entropy.

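This actually means that negative temperature is beyond infinite temperature. A fully expanded temperature scale, from coldest to hottest, looks like this:

+0 K (absolute zero) → 273.15 K (0 C) → 1.4 × 10³² K (Planck temperature) → +∞ K = -∞ K → -0 K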

[The Planck temperature, about 1.4 × 10³² Kelvin, is often described as the highest meaningful temperature; beyond it, current physics is expected to break down.]

[Note that 0 K = -273.15 C = -459.67 F and 273.15 K = 0 C = 32 F]

This implies that -0 K is a sort of 'absolute hot': a temperature so hot that the system desperately tries to bleed energy, much like a system at absolute zero tries to suck energy in.
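The ordering in this extended scale is easiest to see using the inverse temperature β = 1/T, which follows from 1/T = dS/dU (writing it as β is a standard convention, not something used elsewhere in this post). In this minimal sketch the example temperatures, in kelvin, are arbitrary, and T = 0 is excluded because β would be infinite.

```python
import math

def beta(t: float) -> float:
    """Inverse temperature 1/T (in 1/K). T must be nonzero; infinite T gives beta = 0."""
    return 1.0 / t

def gives_heat(t1: float, t2: float) -> float:
    """Return whichever of two (different) temperatures spontaneously gives heat to the other.
    Moving energy dU from system 1 to system 2 changes total entropy by k(beta2 - beta1) dU,
    so heat flows from the smaller beta (the 'hotter' system) to the larger beta."""
    return t1 if beta(t1) < beta(t2) else t2

temps = [-0.01, 300.0, -300.0, 1e6, 0.01, math.inf, -1e6]
# Sort from coldest to hottest: the coldest system has the largest beta.
coldest_to_hottest = sorted(temps, key=beta, reverse=True)
print(coldest_to_hottest)
print(gives_heat(-300.0, 1e6))   # the negative-temperature system gives up heat
```

Sorted by β, the list runs from just above 0 K, through room temperature and +∞, into the negative temperatures, ending at just below 0 K.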

Heat and the First Law of Thermodynamics

So, what do we do with this information then? How do we actually convert this into something meaningful?

Here, we start to pull in the first law of thermodynamics, which is closely tied to the thermodynamic identity:

dU = T dS - P dV

In this identity, T is the temperature, P the pressure and V the volume, and the 'd' terms just mean a tiny change in that variable. Note that U is now expanded to the total internal energy of the system, so that it also accounts for things like compression/expansion work done on the system.

This identity gives us the first law:

dU = Q - W

where Q = T dS is the heat added to the system (equivalently, dS = Q/T for a reversible change).

And W = P dV is the (mechanical) work done by the system as it expands.

Both heat and work describe changes to the energy of the system: heating a system means you are inputting an energy Q.

If the total energy of the system doesn't change (dU = 0), then any work done by the system must be matched by an equal amount of heat flowing in (Q = W).
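To illustrate the case where the internal energy doesn't change, here is a sketch of a reversible isothermal expansion of an ideal gas. It assumes W = ∫P dV, the ideal gas law PV = NkT (which appears later in this post), and that an ideal gas's U depends only on T; the amount of gas, temperature and volumes are arbitrary illustrative values.

```python
import math

K_B = 1.380649e-23        # Boltzmann constant, J/K (exact SI value)
N = 6.02214076e23         # one mole of particles (illustrative choice)
T = 300.0                 # temperature in K, held fixed (isothermal)
V1, V2 = 0.010, 0.020     # initial and final volume in m^3 (illustrative)

def pressure(volume: float) -> float:
    """Ideal gas law, PV = NkT, solved for P."""
    return N * K_B * T / volume

# Work done BY the gas, W = integral of P dV, via the trapezoid rule.
steps = 100_000
dv = (V2 - V1) / steps
work_numeric = sum(
    0.5 * (pressure(V1 + i * dv) + pressure(V1 + (i + 1) * dv)) * dv
    for i in range(steps)
)
work_exact = N * K_B * T * math.log(V2 / V1)

# For an ideal gas U depends only on T, so dU = 0 here and the first law
# dU = Q - W forces Q = W: all the heat that flows in leaves again as work.
delta_u = 0.0
heat = delta_u + work_exact
entropy_change = heat / T            # dS = Q/T for a reversible process

print(f"W (numeric) = {work_numeric:.2f} J, W (exact) = {work_exact:.2f} J")
print(f"Q = {heat:.2f} J, delta S = {entropy_change:.3f} J/K")
```

Doubling the volume of one mole at 300 K takes in roughly 1.73 kJ of heat, all of which leaves again as work.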

Since we managed to phrase temperature as a function of thermal energy, we can now develop what's known as an equation of state of the system.

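For a monatomic ideal gas (e.g. helium), we have a thermal energy:

U = 3/2 NkT

where N is the number of particles and k is the Boltzmann constant. (A gas of diatomic molecules like hydrogen, H2, also stores energy in rotation, giving roughly 5/2 NkT at room temperature.)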

We can also define another equation of state for an ideal gas:

PV = NkT

Which is the ideal gas law.
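Both equations of state can be evaluated directly. This is a minimal sketch, assuming a monatomic ideal gas (so that U = 3/2 NkT applies) and SI units; the amount of gas, temperature and volume are illustrative values.

```python
K_B = 1.380649e-23       # Boltzmann constant, J/K
N_A = 6.02214076e23      # Avogadro constant, 1/mol

def monatomic_ideal_gas(n_particles: float, temperature: float, volume: float):
    """Return (U, P) for a monatomic ideal gas from the two equations of state:
    U = (3/2) N k T and PV = N k T."""
    nkt = n_particles * K_B * temperature
    internal_energy = 1.5 * nkt        # J
    pressure = nkt / volume            # Pa
    return internal_energy, pressure

# One mole at 0 C (273.15 K) in 22.414 litres (illustrative values).
U, P = monatomic_ideal_gas(N_A, 273.15, 0.022414)
print(f"U = {U:.1f} J, P = {P:.0f} Pa")   # P comes out close to 1 atm (101325 Pa)
```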

So what is heat then?

Q is the energy transferred into a system as heat, whereas U is the total thermal (internal) energy the system contains.

For the ideal gas, the thermal energy is proportional to temperature (U = 3/2 NkT). In many simple cases, we can express thermal energy as temperature scaled by the size of the system.

Thermal energy is strange. Unlike other classical forms of energy, it can be difficult to classify it as potential or kinetic.

It's a form of kinetic energy as U relies on the movement of particles and packets within the system.

It's a form of potential energy, as it has the potential to transfer its energy to others.

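Generally, the idea that temperature is related to the 'speed' at which particles move is not too far off. In fact, for an ideal monatomic gas, we often relate the average kinetic energy of a single particle to the temperature:

1/2 m ⟨v^2⟩ = 3/2 k T

where ⟨v^2⟩ is the mean square speed of the particles. (Multiplying both sides by N gives back U = 3/2 NkT.) This is generally useful when performing calculations.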
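Rearranging this relation gives a typical particle speed. This sketch assumes helium as the example gas (atomic mass about 4.0026 u) at 300 K; both choices are illustrative.

```python
import math

K_B = 1.380649e-23                     # Boltzmann constant, J/K
ATOMIC_MASS_UNIT = 1.66053906660e-27   # kg
M_HELIUM = 4.0026 * ATOMIC_MASS_UNIT   # mass of one helium atom, kg (illustrative gas)

def rms_speed(mass: float, temperature: float) -> float:
    """Solve (1/2) m <v^2> = (3/2) k T for the root-mean-square speed sqrt(<v^2>)."""
    return math.sqrt(3 * K_B * temperature / mass)

print(f"{rms_speed(M_HELIUM, 300.0):.0f} m/s")
```

This gives roughly 1.4 km/s. Note that the speed grows only as the square root of T: quadrupling the temperature doubles it.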

Of course, temperature, as mentioned earlier, acts as a sort of potential, loosely describing the energy per particle that is available to be transferred.

Heat, then, is effectively the transfer of thermal energy caused by a difference in temperature. We quantify how much heat a system absorbs or gives off per degree of temperature change using its heat capacity, C = Q/ΔT; note that this is different from temperature.

Temperature is about how much the system 'wants' to give off energy, whereas heat capacity is about how much energy it can give off for a given drop in temperature. The heat actually transferred depends on both.
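A small sketch of how a temperature difference and heat capacity together set the heat transferred: mixing two amounts of water. It assumes an isolated container (no losses) and a constant specific heat of about 4186 J/(kg·K); the masses and temperatures are arbitrary, and the function name `mix` is hypothetical.

```python
SPECIFIC_HEAT_WATER = 4186.0   # J/(kg K), approximate value for liquid water

def mix(m_hot: float, t_hot: float, m_cold: float, t_cold: float):
    """Mix two masses of water in an isolated container (no losses assumed).
    Energy conservation, C_hot (t_hot - T_f) = C_cold (T_f - t_cold), with C = m c,
    gives the final temperature as a heat-capacity-weighted average."""
    c_hot = m_hot * SPECIFIC_HEAT_WATER     # heat capacity, J/K
    c_cold = m_cold * SPECIFIC_HEAT_WATER
    t_final = (c_hot * t_hot + c_cold * t_cold) / (c_hot + c_cold)
    heat = c_hot * (t_hot - t_final)        # heat transferred from hot to cold, J
    return t_final, heat

t_f, q = mix(1.0, 80.0, 2.0, 20.0)   # 1 kg at 80 C poured into 2 kg at 20 C
print(f"final temperature = {t_f:.1f} C, heat transferred = {q:.0f} J")
```

Mixing water at equal temperatures (e.g. `mix(1.0, 20.0, 1.0, 20.0)`) transfers no heat, matching the 20 C example near the start of this post.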

Conclusion

This post honestly was a much nicer write-up, and I'd say the same about E = mc^2. The main reason is that I already know about this stuff: I was taught all of it 1-4 years ago in high school or university.

My computer is busted, so I'm using a different device to work on this, and because of that I don't have access to my notes. So I don't actually know what I have planned for next week. I think I might decide to replace it anyway with something else. Maybe the E = mc^2 one.

As always, feedback is very welcome. Especially because this topic is one I should be knowledgeable about at this point.

Don't forget to SMASH that subscribe button so I can continue to LARP as a youtuber. It's actually strangely fun saying "smash the like button", but I digress. It doesn't ultimately matter if you wanna follow, idc; some people just don't like this stuff weekly.

#stem#academics#physics friday#physics#stemblr#science#thermodynamics#temperature#entropy#statistical mechanics

39 notes

Text

42 notes

Text

heartbreaking: the worst subject you know just made a great proof

#i don't enjoy statistics and i'm not super interested in classical mechanics#so why is the derivation of the Gibbs distribution one of the coolest things I've seen this year#mathblr#mathematics#maths#math#physics#statistical mechanics

9 notes

Note

excuse me wh a t???????? im sorry i need to know more desperately

Update on this!! It was not quantum and I just misremembered! It was statistical mechanics, which is thermodynamics! Not too far off but still horrendous!

6 notes

Text

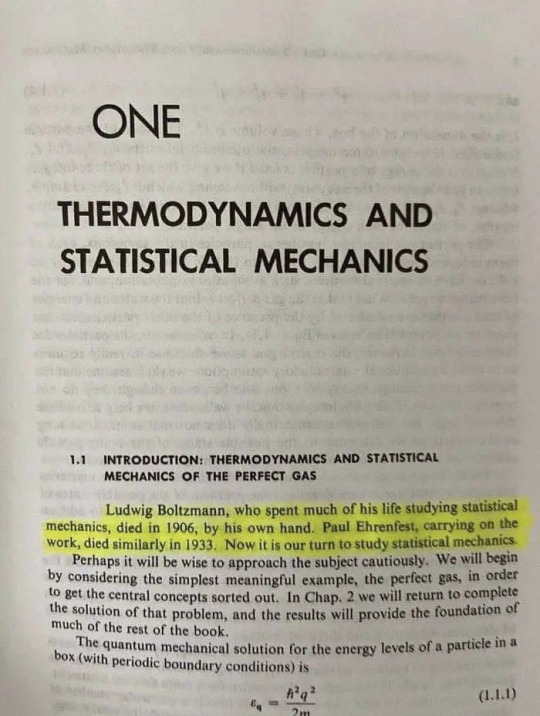

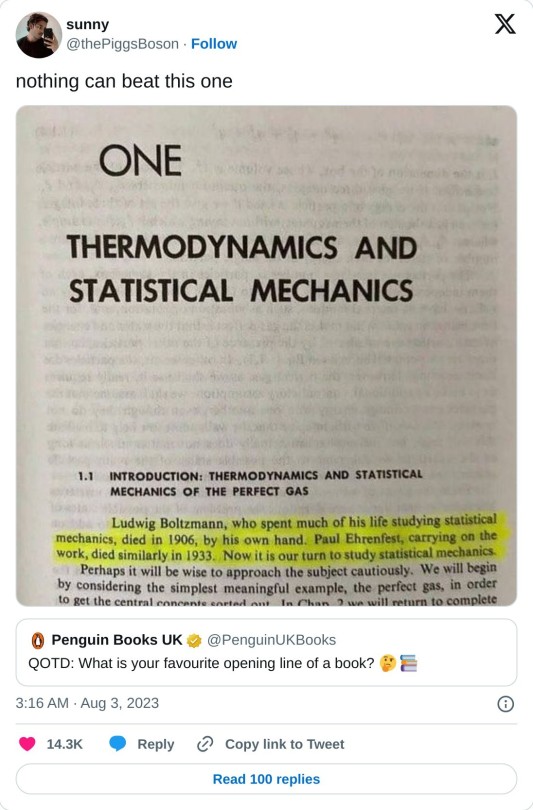

Ludwig Boltzmann, who spent much of his life studying statistical mechanics, died in 1906, by his own hand. Paul Ehrenfest, carrying on the work, died similarly in 1933. Now it is our turn to study statistical mechanics.

―David L. Goodstein, introduction to States of Matter (1975)

6 notes

Note

Carter is special. Some people have synesthesia, and some people have photographic memories, but Carter can see microbes, and stars up close, with nothing but his naked eyes. What is it like to be Carter’s coworker or acquaintance, you may ask? It is fine. It is sometimes great. You should ask Carter if you really want to know, though.

(Don't forget to send me one more!)

I am as old as I feel, and my hair is a bristling mass of sun-scorched black and grey, cut short. I am middle-aged and fat and wrinkled, I wear glasses and I have a receding hairline. My name is Carter, and I want to kill a man.

I am a trained assassin. I could have retired long ago but I like killing people for money. I like being better than them. I killed people to support my parents when I was very young, I killed people to survive when I was even younger, and I have killed people in far more personal ways since then. I am not a patriotic man. I am not a religious man. I killed more people when I was younger. I am proud of that.

You may be thinking that I am evil. Or you may be thinking that I am good. Most people are evil or good in the same way as fathers are evil or good: they are either the kind who sacrifices their left kidney for you, or the kind who yells at you for tripping over the kitchen table. That is not me. I am not like that.

I used to be kind. I thought that kindness was, in the end, the defining attribute of a human being. And in the end, all this was for kindness: I killed people so that I could eat. I killed people so I could live. I killed people for my parents, for my children, for no reason other than their bodies and their blood. When I was a child, I picked up a torch so that I could light up people's lives, a little bit. When I was still a child, I wandered through this country to find the people that needed the most help, and I asked them to help me, and I asked them to be kind to me, and they were.

(from arrt, used with permission)

3 notes

Text

A #zettelkasten or commonplace book provides a catalytic surface onto which ideas in the “solution of life” can more easily adhere, speeding their reaction with ideas you’ve already seen and collected.

Once combined via linking, further thinking, and writing, they can be released as novel ideas for everyone to use.

#catalysts#combinatorial creativity#commonplace books#statistical mechanics#Zettelkasten#note taking#Social Stream

2 notes

Text

Day 42, October 13, 2022

I succeeded in introducing my mutation into my DNA. This means I can now proceed to the next step, which includes making and purifying the protein. I am now on track to tag this protein with Nanogold, and hopefully the next steps go well by the end of the year too. If not, then we might have to change our plan and try another method. But I feel confident this could work.

4 notes

Text

continually thinking about how people misunderstand Tenet as a time-travel film, when of course it's really a thermodynamics film.

And as we all know, it's impossible to understand an introductory course in thermodynamics.

4 notes

Text

Jenann Ismael

Totality, self-reference and time (March 2021)

Friday, December 23, 2022

6 notes

Text

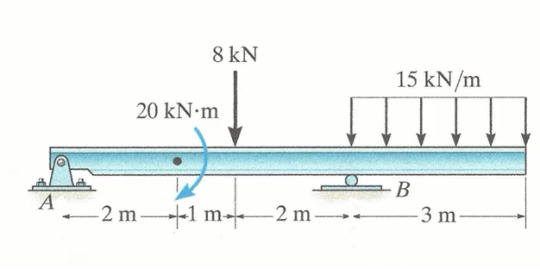

this thing is probably gonna make me cry, just look at it

#engineering#statistical mechanics#forces#moments#diagrams#I curse hibbeler and beer for making all of our kind to go trough this

2 notes

Photo

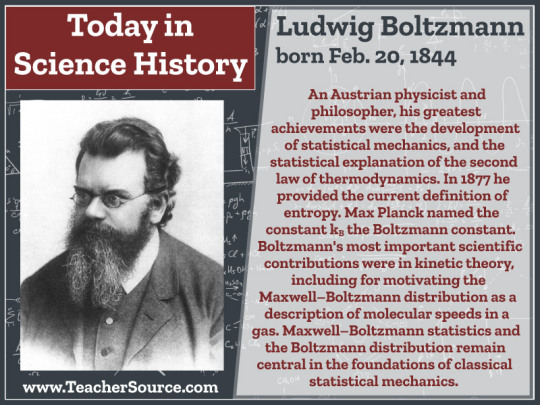

Ludwig Boltzmann was born on February 20, 1844. He was an Austrian physicist and philosopher, and his greatest achievements were the development of statistical mechanics and the statistical explanation of the second law of thermodynamics. In 1877 he provided the current definition of entropy. Max Planck named the constant kB the Boltzmann constant. Boltzmann's most important scientific contributions were in kinetic theory, including motivating the Maxwell–Boltzmann distribution as a description of molecular speeds in a gas. Maxwell–Boltzmann statistics and the Boltzmann distribution remain central in the foundations of classical statistical mechanics.

#ludwig boltzmann#entropy#physics#statistical mechanics#boltzmann constant#kinetic theory#statistics#science#science history#science birthdays#on this day#on this day in science history

1 note

Text

https://www.quantamagazine.org/physicists-trace-the-rise-in-entropy-to-quantum-information-20220526/?mc_cid=bac94ded8a&mc_eid=8d6c3e4439

#quanta magazine#entropy#quantum information#quantum mechanics#information distribution#statistical mechanics#thermodynamics

6 notes

Text

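On structural rheology models of polymers

This is a commentary added to Chapter 2 of Mr. Xu Yuanze's book "Structural Rheology of Polymers" (《高分子结构流变学》).

This passage from Professor Xu at the beginning of Chapter 2:

The kinetic theory of dilute polymer solutions can be traced back to the work of Kuhn, Kramers, Debye, Kirkwood and others in the 1940s. They combined statistical mechanics with fluid mechanics to build a "molecular" theory, which is in fact a theory of molecular structural models. In the 1950s, Rouse, Zimm and others developed these concepts and methods to derive rheological quantities. Since the 1970s, rheological constitutive equations have been obtained, the theoretical framework has been progressively refined, and detailed experimental tests have been carried out; various new approaches have also been explored to address its shortcomings. A fairly complete summary of this area can be found in the monograph by Bird et al., which gives a fairly complete picture of the current state of the art. Some review articles can also be consulted.

Even today, this passage still summarises very well the theoretical understanding that had already taken shape by the time the book was published (1988). These models can be called the "classical models" of the rheological properties of polymer solutions. They are all hydrodynamic models, that is, even though the solute molecule (for a polymer solute, a single chain-segment unit) and the solvent molecules'…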

0 notes