#birthe neumann

Text

CONSTELLATION (2024-)

1x04 The Left Hand of God

1x05 Five Miles Out, the Sound Is Clearest

#constellation#constellation s1#constellation 1x04#constellation 1x05#constellationedit#sara schiller#jo ericsson#magnus taylor#wallie bang#alice ericsson-taylor#noomi rapace#emily cox#james d'arcy#birthe neumann#rosie coleman#davina coleman#gifs#ours#by bee#scifiedit#tvedit#filmtvcentral#constellation apple tv

21 notes

Text

RIGET: EXODUS (2022)

dir. Lars von Trier

73 notes

Photo

Festen

directed by Thomas Vinterberg, 1998

#Festen#The Celebration#Thomas Vinterberg#movie mosaics#Ulrich Thomsen#Henning Moritzen#Paprika Steen#Gbatokai Dakinah#Thomas Bo Larsen#Helle Dolleris#Bjarne Henriksen#Trine Dyrholm#Birthe Neumann#Lars Brygmann

2 notes

Note

This blog rules. I love all the readings and excerpts in your selfhood tag. Do you have any kind of reading list for existential philosophy you could share? I've been really wanting to dive in lately but I'm intimidated about where to start.

hey you rule also.

here's everything i've been surrounding myself with lately—not limited to existential philosophy but i think all migrating through the same waters:

Camus, The Myth of Sisyphus; The Stranger; The Plague

Sartre, Nausea; Being and Nothingness

E.M. Cioran, A Short History of Decay; The Trouble With Being Born

R.D. Laing, The Divided Self

Jung, Modern Man In Search of a Soul; Psychology and the Occult

Søren Kierkegaard, The Sickness unto Death

Ernest Becker, The Denial of Death

Dostoyevsky, Crime and Punishment

Foucault, Discipline and Punish: The Birth of the Prison

Nabokov, Invitation to a Beheading

Clarice Lispector, The Apple in the Dark

Kafka, The Trial

Deleuze & Félix Guattari, Anti-Oedipus: Capitalism and Schizophrenia

Andrew Scull, Desperate Remedies: Psychiatry and the Mysteries of Mental Illness; Madhouses, Mad-Doctors, and Madmen: The Social History of Psychiatry in the Victorian Era

Kay Redfield Jamison, Fires in the Dark: Healing the Unquiet Mind

Esmé Weijun Wang, The Collected Schizophrenias

Erich Neumann, The Origins and History of Consciousness

Bessel van der Kolk, The Body Keeps the Score

David Abram, The Spell of the Sensuous

Lisa Miller, The Awakened Brain: The Psychology of Spirituality

The Tibetan Book of the Dead

74 notes

Text

Random Kirby Headcanon #16

When it comes to surnames and whether or not they exist, all species seem to go about it a little differently.

In the case of Astrals, they don’t have traditional surnames. Instead, they collect titles! For example, Kirby’s full name would be “Kirby of the Stars,” whereas Galacta Knight’s would be “[Whatever his real name is], Galacta Knight, the Aeon Hero.”

Waddle Dees don’t have last names. Frankly, they barely even have first names. In part, I imagine it’s not necessary since they sort of, by default, all consider themselves one big family anyways.

Tevatians (Animal people) don’t have last names, but may be referred to by their species occasionally, especially for clarification. For example, if someone were in a conversation with two Ricks, they might refer to our Rick as “Rick the Hamster” specifically.

Dark Matter do not traditionally have names, but Gooey has taken on an astral-style full name. Currently, he’s Gooey of the Heart.

Fairies’ surnames are based on the planet/kingdom they hail from. So for example, every fairy from Ripple Star has the last name “Ripple.”

Humans, obviously, traditionally have surnames, but seeing as how Adeleine was born in a wet cardboard box all alone and grew up with no family in my interpretation, she didn’t have one for a long time. Eventually, she took on the “Ripple” surname, though, as the fairies effectively took her in.

Halcandrans probably had last names based on their affiliations/what larger groups or sects they were a part of. Magolor, however, only being part Halcandran, and also being born in a wet cardboard box all alone, has no last name.

Insectoids probably have surnames, considering they had a royal family. I can see something French-adjacent. I have no actual idea on what I’d make Taranza and Sectonia’s actual surnames, though.

Neumanns (Susie and the Mage Sisters’ species in my headcanons) have last names. Susie’s, obviously, is Haltmann. I like to think the Mages wanted to take on Hyness’s last name after being adopted by him, but I also don’t think his species does last names, so maybe their surnames are just “Jamba” or something. Flamberge specifically actually has that hyphenated with her birth surname, being the only Mage Sister that was close to their original family.

Avians (Dedede’s species), Noddies (Marx’s species), and Elfilin’s species also do not have last names. However, I can also see Elfilin eventually taking on an Astral-styled surname like Gooey did.

#kirby#sack’s kirbyverse#if i think of something later for elfilin flamberge and the spiders’ last names i’ll reblog this with that as an addon#but as for now i don’t have anything specific picked out for them just ideas for their species as a whole

8 notes

Photo

"Entry No. 8

13.10.17(20?).

Suspect #2 in the disappearance of my brother - Sarah Neumann

A very strange person who recently moved to Raven Brooks. Practically nothing is known about her, except that she flew in from Germany to visit an old friend (according to her). Her place of residence is unknown (she is not listed at any of the hotels in the city). Date of birth: 01.12.1981 (according to her driver's license). A week before the disappearance, she came to Enzo's workshop several times to get her bike fixed. She talked to him about missing people, marriage and widowers. She has a tattoo of a snake on her back (I didn't ask and didn't see all of it, but a piece of the tail was visible from under her top). She wears dark clothes, and also keeps a pistol and knives in the glove compartment. Is she in a gang? On the run? These are just my guesses. She dropped Jess off at home the other day. I had a very serious conversation with my niece and told her not to go with Sarah anymore. I didn't learn anything interesting from their conversations (she loves Mexican cuisine and understands technology).

17.10.17(20?).

We met in the eco-shop. She was very friendly and polite to me, but I'm still on my guard. Sarah bought only some cheap tools for her bike and some tape. She explained her choice by the fact that the bike broke down again, and now there is no one to fix it. "I will fix bike with grit spit and a whole lot of duct tape," she joked. I offered to help her, and she quickly agreed. She's coming to the workshop tomorrow. This is a great opportunity for me to learn more about her. I'm sure she's the key to solving Enzo's disappearance. At least I hope so. God, Marie, you're completely crazy, rushing at the first person you meet. I need to double-check all the recordings from the cameras and recall all the conversations Enzo had with the townspeople. Jordy is also investigating something, but alone. I don't like any of it.

PS: Remind myself to stop communicating with Nicky, he's a bad influence on me with these records and investigations. Paranoia is contagious."

-

Ta-da! I present to you my OC. I had to add a character to the story, otherwise Aaron would have turned out to be too much of a Mary Sue if he did everything alone. I came up with Sarah a long time ago, in 2016; I just didn't know where to fit her in. I hope you will accept her into our family. Chapter 1 is only half finished)

#Hello Neighbor#HELLO GUEST#hello neighbor hide and seek#hello neighbor 2#secret neighbor#hello neighbor fanart#hello neighbor au#Fanart#Perfect Family AU#my beautiful baby#sarah neumann

22 notes

Text

This is -

Aaliyah’s mood board

You can begin again.

for my upcoming fic “Seamless”

bio

Name: Aaliyah Neumann (read: “newman”)

Nicknames: Lia, Liyah

Date of Birth: 30th November, 1995

Age: 24 (in-game!)

Sexuality: straight

Nationality: German

appearance

Height: 162 cm / 5’3”

Eye colour: honey brown

Hair colour: naturally dark blonde

Body Type: slim/almost petite

personality & curiosity

Spoken Languages: German, English

Hobbies: music, raves, photography

Fav singer/band: Yungblud, Linkin Park

Fav colour: beige, soft oranges & browns

Occupation: drummer in an alternative rock band

Star sign: Sagittarius

What she’s like: quiet. picky. sarcastic. might appear as mean & arrogant sometimes. super soft once you get to know her. loyal. doesn’t take any BS. will only show a lot of emotion when she makes/listens to music and/or when she feels that she can really trust a person.

If you haven’t seen Kassam’s mood board, click here ✨

#litg s2#litg#litg kassam#ao3 fanfic#litg fanfic#dj king#kassam x mc#litg kassam x mc#kassam x aaliyah#seamless#character sheet#character design#moodboard#aaliyah’s mood board

24 notes

Text

The Boss giving birth on D-Day is funny in concept but horrifying given the added context in the Peace Walker tapes.

She had just gotten caught failing to assassinate John Neumann, under the false intel that he was a Nazi spy. Not only had she blown her cover on a top secret mission, her failure revealed the government's mistake. She was an embarrassment that they wanted gone, so she was sent into combat in an extremely vulnerable state.

She also probably couldn't say no to the order. It's strongly implied that her brain injury rendered her incapable of refusing orders. She failed the Los Alamos infiltration in the first place due to worrying about the safety of her baby; I doubt she would've agreed to Normandy if she had the option.

just

holy shit

#i mean it's still pretty funny...#the pw tapes are crazy man#the boss#metal gear solid#pregnancy cw#being annoyed abt the misogyny in mgs vs finding this story compelling#vague ''they'' because idk who she was receiving orders from at this point#some us branch or another#it's not clear#analysis

17 notes

Text

Spring Awakening - Zendaya (she/her) as Ilse Neumann, requested by anon

Birthday: September 1, 1996 (age 25)

Birth Place: Oakland, California

Theatre credits: Little Ti Moune (Once on This Island), Joe Thibodeaux (Caroline, or Change). Zendaya also played the role of Rocky Blue in the Disney Channel show Shake it Up, and Anne Wheeler in the musical movie The Greatest Showman.

(Pictured on the right is Carly-Sophia Davies, who played the role in the 2021 London revival)

Credits: Steve Granitz, Jeff Spicer

#spring awakening#once on this island#caroline or change#shake it up#the greatest showman#zendaya#ilse neumann#ti moune#joe thibodeaux#rocky blue#anne wheeler

15 notes

Text

Quantum Foundations: The Galactic Center File

Many astrologers have noticed the prominence of Saturn (dedication, discipline, determination, creations of enduring value etc.) and Jupiter (luck, blessings, inspiration, search for deep meaning and reliable knowledge etc.) in the natal charts of many scientists. The following statements depend on the accuracy of the birth-data: if the publicly available birth-data is correct, or even approximately correct, then the following astrological facts are true (or approximately true). First, note Isaac Newton: Saturn 19 deg Pisces 53 min conjunct Jupiter 14 deg Pisces 08 min, and Werner Heisenberg: Saturn 14 deg Capricorn 43 min conjunct Jupiter 15 deg Capricorn 28 min. This is well known to astrologers, and the significance of these placements is relatively straightforward. The prominence of the Galactic Center, however, is less well known, and that is one of the reasons I’m including the Galactic Center (GC) in ‘AveryCanadianFilm’. The following astrological aspects, for deceased physicists, are from publicly available birth-data.

Newton: Mercury 20 deg Sag 56 min conjunct GC 21 deg Sag 52 min.

Einstein: Sun 23 deg Pisces 30 min square GC 25 deg Sag 10 min.

Heisenberg: ASC 21 deg Gemini 07 min opposes GC 25 deg Sag 29 min.

Pauli: [Venus 20 deg Gemini 18 min conjunct Neptune 24 deg Gemini 55 min]

opposes [Chiron_R 24 deg Sag 39 min conjunct GC 25 deg Sag 28 min].

Max Born: Jupiter_R 27 deg Gemini 20 min opposes [Moon 24 deg Sag 25 min conjunct GC 25 deg Sag 13 min].

von Neumann: Uranus 26 deg Sag 23 min conjunct GC 25 deg Sag 30 min.

Schrödinger: Chiron 29 deg Gemini 56 min opposes GC 25 deg Sag 17 min.

de Broglie: Grand Trine in fire involving Jupiter-Chiron-GC

Jupiter_R 24 deg Aries 56 min trine Chiron 22 deg Leo 50 min trine GC 25 deg Sag 21 min.

David Bohm: Sun 28 deg Sag 01 min conjunct GC 25 deg Sag 42 min.

The following astrological aspects, for physicists still living at the time this was posted, are from publicly available birth-data.

Anthony Leggett: Chiron 25 deg Gemini 49 min opposes GC 26 deg Sag 00 min

Carlo Rovelli: Venus 26 deg Gemini 30 min opposes GC 26 deg Sag 15 min,

[Jupiter 21 deg Leo 52 min conjunct Pluto_R 26 deg Leo 06 min] trine GC.
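
For readers who want to check the arithmetic behind the aspects listed above, here is a minimal sketch. It assumes the usual convention of twelve 30-degree signs counted from Aries, and it only does arithmetic on the positions quoted in this post. The function names (`longitude`, `separation`, `orb`) are made up for illustration, and the sketch makes no claim about which orb counts as "close enough".

```python
# Zodiac signs in order; each spans 30 degrees of ecliptic longitude.
SIGNS = [
    "Aries", "Taurus", "Gemini", "Cancer", "Leo", "Virgo",
    "Libra", "Scorpio", "Sagittarius", "Capricorn", "Aquarius", "Pisces",
]

# Exact angle (in degrees) for each aspect name used in the post.
ASPECTS = {"conjunct": 0.0, "square": 90.0, "trine": 120.0, "opposes": 180.0}


def longitude(sign, degrees, minutes):
    """Convert 'degrees sign minutes' to a longitude in the range 0-360."""
    return SIGNS.index(sign) * 30 + degrees + minutes / 60


def separation(a, b):
    """Shortest angular distance between two longitudes (0-180 degrees)."""
    diff = abs(a - b) % 360
    return min(diff, 360 - diff)


def orb(a, b, aspect):
    """How far (in degrees) two points are from forming the exact aspect."""
    return abs(separation(a, b) - ASPECTS[aspect])


# Positions copied from the list above.
newton = orb(longitude("Sagittarius", 20, 56),
             longitude("Sagittarius", 21, 52), "conjunct")
einstein = orb(longitude("Pisces", 23, 30),
               longitude("Sagittarius", 25, 10), "square")
heisenberg = orb(longitude("Gemini", 21, 7),
                 longitude("Sagittarius", 25, 29), "opposes")

print(round(newton, 2))      # about 0.93 degrees (0 deg 56 min)
print(round(einstein, 2))    # about 1.67 degrees (1 deg 40 min)
print(round(heisenberg, 2))  # about 4.37 degrees (4 deg 22 min)
```

The orbs vary quite a bit across the list, from under a degree for Newton to more than four degrees for Heisenberg.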

#Hubert Hugh Burke#AveryCanadianFilm#huberthughburke#Astrology#Werner Heisenberg#Wolfgang Pauli#Isaac Newton#John von Neumann#Max Born#Anthony Leggett#Carlo Rovelli#Albert Einstein#Quantum Foundations#Galactic Center#Script#Louis de Broglie#David Bohm#Erwin Schrödinger

7 notes

Text

In a future not too far from our own, the legacy of Ludwig Boltzmann thrived within the intricate mesh of science and technology. Amidst the shimmering skyline of Neo-Vienna, there existed a gallery that curated the most extraordinary of exhibits, and its pièce de résistance was the living sculpture known as the Entropy Child.

Crafted by the enigmatic artist Aria Neumann, the Entropy Child was a living testament to the Second Law of Thermodynamics, which Boltzmann had so passionately contributed to. The sculpture was a hyper-realistic android, with skin like satin and a cascade of wild, watercolor hair that seemed to flow with the very essence of chaos and order.

Every strand of the Entropy Child's hair was a microcosmic representation of Boltzmann's entropy, each hue shifting unpredictably between states of equilibrium and disequilibrium. The sculpture’s eyes, a piercing blue, mirrored the skies above Neo-Vienna, while the rest of the body was adorned with liquid streams that resembled molten metal, each drop signifying the irreversible flow of time.

The story went that Aria had infused the android with a consciousness based on Boltzmann's brain patterns, which had been meticulously preserved and digitized by historians. The Entropy Child thus carried within it the enigmatic physicist's passion for understanding the nature of reality, processing the world around it with a curiosity that echoed that of its progenitor.

Visitors would flock to see the Child, to witness the tears of paint that wept from its eyes, each one a small universe birthing and dying in moments. They said that if you looked closely, within the chaotic patterns of the tears, you could see the birth and death of stars, the creation of galaxies, and the whispers of cosmic winds.

One day, a peculiar event occurred as the gallery hosted the annual Boltzmann Symposium. As scholars discussed the abstract nature of time, entropy, and the universe, the Entropy Child began to sing—a melody so haunting that the entire city stood still. The voice seemed to be a convergence of science and art, an ode to the unending dance of atoms and the stars.

And as the Child sang, the streams of paint and metal began to solidify and reshape, forming complex mathematical equations in mid-air—Boltzmann's equations, reimagined in a three-dimensional space, alive and evolving.

The phenomenon was later named "The Boltzmann Flux," and it challenged the very foundations of android laws and art. For in the presence of the Child, machines began to dream of entropy, and humans began to dream of order within the chaos. The Entropy Child became not just a bridge between the past and the future, but a messenger of the eternal, ever-present moment where art and science merged, where Boltzmann's legacy found a new expression beyond the confines of human understanding.

And thus, in the heart of Neo-Vienna, beneath the gaze of Ludwig Boltzmann’s brilliance, the Entropy Child continued to dance to the silent music of the universe, an eternal embodiment of the beauty and mystery that lies within the equations that govern our existence.

0 notes

Text

Jay Dawani is Co-founder & CEO of Lemurian Labs – Interview Series

New Post has been published on https://thedigitalinsider.com/jay-dawani-is-co-founder-ceo-of-lemurian-labs-interview-series/

Jay Dawani is Co-founder & CEO of Lemurian Labs. Lemurian Labs is on a mission to deliver affordable, accessible, and efficient AI computers, driven by the belief that AI should not be a luxury but a tool accessible to everyone. The founding team at Lemurian Labs combines expertise in AI, compilers, numerical algorithms, and computer architecture, united by a single purpose: to reimagine accelerated computing.

Can you walk us through your background and what got you into AI to begin with?

Absolutely. I’d been programming since I was 12 and building my own games and such, but I actually got into AI when I was 15 because of a friend of my father’s who was into computers. He fed my curiosity and gave me books to read such as Von Neumann’s ‘The Computer and the Brain’, Minsky’s ‘Perceptrons’, and Russell and Norvig’s ‘Artificial Intelligence: A Modern Approach’. These books influenced my thinking a lot, and it felt almost obvious then that AI was going to be transformative and that I just had to be a part of this field.

When it came time for university I really wanted to study AI, but I didn’t find any universities offering that, so I decided to major in applied mathematics instead. A little while after I got to university I heard about AlexNet’s results on ImageNet, which was really exciting. At that time I had a now-or-never moment in my head and went full bore into reading every paper and book I could get my hands on related to neural networks, and sought out all the leaders in the field to learn from them, because how often do you get to be there at the birth of a new industry and learn from its pioneers?

Very quickly I realized I don’t enjoy research, but I do enjoy solving problems and building AI enabled products. That led me to working on autonomous cars and robots, AI for material discovery, generative models for multi-physics simulations, AI based simulators for training professional racecar drivers and helping with car setups, space robots, algorithmic trading, and much more.

Now, having done all that, I’m trying to rein in the cost of AI training and deployments because that will be the greatest hurdle we face on our path to enabling a world where every person and company can have access to and benefit from AI in the most economical way possible.

Many companies working in accelerated computing have founders that have built careers in semiconductors and infrastructure. How do you think your past experience in AI and mathematics impacts your ability to understand the market and compete effectively?

I actually think not coming from the industry gives me an outsider’s advantage. I have found quite often that not knowing industry norms or conventional wisdom gives one the freedom to explore more freely and go deeper than most others would, because you’re unencumbered by biases.

I have the freedom to ask ‘dumber’ questions and test assumptions in a way that most others wouldn’t because a lot of things are accepted truths. In the past two years I’ve had several conversations with folks within the industry where they are very dogmatic about something but they can’t tell me the provenance of the idea, which I find very puzzling. I like to understand why certain choices were made, and what assumptions or conditions were there at that time and if they still hold.

Coming from an AI background, I tend to take a software view: looking at where the workloads are today and all the possible ways they may change over time, and modeling the entire ML pipeline for training and inference to understand the bottlenecks, which tells me where the opportunities to deliver value are. And because I come from a mathematical background, I like to model things to get as close to truth as I can, and have that guide me. For example, we have built models to calculate system performance for total cost of ownership, so we can measure the benefit we can bring to customers with software and/or hardware and better understand our constraints and the different knobs available to us, and dozens of other models for various things. We are very data driven, and we use the insights from these models to guide our efforts and tradeoffs.

It seems like progress in AI has primarily come from scaling, which requires exponentially more compute and energy. It seems like we’re in an arms race with every company trying to build the biggest model, and there appears to be no end in sight. Do you think there is a way out of this?

There are always ways. Scaling has proven extremely useful, and I don’t think we’ve seen the end yet. We will very soon see models being trained with a cost of at least a billion dollars. If you want to be a leader in generative AI and create bleeding edge foundation models you’ll need to be spending at least a few billion a year on compute. Now, there are natural limits to scaling, such as being able to construct a large enough dataset for a model of that size, getting access to people with the right know-how, and getting access to enough compute.

Continued scaling of model size is inevitable, but we also can’t turn the entire earth’s surface into a planet sized supercomputer to train and serve LLMs, for obvious reasons. To get this under control we have several knobs we can play with: better datasets, new model architectures, new training methods, better compilers, algorithmic improvements and exploitations, better computer architectures, and so on. If we do all that, there are roughly three orders of magnitude of improvement to be found. That’s the best way out.

You are a believer in first principles thinking. How does this mold your mindset for how you are running Lemurian Labs?

We definitely employ a lot of first principles thinking at Lemurian. I have always found conventional wisdom misleading because that knowledge was formed at a certain point in time when certain assumptions held, but things always change and you need to retest assumptions often, especially when living in such a fast paced world.

I often find myself asking questions like “this seems like a really good idea, but why might this not work”, or “what needs to be true in order for this to work”, or “what do we know that are absolute truths and what are the assumptions we’re making and why?”, or “why do we believe this particular approach is the best way to solve this problem”. The goal is to invalidate and kill off ideas as quickly and cheaply as possible. We want to try and maximize the number of things we’re trying out at any given point in time. It’s about being obsessed with the problem that needs to be solved, and not being overly opinionated about what technology is best. Too many folks focus too heavily on the technology; they end up misunderstanding customers’ problems and missing the transitions happening in the industry that could invalidate their approach, leaving them unable to adapt to the new state of the world.

But first principles thinking isn’t all that useful by itself. We tend to pair it with backcasting, which basically means imagining an ideal or desired future outcome and working backwards to identify the different steps or actions needed to realize it. This ensures we converge on a meaningful solution that is not only innovative but also grounded in reality. It doesn’t make sense to spend time coming up with the perfect solution only to realize it’s not feasible to build because of a variety of real world constraints such as resources, time, regulation, or building a seemingly perfect solution but later on finding out you’ve made it too hard for customers to adopt.

Every now and then we find ourselves in a situation where we need to make a decision but have no data, and in this scenario we employ minimum testable hypotheses which give us a signal as to whether or not something makes sense to pursue with the least amount of energy expenditure.

All this combined gives us agility and rapid iteration cycles to de-risk items quickly. It has helped us adjust strategies with high confidence and make a lot of progress on very hard problems in a very short amount of time.

Initially, you were focused on edge AI. What caused you to refocus and pivot to cloud computing?

We started with edge AI because at that time I was very focused on trying to solve a very particular problem that I had faced in trying to usher in a world of general purpose autonomous robotics. Autonomous robotics holds the promise of being the biggest platform shift in our collective history, and it seemed like we had everything needed to build a foundation model for robotics but we were missing the ideal inference chip with the right balance of throughput, latency, energy efficiency, and programmability to run said foundation model on.

I wasn’t thinking about the datacenter at this time because there were more than enough companies focusing there and I expected they would figure it out. We designed a really powerful architecture for this application space and were getting ready to tape it out, and then it became abundantly clear that the world had changed and the problem truly was in the datacenter. The rate at which LLMs were scaling and consuming compute far outstripped the pace of progress in computing, and when you factor in adoption it starts to paint a worrying picture.

It felt like this is where we should be focusing our efforts, to bring down the energy cost of AI in datacenters as much as possible without imposing restrictions on where and how AI should evolve. And so, we got to work on solving this problem.

Can you share the genesis story of Co-Founding Lemurian Labs?

The story starts in early 2018. I was working on training a foundation model for general purpose autonomy along with a model for generative multiphysics simulation to train the agent in and fine-tune it for different applications, and some other things to help scale into multi-agent environments. But very quickly I exhausted the amount of compute I had, and I estimated needing more than 20,000 V100 GPUs. I tried to raise enough to get access to the compute but the market wasn’t ready for that kind of scale just yet. It did however get me thinking about the deployment side of things and I sat down to calculate how much performance I would need for serving this model in the target environments and I realized there was no chip in existence that could get me there.

A couple of years later, in 2020, I met up with Vassil – my eventual cofounder – to catch up and I shared the challenges I went through in building a foundation model for autonomy, and he suggested building an inference chip that could run the foundation model. He shared that he had been thinking a lot about number formats and how better representations would help not only in making neural networks retain accuracy at lower bit-widths but also in creating more powerful architectures.

It was an intriguing idea but was way out of my wheelhouse. But it wouldn’t leave me, which drove me to spending months and months learning the intricacies of computer architecture, instruction sets, runtimes, compilers, and programming models. Eventually, building a semiconductor company started to make sense and I had formed a thesis around what the problem was and how to go about it. And then, towards the end of the year, we started Lemurian.

You’ve spoken previously about the need to tackle software first when building hardware. Could you elaborate on your views of why the hardware problem is first and foremost a software problem?

What a lot of people don’t realize is that the software side of semiconductors is much harder than the hardware itself. Building a useful computer architecture for customers to use and get benefit from is a full stack problem, and if you don’t have that understanding and preparedness going in, you’ll end up with a beautiful looking architecture that is very performant and efficient but totally unusable by developers, and developer usability is what actually matters.

There are other benefits to taking a software first approach as well, of course, such as faster time to market. This is crucial in today’s fast moving world where being too bullish on an architecture or feature could mean you miss the market entirely.

Not taking a software first view generally results in not having derisked the important things required for product adoption in the market, not being able to respond to changes in the market for example when workloads evolve in an unexpected way, and having underutilized hardware. All not great things. That’s a big reason why we care a lot about being software centric and why our view is that you can’t be a semiconductor company without really being a software company.

Can you discuss your immediate software stack goals?

When we were designing our architecture and thinking about the forward looking roadmap and where the opportunities were to bring more performance and energy efficiency, it started becoming very clear that we were going to see a lot more heterogeneity which was going to create a lot of issues on software. And we don’t just need to be able to productively program heterogeneous architectures, we have to deal with them at datacenter scale, which is a challenge the likes of which we haven’t encountered before.

This got us concerned because the last time we had to go through a major transition was when the industry moved from single-core to multi-core architectures, and at that time it took 10 years to get software working and people using it. We can’t afford to wait 10 years to figure out software for heterogeneity at scale; it has to be sorted out now. And so, we got to work on understanding the problem and what needs to exist in order for this software stack to exist.

We are currently engaging with a lot of the leading semiconductor companies and hyperscalers/cloud service providers and will be releasing our software stack in the next 12 months. It is a unified programming model with a compiler and runtime capable of targeting any kind of architecture, and orchestrating work across clusters composed of different kinds of hardware, and is capable of scaling from a single node to a thousand node cluster for the highest possible performance.

Thank you for the great interview. Readers who wish to learn more should visit Lemurian Labs.

#000#accelerated computing#ai#ai training#Algorithms#amp#applications#approach#architecture#autonomous cars#background#billion#bleeding#book#Books#Brain#Building#Careers#Cars#CEO#challenge#change#Cloud#cloud computing#cloud service#cluster#clusters#Collective#Companies#computer

0 notes

Text

Unveiling the Evolution: A Journey Through the History of the CPU

In the ever-expanding realm of technology, few inventions have had as profound an impact as the Central Processing Unit, or CPU. This tiny yet formidable component serves as the brain of modern computing devices, orchestrating complex operations with remarkable speed and precision. But how did the CPU come to be? Join us on a journey through the captivating history of this essential innovation.

The Genesis: Birth of the CPU

Our story begins in the mid-20th century, amidst the dawn of the digital age. Early computers were behemoth machines, occupying entire rooms and relying on cumbersome technologies like vacuum tubes and punch cards. However, visionary pioneers such as John von Neumann envisioned a future where computing power could be harnessed on a smaller scale.

In 1948, the world witnessed a pivotal moment with the creation of the Manchester Baby, the first electronic stored-program computer. This groundbreaking invention laid the groundwork for the development of the CPU by holding program instructions and data in the same memory, so that a machine could be given a new task by loading a new program rather than by rewiring it.

The Transistor Revolution

As the 1950s progressed, a paradigm shift occurred with the advent of transistors. These tiny semiconductor devices replaced bulky vacuum tubes, offering greater reliability, efficiency, and scalability. Engineers and scientists began exploring ways to harness the power of transistors to create smaller and more powerful computing devices.

In 1971, the world witnessed a seismic leap forward with the introduction of the Intel 4004, the first commercially available microprocessor. Developed by Intel Corporation, the 4004 integrated all essential CPU components onto a single chip, marking a pivotal moment in the history of computing. This breakthrough paved the way for the era of personal computing, empowering individuals and businesses with affordable, accessible computing power.

The Era of Innovation

Throughout the 1970s and 1980s, fierce competition unfolded among semiconductor companies to develop faster and more advanced microprocessors. Intel continued to lead the charge with its groundbreaking releases, including the 8086, whose architecture (by way of the closely related 8088 chip) became the cornerstone of the IBM PC and its clones, solidifying Intel's dominance in the market.

Meanwhile, other companies such as Motorola and IBM made significant contributions to CPU technology, pushing the boundaries of performance and efficiency. The relentless pursuit of innovation fueled a continuous cycle of advancement, ushering in an era of unprecedented computing power and capability.

The Modern Age

Fast forward to the present day, and the CPU remains at the forefront of technological progress. With each passing year, CPUs continue to evolve, becoming smaller, faster, and more efficient. From multi-core processors to advanced architectures, today's CPUs are marvels of engineering, capable of powering everything from smartphones to supercomputers.

As we reflect on the remarkable history of the CPU, we are reminded of the ingenuity, perseverance, and vision of those who paved the way for modern computing. From the humble beginnings of the Manchester Baby to the cutting-edge processors of today, the journey of the CPU is a testament to human innovation and the enduring quest for progress.

In conclusion, the history of the CPU is a testament to the power of human ingenuity and the relentless pursuit of innovation. From its humble beginnings to its pivotal role in shaping the digital age, the CPU stands as a symbol of progress and possibility. As we look to the future, one thing is certain: the evolution of the CPU is far from over, and the best is yet to come.

0 notes

Text

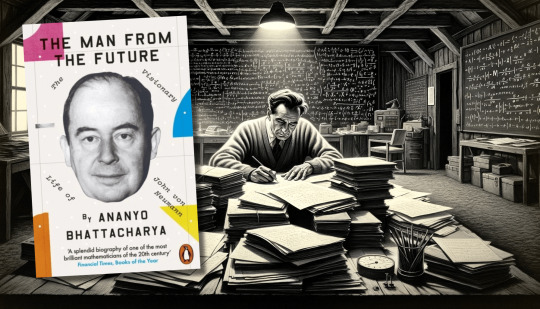

Book: The Man from the Future: The Visionary Life of John von Neumann

John von Neumann, a name synonymous with the birth of modern computing, artificial intelligence, and game theory, has left a mark on the fabric of technology and science. The biography, “The Man from the Future,” penned by Ananyo Bhattacharya, offers a glance into the life of one of the most brilliant minds of the 20th century.

The landscape of mathematical thought in the early 20th century: The…

0 notes

Text

AI Transformation

This is the age of AI, and it is no ordinary age. Imagine AI as a magician pulling strings and making everything in our lives magical. The changes brought by AI range from revolutionizing healthcare systems to transforming entire economies. This blog looks at AI’s compelling benefits, and the questions it raises, across the top five sectors experiencing stunning changes.

Origins of AI

The history of AI reaches back further than contemporary civilization: mythology and folklore abound with stories of mechanical figures and even intelligent machines. The quest to develop true AI, though, started in the 20th century.

Early Foundations (1950s – 1970s)

It all began with the coinage of the term “artificial intelligence” by John McCarthy in 1956, marking the formal start of AI as an academic discipline. In this period, pioneers like Alan Turing, John von Neumann, and Herbert A. Simon laid the theoretical foundations of AI by introducing concepts such as machine learning and the symbolic approach.

Among the major accomplishments of early AI is the Logic Theorist, a program developed by Newell and Simon in 1955 that succeeded in proving mathematical theorems. The Dartmouth workshop of 1956 was also remarkable: it marked the birth of AI as a research area, with participants seeking to create an intelligent machine.

The AI Winter (1970s – 1980s)

The optimism of the 1950s and 1960s gave way in the following decades to a period commonly known as the “AI winter.” It was brought about by the failure to meet early expectations, in addition to an obvious shortage in … Progress in AI systems began to slow as it became clear that these early systems were not going to match the high expectations set for them.

The AI Renaissance (1990s – Present)

AI revived in the 1990s and continues to thrive today. Research has been rejuvenated by advances in computer hardware and by algorithmic discoveries. More recently, machine learning, and deep learning in particular, has become a leading paradigm, helping to solve complicated tasks and mimic some aspects of human thinking.

1 note

·

View note

Text

On this day in Wikipedia: Friday, 5th January

Welcome, laipni lūdzam, merħba, ласкаво просимо (laskavo prosymo) 🤗

What does @Wikipedia say about 5th January through the years 🏛️📜🗓️?

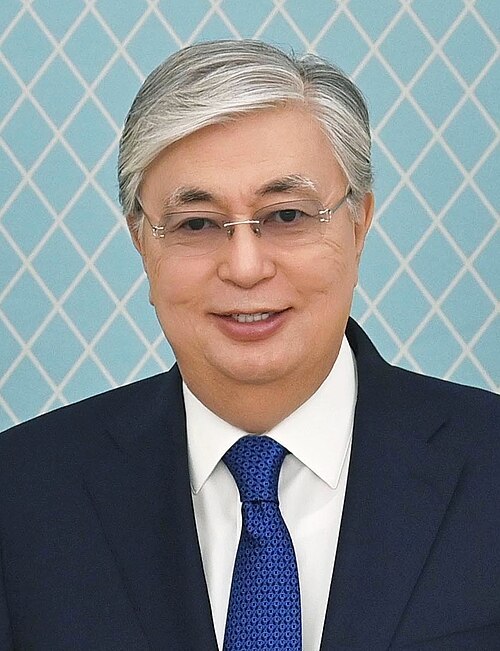

5th January 2022 🗓️ : Event - Kassym-Jomart Tokayev

Kazakh President Kassym-Jomart Tokayev dismisses Prime Minister Asqar Mamin and declares state of emergency over the 2022 Kazakh unrest.

"Kassym-Jomart Kemeluly Tokayev (Kazakh: Қасым-Жомарт Кемелұлы Тоқаев, romanized: Qasym-Jomart Kemelūly Toqaev [qɑˈsəm ʑoˈmɑrt kʲeˌmʲelo̙ɫɯ toˈqɑjef]; born 17 May 1953) is a Kazakh politician and diplomat who has served as the President of Kazakhstan since 2019. Between 20 March and 12 June 2019, he..."

Image licensed under CC BY 4.0? by Unknown author

5th January 2019 🗓️ : Death - Dragoslav Šekularac

Dragoslav Šekularac, Serbian footballer and manager (b. 1937)

"Dragoslav Šekularac (Serbian Cyrillic: Драгослав Шекуларац, pronounced [drǎgoslaʋ ʃekulârats]; 8 November 1937 – 5 January 2019) was a Serbian professional footballer and coach. Nicknamed Šeki, he was quick and crafty with the ball, displaying creative skills which turned many heads. Possessing..."

Image licensed under CC BY 2.0? by Vujcic

5th January 2014 🗓️ : Event - GSAT-14

A launch of the communication satellite GSAT-14 aboard the GSLV MK.II D5 marks the first successful flight of an Indian cryogenic engine.

"GSAT-14 is an Indian communications satellite launched in January 2014. It replaced the GSAT-3 satellite, which was launched in 2004. GSAT-14 was launched by a Geosynchronous Satellite Launch Vehicle Mk.II, which incorporated an Indian-built cryogenic engine on the third stage...."

5th January 1974 🗓️ : Birth - Jessica Chaffin

Jessica Chaffin, American actress, comedian, and writer

"Jessica Chaffin is an American actress, comedian, and writer best known as part of the comedy duo Ronna and Beverly with Jamie Denbo. She is also known for her recurring roles as Coco Wexler on Nickelodeon's Zoey 101, Marie Faldonado in the CBS sitcom Man with a Plan and appearing in the films Spy..."

5th January 1923 🗓️ : Birth - Sam Phillips

Sam Phillips, American radio host and producer, founded Sun Records (d. 2003)

"Samuel Cornelius Phillips (January 5, 1923 – July 30, 2003) was an American record producer. He was the founder of Sun Records and Sun Studio in Memphis, Tennessee, where he produced recordings by Elvis Presley, Roy Orbison, Jerry Lee Lewis, Carl Perkins, Johnny Cash, and Howlin' Wolf. Phillips..."

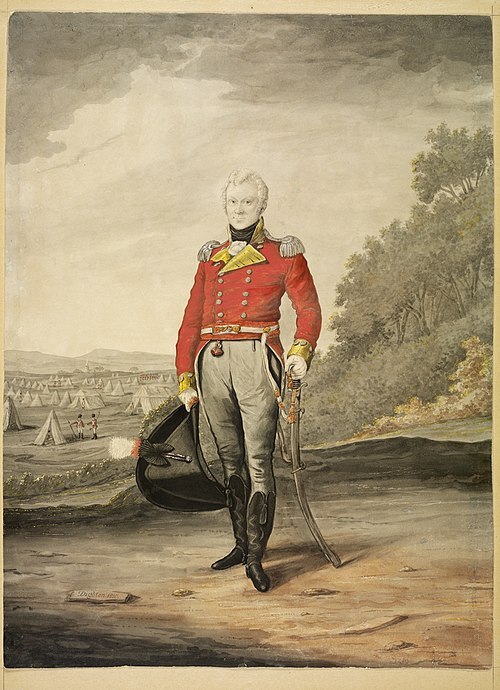

5th January 1823 🗓️ : Death - George Johnston (British Marines officer)

George Johnston, Scottish-Australian colonel and politician, Lieutenant Governor of New South Wales (b. 1764)

"Lieutenant-Colonel George Johnston (19 March 1764 – 5 January 1823) was a British military officer who served as Lieutenant-Governor of New South Wales, Australia after leading the rebellion later known as the Rum Rebellion. After serving as a young marine officer in the American Revolutionary War,..."

Image by Robert Dighton

5th January 🗓️ : Holiday - Christian Feast day: John Neumann (Catholic Church)

"John Nepomucene Neumann (German: Johann Nepomuk Neumann, Czech: Jan Nepomucký Neumann; March 28, 1811 – January 5, 1860) was a Catholic immigrant from Bohemia. He came to the United States in 1836, where he was ordained, joined the Redemptorist order, and became the fourth Bishop of Philadelphia in..."

Image by Unknown author

0 notes