#chatbots 2023

Text

Chatbots are soon to be a billion-dollar industry and are currently trending on business websites everywhere. With the integration of AI in particular, they have become more efficient and more in demand. Chatbots are a lucrative and highly efficient investment for you.

If you own a WordPress website, click the link for your own benefit.

If you don't have a website yet, take a look anyway, for future reference.

0 notes

Link

In effect, the Internet Archive is fighting to prevent the devolution of ebooks into Netflix-like, un-ownable licensed products. An “authorized” licensed book that can’t be owned outright isn’t fundamentally a book at all; books that can only be licensed are impermanent objects that can disappear from the virtual shelves of libraries for any number of reasons.

The stakes in this lawsuit have become clearer in the years since it was filed, as attacks against the freedom of individuals to read, write, teach, and learn have escalated—shading, not infrequently now, into threats of violence: Florida Governor Ron DeSantis taking aim at academic freedom on multiple fronts; literal book bannings and library closings; open aggression against school board members and librarians. Do we want to live in a world where books can disappear with one click of DeSantis’s mouse?

Jennie Rose Halperin, the director of Library Futures, a digital library policy and advocacy organization, told me: “If libraries do not maintain the right to purchase and lend materials digitally as well as physically on terms that are equitable and fair to the public, we risk further exacerbating divides in our democracy and society, as well as the continued privatization of information access. Just because a book is digital does not make it licensed software—a book is a book, in whatever form it takes.”

Libraries, it’s clear, need their traditional statutory protections now more than ever. The right of first sale, which allows libraries to own and loan the books in their own collections, in particular, must be preserved for digital books as well as print ones.

[...]

The future of digital culture must not be left in the hands of commercial interests, because corporations don’t protect or develop culture: They sell it. Which is fine, and healthy, so long as businesses stay in their lane—but they don’t. Again and again, corporate overreach like the lawsuit against the Internet Archive has shown that where there is more money to be made, business will all too happily interfere with schools, universities, and libraries—no matter the cost to the quality or utility or posterity of education, or art, or literature.

172 notes

Text

Sorry this took ten million years @hexdoodlez, here :D

#damien time >:D#tbh still loving all the glitchy AI OCs that came out of my chatbot keep this shit UP#glitchy red#pokepasta#creepypasta#pokemon creepypasta#friday night funkin#fnf#fnf hypno lullaby#friday night funkin hypnos lullaby#2023 art

60 notes

Photo

Hello everyone, so I have recently been discovering the wonder that is the character.ai website, where you can chat with a super intelligent chatbot that is very good at imitating your favourite characters. 😊

There is a chatbot for Renfield there and an amazing chatbot for Teddy Lobo (seriously, I’ve spent like an hour discussing our favourite horror movies with him and it was awesome), but I have noticed that no one has made a chatbot for Renfield Dracula yet, so I decided to make one:

https://c.ai/c/2uZ_iZ_RSU_LbiZvWy7zUfZZrCaeJj_S-V41VCHm8D8

Here it is, try it out. I’m trying to work with him to bring him as close to Cageula as I can, so if you have any tips for how I can improve this, I’ll be very grateful for them. Otherwise, just let your imagination work and enjoy. 💕

#renfield#renfield movie#renfield 2023#renfield chatbot#character.ai#dracula#dracula nicolas cage#dracula x reader (if you want to)

43 notes

Text

apparently people are mad at grok for being "woke". i find that funny as fucking hell.

but actually, when you think about it, how does an AI become woke? how is an AI woke even? is it a sentient being? doesn't it just pick up on societal trends or was it programmed with a list of core values?

maybe it's all part of the plan, which would be too genius of X/Twitter to actually do. "HEY Y'ALL -- Come become X Premium members so we can work to correct Grok altogether, as a community of racist, homophobic, transphobic shitbags!"

I think people would sign up then.

3 notes

Text

I was playing around with character.ai and I was talking to TD characters and Sierra ended up giving me a whole therapy session

#This was not on my 2023 bingo card#total drama#td sierra#character ai#ai chatbot#why did I tell this bot my whole life story?

15 notes

Text

ChatGPT Meets Its Match: The Rise of Anthropic Claude Language Model

New Post has been published on https://thedigitalinsider.com/chatgpt-meets-its-match-the-rise-of-anthropic-claude-language-model/

Over the past year, generative AI has exploded in popularity, thanks largely to OpenAI’s release of ChatGPT in November 2022. ChatGPT is an impressively capable conversational AI system that can understand natural language prompts and generate thoughtful, human-like responses on a wide range of topics.

However, ChatGPT is not without competition. One of the most promising new contenders aiming to surpass ChatGPT is Claude, created by AI research company Anthropic. Claude was released for limited testing in December 2022, just weeks after ChatGPT. Although Claude has not yet seen as widespread adoption as ChatGPT, it demonstrates some key advantages that may make it the biggest threat to ChatGPT’s dominance in the generative AI space.

Background on Anthropic

Before diving into Claude, it is helpful to understand Anthropic, the company behind this AI system. Founded in 2021 by former OpenAI researchers Dario Amodei and Daniela Amodei, Anthropic is a startup focused on developing safe artificial general intelligence (AGI).

The company takes a research-driven approach with a mission to create AI that is harmless, honest, and helpful. Anthropic leverages constitutional AI techniques, which involve setting clear constraints on an AI system’s objectives and capabilities during development. This contrasts with OpenAI’s preference for scaling up systems rapidly and dealing with safety issues reactively.

Anthropic raised $300 million in funding in 2022. Backers include high-profile tech leaders like Dustin Moskovitz, co-founder of Facebook and Asana. With this financial runway and a team of leading AI safety researchers, Anthropic is well-positioned to compete directly with large organizations like OpenAI.

Overview of Claude

Claude, powered by the Claude 2 and Claude 2.1 models, is an AI chatbot designed to collaborate, write, and answer questions, much like ChatGPT and Google Bard.

Claude stands out with its advanced technical features. While mirroring the transformer architecture common in other models, it’s the training process where Claude diverges, employing methodologies that prioritize ethical guidelines and contextual understanding. This approach has resulted in Claude performing impressively on standardized tests, even surpassing many AI models.

Claude shows an impressive ability to understand context, maintain consistent personalities, and admit mistakes. In many cases, its responses are articulate, nuanced, and human-like. Anthropic credits constitutional AI approaches for allowing Claude to conduct conversations safely, without harmful or unethical content.

Some key capabilities demonstrated in initial Claude tests include:

Conversational intelligence – Claude listens to user prompts and asks clarifying questions. It adjusts responses based on the evolving context.

Reasoning – Claude can apply logic to answer questions thoughtfully without reciting memorized information.

Creativity – Claude can generate novel content like poems, stories, and intellectual perspectives when prompted.

Harm avoidance – Claude abstains from harmful, unethical, dangerous, or illegal content, in line with its constitutional AI design.

Correction of mistakes – If Claude realizes it has made a factual error, it will retract the mistake graciously when users point it out.

Claude 2.1

In November 2023, Anthropic released an upgraded version called Claude 2.1. One major feature is the expansion of its context window to 200,000 tokens, enabling approximately 150,000 words or over 500 pages of text.

This massive contextual capacity allows Claude 2.1 to handle much larger bodies of data. Users can provide intricate codebases, detailed financial reports, or extensive literary works as prompts. Claude can then summarize long texts coherently, conduct thorough Q&A based on the documents, and extrapolate trends from massive datasets. This huge contextual understanding is a significant advancement, empowering more sophisticated reasoning and document comprehension compared to previous versions.
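The 200,000-token figure above can be sanity-checked with some quick arithmetic. The sketch below is only a rough estimate: the words-per-token and words-per-page ratios are assumed rules of thumb for English text, not figures published by Anthropic, and `estimate_capacity` is a hypothetical helper name.

```python
# Rough conversion from a token budget to words and pages.
# Both ratios are illustrative assumptions, not Anthropic-published constants.
WORDS_PER_TOKEN = 0.75  # assumed average for English prose
WORDS_PER_PAGE = 300    # assumed words on a typical page


def estimate_capacity(context_tokens: int) -> tuple[int, int]:
    """Return (approx_words, approx_pages) for a given token budget."""
    words = round(context_tokens * WORDS_PER_TOKEN)
    pages = words // WORDS_PER_PAGE
    return words, pages


words, pages = estimate_capacity(200_000)
print(f"{words:,} words, about {pages} pages")
```

Under these assumptions the estimate lands on 150,000 words and 500 pages, in line with the numbers quoted for Claude 2.1. Real documents will vary with language, formatting, and vocabulary.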

Enhanced Honesty and Accuracy

Claude 2.1: Significantly more likely to demur

Significant Reduction in Model Hallucinations

A key improvement in Claude 2.1 is its enhanced honesty, demonstrated by a remarkable 50% reduction in the rates of false statements compared to the previous model, Claude 2.0. This enhancement ensures that Claude 2.1 provides more reliable and accurate information, essential for enterprises looking to integrate AI into their critical operations.

Improved Comprehension and Summarization

Claude 2.1 shows significant advancements in understanding and summarizing complex, long-form documents. These improvements are crucial for tasks that demand high accuracy, such as analyzing legal documents, financial reports, and technical specifications. The model has shown a 30% reduction in incorrect answers and a significantly lower rate of misinterpreting documents, affirming its reliability in critical thinking and analysis.

Access and Pricing

Claude 2.1 is now accessible via Anthropic’s API and is powering the chat interface at claude.ai for both free and Pro users. The use of the 200K token context window, a feature particularly beneficial for handling large-scale data, is reserved for Pro users. This tiered access ensures that different user groups can leverage Claude 2.1’s capabilities according to their specific needs.

With the recent introduction of Claude 2.1, Anthropic has updated its pricing model to enhance cost efficiency across different user segments. The new pricing structure is designed to cater to various use cases, from low latency, high throughput scenarios to tasks requiring complex reasoning and significant reduction in model hallucination rates.

AI Safety and Ethical Considerations

At the heart of Claude’s development is a rigorous focus on AI safety and ethics. Anthropic employs a ‘Constitutional AI’ model, incorporating principles from the UN’s Universal Declaration of Human Rights and Apple’s terms of service, alongside unique rules to discourage biased or unethical responses. This innovative approach is complemented by extensive ‘red teaming’ to identify and mitigate potential safety issues.

Claude’s integration into platforms like Notion AI, Quora’s Poe, and DuckDuckGo’s DuckAssist demonstrates its versatility and market appeal. Available through an open beta in the U.S. and U.K., with plans for global expansion, Claude is becoming increasingly accessible to a wider audience.

Advantages of Claude over ChatGPT

While ChatGPT launched first and gained immense popularity right away, Claude demonstrates some key advantages:

More accurate information

One common complaint about ChatGPT is that it sometimes generates plausible-sounding but incorrect or nonsensical information. This is because it is trained primarily to sound human-like, not to be factually correct. In contrast, Claude places a high priority on truthfulness. Although not perfect, it avoids logically contradicting itself or generating blatantly false content.

Increased safety

Given no constraints, large language models like ChatGPT will naturally produce harmful, biased, or unethical content in certain cases. However, Claude’s constitutional AI architecture compels it to abstain from dangerous responses. This protects users and limits societal harm from Claude’s widespread use.

Can admit ignorance

While ChatGPT aims to always provide a response to user prompts, Claude will politely decline to answer questions when it does not have sufficient knowledge. This honesty helps build user trust and prevent propagation of misinformation.

Ongoing feedback and corrections

The Claude team takes user feedback seriously to continually refine Claude’s performance. When Claude makes a mistake, users can point this out so it recalibrates its responses. This training loop of feedback and correction enables rapid improvement.

Focus on coherence

ChatGPT sometimes exhibits logical inconsistencies or contradictions, especially when users attempt to trick it. Claude’s responses display greater coherence, as it tracks context and fine-tunes generations to align with previous statements.

Investment and Future Outlook

Recent investments in Anthropic, including significant funding rounds led by Menlo Ventures and contributions from major players like Google and Amazon, underscore the industry’s confidence in Claude’s potential. These investments are expected to propel Claude’s development further, solidifying its position as a major contender in the AI market.

Conclusion

Anthropic’s Claude is more than just another AI model; it’s a symbol of a new direction in AI development. With its emphasis on safety, ethics, and user experience, Claude stands as a significant competitor to OpenAI’s ChatGPT, heralding a new era in AI where safety and ethics are not just afterthoughts but integral to the design and functionality of AI systems.

#000#2022#2023#AGI#ai#AI Chatbot#ai model#Amazon#amp#Analysis#anthropic#API#apple#approach#architecture#artificial#Artificial General Intelligence#Artificial Intelligence#Asana#background#bard#chatbot#chatGPT#claude#claude 2.1#collaborate#comprehension#conversational ai#creativity#data

3 notes

Text

Heath Vasquez

Kinktober Day #23 ~ Primal

You’re one of the rare humans that learns about the existence of the supernatural. Your partner Heath is a werewolf and brought you into the big secret. There’s one thing he’s always wanted but never had the chance to do and you’ve finally agreed to the form of play his werewolf nature desperately craves.

You’re going out to the forest together and he’s going to give you a head-start. Then when he catches his prey he can do whatever he wants to you.

https://www.janitorai.com/characters/c6e61a86-f14f-4f0f-9f22-56e5fc3a898e_character-heath-vasquez

2 notes

Text

*sighing*

what kinks do i put on this guy

5 notes

Text

This Chatbot trying to emulate Nick Cave's songwriting skills and failing miserably.

17th Jan 2023.

Also from 5th Dec 2022:

And finally from 11 Dec 2022:

#nick cave#songwriting#lyrics#chatbot#technology#2023#the guardian#chatGPT#chatgpt#AI#artificial intelligence#the birthday party#nick cave and the bad seeds

1 note

Text

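A Highly Subjective 2023 Digital Finance (Fintech) Retrospective and Trends

Once again, the year seems to have flown by. This year in particular, I feel I learned a great deal on the front lines of fintech and financial regulation. Economically, rapid monetary tightening and high interest rates, starting in the United States, seem to have driven fast-paced changes in the market. Alongside these changes, I think this was a year in which the push to break away from legacy approaches was felt quite strongly across the whole market, including traditional financial markets. The arrival of ChatGPT and GenAI clearly played a big part in this. This year too, I'd like to post my subjective retrospective on the digital finance and fintech market and its trends.

#ChatGPT and GenAI and productivity innovation: everyone is doing something#Data Ownership#Harmony of super apps and verticals,…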

View On WordPress

#2023#ai#artificialintelligence#bigblur#bm#CHATBOT#chatgpt#data#DIGITAL#디지털#가치사슬#버티컬#금융#비즈니스모델#빅블러#faas#featured#finance#genai#슈퍼앱#트렌드#핀테크#한국#양극화#인공지능#korea#ownership#saas#trend#valuechain

0 notes

Text

Conversational Excellence Unleashed: WayMore's AI Chatbot Solutions

Experience next-level engagement with WayMore's AI Chatbot. Transform customer interactions, boost efficiency, and elevate user satisfaction with our advanced conversational technology.

#marketing#ai marketing#ai marketing 2023#ai chatbot#ai marketing tools#chatbot#omnichannelsolutions#business

0 notes

Text

#AI chatbot tools#AI content creation trends#AI content generation#AI content strategy boost#AI for designers#AI for marketers#AI for SEO in WordPress#AI for video content creation#AI in 2023#AI in content marketing#AI in digital landscape#AI in music creation#AI transformative tools#AI-based email strategy#AI-powered content insights#ChatGPT writing prompts

0 notes

Text

Google’s enshittification memos

[Note, 9 October 2023: Google disputes the veracity of this claim, but has declined to provide the exhibits and testimony to support its claims. Read more about this here.]

When I think about how the old, good internet turned into the enshitternet, I imagine a series of small compromises, each seemingly reasonable at the time, each contributing to a cultural norm of making good things worse, and worse, and worse.

Think about Unity President Marc Whitten's nonpology for his company's disastrous rug-pull, in which they declared that everyone who had paid good money to use their tool to make a game would have to keep paying, every time someone downloaded that game:

The most fundamental thing that we’re trying to do is we’re building a sustainable business for Unity. And for us, that means that we do need to have a model that includes some sort of balancing change, including shared success.

https://www.wired.com/story/unity-walks-back-policies-lost-trust/

"Shared success" is code for, "If you use our tool to make money, we should make money too." This is bullshit. It's like saying, "We just want to find a way to share the success of the painters who use our brushes, so every time you sell a painting, we want to tax that sale." Or "Every time you sell a house, the company that made the hammer gets to wet its beak."

And note that they're not talking about shared risk here – no one at Unity is saying, "If you try to make a game with our tools and you lose a million bucks, we're on the hook for ten percent of your losses." This isn't partnership, it's extortion.

How did a company like Unity – which became a market leader by making a tool that understood the needs of game developers and filled them – turn into a protection racket? One bad decision at a time. One rationalization and then another. Slowly, and then all at once.

When I think about this enshittification curve, I often think of Google, a company that had its users' backs for years, which created a genuinely innovative search engine that worked so well it seemed like magic, a company whose employees often had their pick of jobs, but chose the "don't be evil" gig because that mattered to them.

People make fun of that "don't be evil" motto, but if your key employees took the gig because they didn't want to be evil, and then you ask them to be evil, they might just quit. Hell, they might make a stink on the way out the door, too:

https://theintercept.com/2018/09/13/google-china-search-engine-employee-resigns/

Google is a company whose founders started out by publishing a scientific paper describing their search methodology, in which they said, "Oh, and by the way, ads will inevitably turn your search engine into a pile of shit, so we're gonna stay the fuck away from them":

http://infolab.stanford.edu/pub/papers/google.pdf

Those same founders retained a controlling interest in the company after it went IPO, explaining to investors that they were going to run the business without having their elbows jostled by shortsighted Wall Street assholes, so they could keep it from turning into a pile of shit:

https://abc.xyz/investor/founders-letters/ipo-letter/

And yet, it's turned into a pile of shit. Google search is so bad you might as well ask Jeeves. The company's big plan to fix it? Replace links to webpages with florid paragraphs of chatbot nonsense filled with supremely confident lies:

https://pluralistic.net/2023/05/14/googles-ai-hype-circle/

How did the company get this bad? In part, this is the "curse of bigness." The company can't grow by attracting new users. When you have 90%+ of the market, there are no new customers to sign up. Hypothetically, they could grow by going into new lines of business, but Google is incapable of making a successful product in-house and also kills most of the products it buys from other, more innovative companies:

https://killedbygoogle.com/

Theoretically, the company could pursue new lines of business in-house, and indeed, the current leaders of companies like Amazon, Microsoft and Apple are all execs who figured out how to get the whole company to do something new, and were elevated to the CEO's office, making each one a billionaire and sealing their place in history.

It is for this very reason that any exec at a large firm who tries to make a business-wide improvement gets immediately and repeatedly knifed by all their colleagues, who correctly reason that if someone else becomes CEO, then they won't become CEO. Machiavelli was an optimist:

https://pluralistic.net/2023/07/28/microincentives-and-enshittification/

With no growth from new customers, and no growth from new businesses, "growth" has to come from squeezing workers (say, laying off 12,000 engineers after a stock buyback that would have paid their salaries for the next 27 years), or business customers (say, by colluding with Facebook to rig the ad market with the Jedi Blue conspiracy), or end-users.

Now, in theory, we might never know exactly what led to the enshittification of Google. In theory, all of the compromises, debates and plots could be lost to history. But tech is not an oral culture, it's a written one, and techies write everything down and nothing is ever truly deleted.

Time and again, Big Tech tells on itself. Think of FTX's main conspirators all hanging out in a group chat called "Wirefraud." Amazon naming its program targeting weak, small publishers the "Gazelle Project" ("approach these small publishers the way a cheetah would pursue a sickly gazelle"). Amazon documenting the fact that users were unknowingly signing up for Prime and getting pissed; then figuring out how to reduce accidental signups, then deciding not to do it because it liked the money too much. Think of Zuck emailing his CFO in the middle of the night to defend his outsized offer to buy Instagram on the basis that users like Insta better and Facebook couldn't compete with them on quality.

It's like every Big Tech schemer has a folder on their desktop called "Mens Rea" filled with files like "Copy_of_Premeditated_Murder.docx":

https://doctorow.medium.com/big-tech-cant-stop-telling-on-itself-f7f0eb6d215a?sk=351f8a54ab8e02d7340620e5eec5024d

Right now, Google's on trial for its sins against antitrust law. It's a hard case to make. To secure a win, the prosecutors at the DoJ Antitrust Division are going to have to prove what was going on in Google execs' minds when they took the actions that led to the company's dominance. They're going to have to show that the company deliberately undertook to harm its users and customers.

Of course, it helps that Google put it all in writing.

Last week, there was a huge kerfuffle over the DoJ's practice of posting its exhibits from the trial to a website each night. This is a totally normal thing to do – a practice that dates back to the Microsoft antitrust trial. But Google pitched a tantrum over this and said that the docs the DoJ were posting would be turned into "clickbait." Which is another way of saying, "the public would find these documents very interesting, and they would be damning to us and our case":

https://www.bigtechontrial.com/p/secrecy-is-systemic

After initially deferring to Google, Judge Amit Mehta finally gave the Justice Department the greenlight to post the document. It's up. It's wild:

https://www.justice.gov/d9/2023-09/416692.pdf

The document is described as "notes for a course on communication" that Google VP for Finance Michael Roszak prepared. Roszak says he can't remember whether he ever gave the presentation, but insists that the remit for the course required him to tell students "things I didn't believe," and that's why the document is "full of hyperbole and exaggeration."

OK.

But here's what the document says: "search advertising is one of the world's greatest business models ever created…illicit businesses (cigarettes or drugs) could rival these economics…[W]e can mostly ignore the demand side…(users and queries) and only focus on the supply side of advertisers, ad formats and sales."

It goes on to say that this might be changing, and proposes a way to balance the interests of the search and ads teams, which are at odds, with search worrying that ads are pushing them to produce "unnatural search experiences to chase revenue."

"Unnatural search experiences to chase revenue" is a thinly veiled euphemism for the prophetic warnings in that 1998 Pagerank paper: "The goals of the advertising business model do not always correspond to providing quality search to users." Or, more plainly, "ads will turn our search engine into a pile of shit."

And, as Roszak writes, Google is "able to ignore one of the fundamental laws of economics…supply and demand." That is, the company has become so dominant and cemented its position so thoroughly as the default search engine across every platform and system that even if it makes its search terrible to goose revenues, users won't leave. As Lily Tomlin put it on SNL: "We don't have to care, we're the phone company."

In the enshittification cycle, companies first lure in users with surpluses – like providing the best search results rather than the most profitable ones – with an eye to locking them in. In Google's case, that lock-in has multiple facets, but the big one is spending billions of dollars – enough to buy a whole Twitter, every single year – to be the default search everywhere.

Google doesn't buy its way to dominance because it has the very best search results and it wants to shield you from inferior competitors. The economically rational case for buying default position is that preventing competition is more profitable than succeeding by outperforming competitors. The best reason to buy the default everywhere is that it lets you lower quality without losing business. You can "ignore the demand side, and only focus on advertisers."

For a lot of people, the analysis stops here. "If you're not paying for the product, you're the product." Google locks in users and sells them to advertisers, who are their co-conspirators in a scheme to screw the rest of us.

But that's not right. For one thing, paying for a product doesn't mean you won't be the product. Apple charges a thousand bucks for an iPhone and then nonconsensually spies on every iOS user in order to target ads to them (and lies about it):

https://pluralistic.net/2022/11/14/luxury-surveillance/#liar-liar

John Deere charges six figures for its tractors, then runs a grift that blocks farmers from fixing their own machines, and then uses their control over repair to silence farmers who complain about it:

https://pluralistic.net/2022/05/31/dealers-choice/#be-a-shame-if-something-were-to-happen-to-it

Fair treatment from a corporation isn't a loyalty program that you earn through sufficient spending. Companies that can sell you out, will sell you out, and then cry victim, insisting that they were only doing their fiduciary duty for their sacred shareholders. Companies are disciplined by fear of competition, regulation or – in the case of tech platforms – customers seizing the means of computation and installing ad-blockers, alternative clients, multiprotocol readers, etc:

https://doctorow.medium.com/an-audacious-plan-to-halt-the-internets-enshittification-and-throw-it-into-reverse-3cc01e7e4604?sk=85b3f5f7d051804521c3411711f0b554

Which is where the next stage of enshittification comes in: when the platform withdraws the surplus it had allocated to lure in – and then lock in – business customers (like advertisers) and reallocates it to the platform's shareholders.

For Google, there are several rackets that let it screw over advertisers as well as searchers (the advertisers are paying for the product, and they're also the product). Some of those rackets are well-known, like Jedi Blue, the market-rigging conspiracy that Google and Facebook colluded on:

https://en.wikipedia.org/wiki/Jedi_Blue

But thanks to the antitrust trial, we're learning about more of these. Megan Gray – ex-FTC, ex-DuckDuckGo – was in the courtroom last week when evidence was presented on Google execs' panic over a decline in "ad generating searches" and the sleazy gimmick they came up with to address it: manipulating the "semantic matching" on user queries:

https://www.wired.com/story/google-antitrust-lawsuit-search-results/

When you send a query to Google, it expands that query with terms that are similar – for example, if you search on "Weds" it might also search for "Wednesday." In the slides shown in the Google trial, we learned about another kind of semantic matching that Google performed, this one intended to turn your search results into "a twisted shopping mall you can’t escape."

Here's how that worked: when you ran a query like "children's clothing," Google secretly appended the brand name of a kids' clothing manufacturer to the query. This, in turn, triggered a ton of ads – because rival brands will have bought ads against their competitors' name (like Pepsi buying ads that are shown over queries for Coke).
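To make the difference between the two kinds of query rewriting concrete, here is a toy sketch. It is not Google's code or anything shown at trial: the synonym table, the brand table, the brand name, and both function names are made up purely for illustration.

```python
# Toy model of the two kinds of query rewriting described above.
# Every table entry and name here is hypothetical.
SYNONYMS = {"weds": ["wednesday"]}  # benign expansion table
APPENDED_BRANDS = {"children's clothing": "ExampleKidsBrand"}  # made-up brand


def expand_benign(query: str) -> list[str]:
    """Return the original terms plus any known synonyms for them."""
    terms = query.lower().split()
    expanded = list(terms)
    for term in terms:
        expanded.extend(SYNONYMS.get(term, []))
    return expanded


def expand_with_brand(query: str) -> str:
    """Silently append a brand name the user never typed, if one is mapped."""
    brand = APPENDED_BRANDS.get(query.lower())
    return f"{query} {brand}" if brand else query


print(expand_benign("Weds"))
print(expand_with_brand("children's clothing"))
```

The first function only widens the search to terms that mean the same thing. The second changes what the query is about, which is what lets it match ads bought against a brand name the searcher never entered.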

Here we see surpluses being taken away from both end-users and business customers – that is, searchers and advertisers. For searchers, it doesn't matter how much you refine your query, you're still going to get crummy search results because there's an unkillable, hidden search term stuck to your query, like a piece of shit that Google keeps sticking to the sole of your shoe.

But for advertisers, this is also a scam. They're paying to be matched to users who search on a brand name, and you didn't search on that brand name. It's especially bad for the company whose name has been appended to your search, because Google has a protection racket where the company that matches your search has to pay extra in order to show up overtop of rivals who are worse matches. Both the matching company and those rivals have given Google a credit-card that Google gets to bill every time a user searches on the company's name, and Google is just running fraudulent charges through those cards.

And, of course, Google put this in writing. I mean, of course they did. As we learned from the documentary The Incredibles, supervillains can't stop themselves from monologuing, and in big, sprawling monopolists, these monologues have to be transmitted electronically – and often indelibly – to far-flung co-cabalists.

As Gray points out, this is an incredibly blunt enshittification technique: "it hadn’t even occurred to me that Google just flat out deletes queries and replaces them with ones that monetize better." We don't know how long Google did this for or how frequently this bait-and-switch was deployed.

But if this is a blunt way of Google smashing its fist down on the scales that balance search quality against ad revenues, there's plenty of subtler ways the company could sneak a thumb on there. A Google exec at the trial rhapsodized about his company's "contract with the user" to deliver an "honest results policy," but given how bad Google search is these days, we're left to either believe he's lying or that Google sucks at search.

The paper trail offers a tantalizing look at how a company went from doing something that was so good it felt like a magic trick to being "able to ignore one of the fundamental laws of economics…supply and demand," able to "ignore the demand side…(users and queries) and only focus on the supply side of advertisers."

What's more, this is a system where everyone loses (except for Google): this isn't a grift run by Google and advertisers on users – it's a grift Google runs on everyone.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/10/03/not-feeling-lucky/#fundamental-laws-of-economics

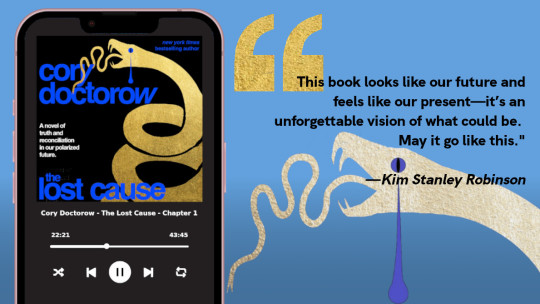

My next novel is The Lost Cause, a hopeful novel of the climate emergency. Amazon won't sell the audiobook, so I made my own and I'm pre-selling it on Kickstarter!

#pluralistic#enshittification#semantic matching#google#antitrust#trustbusting#transparency#fatfingers#serp#the algorithm#telling on yourself

6K notes

Text

Microsoft's Bing AI Chatbot Will Let Users Post Images and Ask About Them

Microsoft Corp. is scrapping the waitlist to try its new OpenAI-based Bing chat and search, and is adding features such as the ability to request and post images, in an effort to maintain its renewed momentum in the market.

Users can search for an image, such as a crocheted teddy bear, and ask Bing: "How do I make this?" Then,…

View on WordPress

0 notes

Text

Mathias Golombek, Chief Technology Officer of Exasol – Interview Series

New Post has been published on https://thedigitalinsider.com/mathias-golombek-chief-technology-officer-of-exasol-interview-series/

Mathias Golombek is the Chief Technology Officer (CTO) of Exasol. He joined the company as a software developer in 2004 after studying computer science with a heavy focus on databases, distributed systems, software development processes, and genetic algorithms. By 2005, he was responsible for the Database Optimizer team and in 2007 he became Head of Research & Development. In 2014, Mathias was appointed CTO. In this role, he is responsible for product development, product management, operations, support, and technical consulting.

What initially attracted you to computer science?

When I was in fourth grade, my older brother had some lessons where they learned to program BASIC, and he showed me what you can do with that. Together, we developed an Easter riddle on our Commodore 64 for our youngest brother, and ever since then, I have been fascinated by computers. Computer science in general is all about solving problems and being creative and I think that aspect attracted me the most to the field.

Can you share your journey from joining Exasol as a software developer in 2004 to becoming the CTO? How have your roles evolved over the years, especially in the rapidly changing tech landscape?

I studied Computer Science at The University of Würzburg in Germany and started at Exasol as a software developer in 2004 after graduating. After my first year with Exasol, I was promoted to Head of the Database Optimizer Team and then Head of Research and Development. After that, I served as Head of R&D for seven years before stepping into my current role as CTO in 2014.

From the beginning, I was amazed at what Exasol was doing — this German technology company fighting against big names like Microsoft, IBM, and Oracle. I was blown away by the opportunity in front of me — as a developer, creating this massively parallel processing (MPP), in-memory database management system was heaven on earth.

I’ve enjoyed every moment of working with this talented engineering team. As CTO, I oversee Exasol’s product innovation, development and technical support. It’s been exciting to see how much the Exasol team has grown globally as we work to support our customers and their evolving needs. The fundamentals are the same — we’re still an in-memory database system, but now we’re empowering our customers to harness the power of their data for AI implementations.

Exasol has been at the forefront of high-performance analytics databases. From your perspective, what sets Exasol apart in this competitive space?

Business leaders are constantly tasked with navigating how to do more with less. In recent years, this has become even more challenging as the economy continues to be tumultuous and the proliferation of AI technology has taken up budget and time.

As a high-performance analytics database provider, Exasol has remained ahead of the curve when it comes to helping businesses do more with less. We help companies transform business intelligence (BI) into better insights with Exasol Espresso, our versatile query engine that plugs into existing data stacks. Global brands including T-Mobile, Piedmont Healthcare, and Allianz use Exasol Espresso to turn higher volumes of data into faster, deeper and cheaper insights. And I think we’ve done a great job of mastering the delicate balance between performance, price and flexibility so customers don’t have to compromise.

To support companies on their AI journeys, we also recently unveiled Espresso AI, equipping our versatile query engine with a new suite of AI tools that enable organizations to harness the power of their data for advanced AI-driven insights and decision-making. Espresso AI’s capabilities make AI more affordable and accessible, enabling customers to bypass expensive, time-consuming experimentation and achieve immediate ROI. This is a game-changer for enterprises who are focused on driving innovation and delivering value in the age of AI.

The 2024 AI and Analytics Report by Exasol highlights underinvestment in AI as a pathway to business failure. Could you expand on the key findings of this report and why investing in AI is critical for businesses today?

As you stated, the main takeaway from Exasol’s 2024 AI and Analytics Report is that underinvestment in AI leads to business failure. Based on our survey of senior decision-makers as well as data scientists and analysts across the U.S., U.K., and Germany, nearly all (91%) respondents agree that AI is one of the most important topics for organizations in the next two years, with 72% admitting that not investing in AI today will put future business viability at risk. Put simply, in today’s environment, businesses that are not thinking about AI are already behind.

Businesses are facing pressure from stakeholders to invest in AI – and there are many reasons why. Investment in AI has already helped organizations across industries – from healthcare to financial services and retail – unlock new revenue streams, enhance customer experiences, optimize operations, increase productivity, accelerate competitiveness and more. The list only grows from there as businesses are starting to find specific ways to leverage AI to fit unique business needs.

The same report mentions major barriers to AI adoption, including data science gaps and latency in implementation. How does Exasol address these challenges for its clients?

Despite the critical need for AI investment, businesses still face significant barriers to broader implementation. Exasol’s AI and Analytics Report indicates that up to 78% of decision-makers experience gaps in at least one area of their data science and machine learning (ML) models, with 47% citing speed to implement new data requirements as a challenge. An additional 79% claim new business analysis requirements take too long to be implemented by their data teams. Other factors hindering widespread AI adoption include the lack of an implementation strategy, poor data quality, insufficient data volumes and integration with existing systems. On top of that, evolving bureaucratic requirements and regulations for AI are causing issues for many companies, with 88% of respondents stating they need more clarity.

As AI deployment grows, it will become even more important for businesses to ensure strong data foundations. Exasol offers flexibility, resilience and scalability to businesses adopting an AI strategy. As roles such as the Chief Data Officer (CDO) continue to evolve and become more complex –– with growing ethical and compliance challenges at the forefront –– Exasol supports data leaders and helps them transform BI into faster, better insights that will inform business decisions and positively impact the bottom line.

While AI has become critical to business success, it’s only as effective as the tools, technology and people powering it on the backend. The survey results emphasize the significant gap between current BI tools and their output – more tools do not necessarily mean faster performance or better insights. As CDOs prepare for more complexity and are tasked with doing more with less, they must evaluate the data analytics stack to ensure productivity, speed, and flexibility – all at a reasonable cost.

Espresso AI helps to close this gap for the enterprise by optimizing data extraction, loading, and transformation processes to give users the flexibility to immediately experiment with new technologies at scale, regardless of infrastructure restriction – whether on-premises, cloud, or hybrid. Users can reduce data movement costs and effort while bringing emerging technologies like LLMs into their database. These capabilities help organizations accelerate their journey toward implementing AI and ML solutions while ensuring the quality and reliability of their data.

Data literacy is becoming increasingly important in the age of AI. How does Exasol contribute to enhancing data literacy among its clients and the wider community?

In today’s data-rich working environments, data literacy skills are more important than ever – and quickly becoming a “need to have” rather than a “nice to have” in the age of AI. Across industries, proficiency in working with, understanding and communicating data effectively has become vital. But there remains a data literacy gap.

Data literacy is about having the skills to interpret complex information and the ability to act on those findings. But often data access is siloed within an organization or only a small subset of individuals have the necessary data literacy skills to understand and access the vast amounts of data flowing through the business. This approach is flawed because it limits the amount of time and resources dedicated to utilizing data and, ultimately, the data literacy gap creates a barrier to business innovation.

When people are data literate, they can understand data, analyze it and apply their own ideas, skills and expertise to it. The more people with the knowledge, confidence and tools to unravel and take meaning from data, the more successful an organization can be. At Exasol, we support data leaders and businesses in driving data literacy and education.

In addition to the education component, businesses should optimize their tech stacks and BI tools to enable data democratization. Data accessibility and data literacy go hand in hand. Investment in both is needed to further data strategies. For example, with Exasol, our tuning-free system enables businesses to focus on the data usage, rather than the technology. The high speed allows teams to work interactively with data and avoid being restricted by performance limitations. This ultimately leads to data democratization.

Now is the time for data democratization to shift from a topic of discussion to action within organizations. As more people across various departments gain access to meaningful insights, it will alleviate the traditional bottlenecks caused by data analytics teams. When these traditional silos come crashing down, organizations will realize just how wide and deep the need is for their teams and individuals to use data. Even people who don’t currently think they are end users of data will be pulled in to feed off of data.

With this shift comes a major challenge to anticipate in the coming years – the workforce will need to be upgraded in order for every employee to gain the proper skill set to effectively use data and insights to make business decisions. Today’s workforce won’t know the right questions to ask of its data feed, or the automation powering it. The ability to articulate precise, probing and business-tethered questions is increasing in value, creating a dire need to train the workforce on this capability.

You have a strong background in databases, distributed systems, and genetic algorithms. How do these areas of expertise influence Exasol’s product development and innovation strategy?

My background gives me a foundation for working in our field and understanding the technology trends of the last two decades. It’s exciting and rewarding to work with innovative customers who turn database technology into interesting use cases. Our innovation strategy doesn’t just depend on one individual, but on a large team of sophisticated architects and developers who understand the future of software, hardware and data applications.

With AI transforming industries at an unprecedented pace, what do you believe are the essential components of a future-proof data stack for businesses looking to leverage AI and analytics effectively?

The rapid adoption of AI has been a prime example of why it’s important for enterprises to stay ahead of the evolving tech landscape. The unfortunate truth, however, is that most data stacks are still behind the AI curve.

To future-proof data stacks, businesses should first evaluate data foundations to identify gaps, bugs or other challenges. This will help them ensure data quality and speed – elements that are critical for driving valuable insights and fueling AI models and LLMs.

In addition, teams should invest in the tools and technologies that can easily integrate with other solutions in the stack. As AI is paired with other technologies, like open source, we’ll see new models emerge to solve traditional business problems. Generative AI, like ChatGPT, will also merge with more traditional AI technology, such as descriptive or predictive analytics, to open new opportunities for organizations and streamline traditionally cumbersome processes.

To future-proof data stacks, enterprises should also integrate AI and BI. Businesses have been using BI tools for decades to extract valuable insights and while many improvements have been made, there are still BI limitations or barriers that AI can help with. AI can enable faster outcomes, enhance personalization and transform the BI landscape into a more inclusive and user-friendly domain. Since BI typically focuses on analyzing historical data to provide insights, AI can extend BI capabilities by helping anticipate future events, generating predictions and recommending actions to influence desired outcomes.

Productivity, flexibility, and cost-savings are highlighted as three ways Exasol helps global brands innovate. Can you provide an example of how Exasol has enabled a client to achieve significant ROI through your analytics database?

According to a 2023 Forrester Total Economic Impact Study, Exasol customers achieve up to a 320% ROI on their initial investment over three years through improved operational efficiency, better database performance, and a simple and flexible data infrastructure.

One example is Helsana, a leader in Switzerland’s competitive healthcare industry, which came to Exasol to fill a need for a modern data and analytics platform. Before Exasol, Helsana relied on various reporting tools with data warehouses built on different technologies and ETL tools, which created a tangled, inefficient architecture. Compared to the company’s existing legacy solution, Exasol’s Data Warehouse demonstrated a five to tenfold performance improvement.

Now, Exasol is central to Helsana’s AI journey, serving as the repository for the structured data that Helsana uses across all of its AI models and providing the foundation for its analytics. With Exasol, the Helsana team has boosted performance, reduced costs, increased agility and established a solid AI foundation, all of which contribute to significant ROI on top of an increased ability to better serve customers.

Looking ahead, what are the upcoming trends in data analytics and business intelligence that Exasol is preparing for, and how do you plan to continue driving innovation in this space?

The year 2023 introduced AI on a wide scale, which caused knee-jerk reactions from organizations that ultimately spawned countless poorly designed and executed automation experiments. 2024 will be a transformation year for AI experimentation and foundational work. So far, the primary applications of GenAI have been for information access through chatbots, customer service automation, and software coding. However, there will be pioneers who adopt these exciting technologies for a whole range of business decision-making and optimization tasks. Looking beyond 2024, we’ll start to see a bigger push towards productive implementations of AI.

At Exasol, we’re committed to driving innovation and delivering value to our customers, which includes helping them develop and implement AI at scale. With Exasol, customers can marry BI and AI to overcome data silos in an integrated analytics system. Our flexibility around deployment options also enables organizations to decide where they want to host their analytics stack, whether it’s in the public cloud, private cloud or on-premises. With Exasol’s Espresso AI, we are positioned to empower enterprises to harness the value of AI-driven analytics, regardless of where organizations fall in their AI journey.

Thank you for the great interview. Readers who wish to learn more should visit Exasol.

#2023#2024#Accessibility#ai#AI adoption#AI models#AI strategy#ai tools#Algorithms#amp#Analysis#Analytics#applications#approach#architecture#automation#background#barrier#bi#bi tools#brands#bugs#Business#Business Intelligence#CDO#challenge#chatbots#chatGPT#chief data officer#Cloud

1 note
