#Safety Check

Text

Planning a boat rental in California for your next celebration? Whether you’re hosting a birthday bash or a corporate event, safety should always be a top priority aboard the party boat. With the excitement of the open water and the thrill of the festivities, it’s crucial to take essential precautions to ensure everyone’s well-being.

0 notes

Text

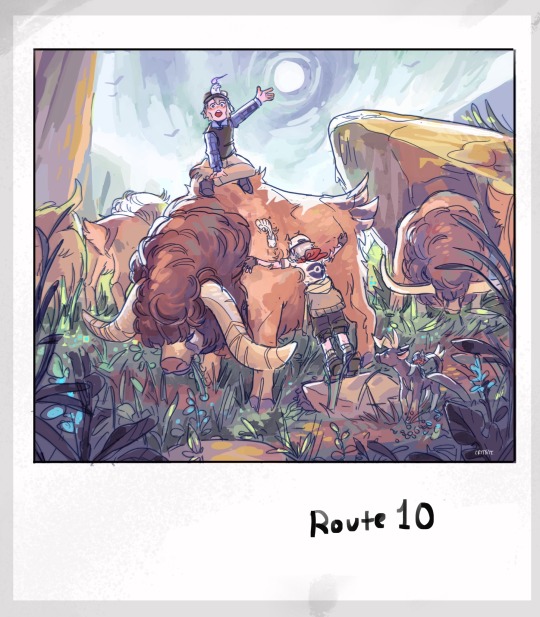

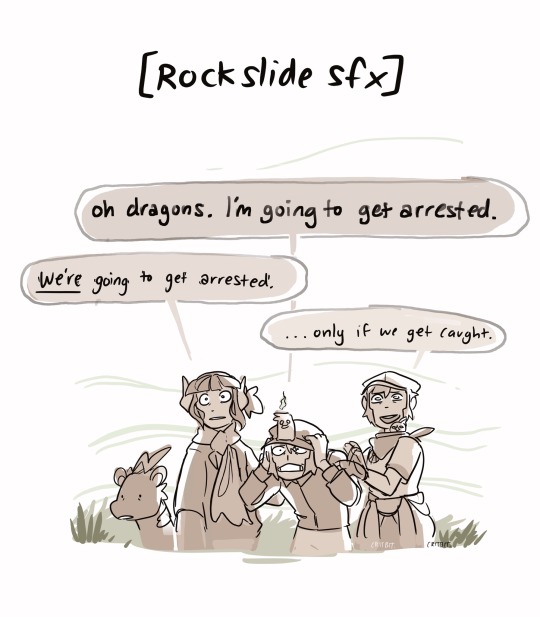

Did you know Route 10 has a history of landslides? I like to think that this route has always been a point of contention within Unova’s Pokémon League, especially with such crumbly cliffs so close to their Victory Road.

(Anyways the Patrat and Pachirisu children find out the hard way about Route 10’s… unfortunate quirks.)

((On the plus side! Behold! A Bouffalant calf!))

Masterpost for more pokemon nonsense

#BOUFFALANT BABY? MORE LIKELY THEN YOU THINK#anyways in b2 route 10 closed down due to landslides#i like to think route 10’s always had issues with its cliffs#not ingo’s fault!! the kids just wanna play with echoes#that cliff is NOT very safety checked#pokemon#art#sketchbook#submas#myart#fanart#pokemon ingo#subway boss ingo#pokemon emmet#pokemon elesa#subway boss emmet#gym leader elesa#kid submas#nimbasa trio#bouffalant#bison#pokemon art#pkmn art#submas comic#web comic#litwick#dwebble#blitzle#tynamo

3K notes

Text

3 iPhone Settings to Check

iPhone Settings

RAYMOND OGLESBY @RaymondOglesby2, April 25, 2023

Year after year, Apple rolls out new features and settings for its iPhones, adding more customization options that change how we use our phones.

Now is a good time to review some settings. Take a few minutes to peruse these three settings and make sure you are getting the most out of your iPhone.

This is for the iPhone. Screenshots are from iPhone…

0 notes

Text

“Humans in the loop” must detect the hardest-to-spot errors, at superhuman speed

I'm touring my new, nationally bestselling novel The Bezzle! Catch me SATURDAY (Apr 27) in MARIN COUNTY, then Winnipeg (May 2), Calgary (May 3), Vancouver (May 4), and beyond!

If AI has a future (a big if), it will have to be economically viable. An industry can't spend 1,700% more on Nvidia chips than it earns indefinitely – not even with Nvidia being a principal investor in its largest customers:

https://news.ycombinator.com/item?id=39883571

A company that pays 0.36-1 cents/query for electricity and (scarce, fresh) water can't indefinitely give those queries away by the millions to people who are expected to revise those queries dozens of times before eliciting the perfect botshit rendition of "instructions for removing a grilled cheese sandwich from a VCR in the style of the King James Bible":

https://www.semianalysis.com/p/the-inference-cost-of-search-disruption

Eventually, the industry will have to uncover some mix of applications that will cover its operating costs, if only to keep the lights on in the face of investor disillusionment (this isn't optional – investor disillusionment is an inevitable part of every bubble).

Now, there are lots of low-stakes applications for AI that can run just fine on the current AI technology, despite its many – and seemingly inescapable – errors ("hallucinations"). People who use AI to generate illustrations of their D&D characters engaged in epic adventures from their previous gaming session don't care about the odd extra finger. If the chatbot powering a tourist's automatic text-to-translation-to-speech phone tool gets a few words wrong, it's still much better than the alternative of speaking slowly and loudly in your own language while making emphatic hand-gestures.

There are lots of these applications, and many of the people who benefit from them would doubtless pay something for them. The problem – from an AI company's perspective – is that these aren't just low-stakes, they're also low-value. Their users would pay something for them, but not very much.

For AI to keep its servers on through the coming trough of disillusionment, it will have to locate high-value applications, too. Economically speaking, the function of low-value applications is to soak up excess capacity and produce value at the margins after the high-value applications pay the bills. Low-value applications are a side-dish, like the coach seats on an airplane whose total operating expenses are paid by the business class passengers up front. Without the principal income from high-value applications, the servers shut down, and the low-value applications disappear:

https://locusmag.com/2023/12/commentary-cory-doctorow-what-kind-of-bubble-is-ai/

Now, there are lots of high-value applications the AI industry has identified for its products. Broadly speaking, these high-value applications share the same problem: they are all high-stakes, which means they are very sensitive to errors. Mistakes made by apps that produce code, drive cars, or identify cancerous masses on chest X-rays are extremely consequential.

Some businesses may be insensitive to those consequences. Air Canada replaced its human customer service staff with chatbots that just lied to passengers, stealing hundreds of dollars from them in the process. But the process for getting your money back after you are defrauded by Air Canada's chatbot is so onerous that only one passenger has bothered to go through it, spending ten weeks exhausting all of Air Canada's internal review mechanisms before fighting his case for weeks more at the regulator:

https://bc.ctvnews.ca/air-canada-s-chatbot-gave-a-b-c-man-the-wrong-information-now-the-airline-has-to-pay-for-the-mistake-1.6769454

There's never just one ant. If this guy was defrauded by an AC chatbot, so were hundreds or thousands of other fliers. Air Canada doesn't have to pay them back. Air Canada is tacitly asserting that, as the country's flagship carrier and near-monopolist, it is too big to fail and too big to jail, which means it's too big to care.

Air Canada shows that for some business customers, AI doesn't need to be able to do a worker's job in order to be a smart purchase: a chatbot can replace a worker, fail to do that worker's job, and still save the company money on balance.

I can't predict whether the world's sociopathic monopolists are numerous and powerful enough to keep the lights on for AI companies through leases for automation systems that let them commit consequence-free fraud by replacing workers with chatbots that serve as moral crumple-zones for furious customers:

https://www.sciencedirect.com/science/article/abs/pii/S0747563219304029

But even stipulating that this is sufficient, it's intrinsically unstable. Anything that can't go on forever eventually stops, and the mass replacement of humans with high-speed fraud software seems likely to stoke the already blazing furnace of modern antitrust:

https://www.eff.org/de/deeplinks/2021/08/party-its-1979-og-antitrust-back-baby

Of course, the AI companies have their own answer to this conundrum. A high-stakes/high-value customer can still fire workers and replace them with AI – they just need to hire fewer, cheaper workers to supervise the AI and monitor it for "hallucinations." This is called the "human in the loop" solution.

The human in the loop story has some glaring holes. From a worker's perspective, serving as the human in the loop in a scheme that cuts wage bills through AI is a nightmare – the worst possible kind of automation.

Let's pause for a little detour through automation theory here. Automation can augment a worker. We can call this a "centaur" – the worker offloads a repetitive task, or one that requires a high degree of vigilance, or (worst of all) both. They're a human head on a robot body (hence "centaur"). Think of the sensor/vision system in your car that beeps if you activate your turn-signal while a car is in your blind spot. You're in charge, but you're getting a second opinion from the robot.

Likewise, consider an AI tool that double-checks a radiologist's diagnosis of your chest X-ray and suggests a second look when its assessment doesn't match the radiologist's. Again, the human is in charge, but the robot is serving as a backstop and helpmeet, using its inexhaustible robotic vigilance to augment human skill.

That's centaurs. They're the good automation. Then there's the bad automation: the reverse-centaur, when the human is used to augment the robot.

Amazon warehouse pickers stand in one place while robotic shelving units trundle up to them at speed; then, the haptic bracelets shackled around their wrists buzz at them, directing them to pick up specific items and move them to a basket, while a third automation system penalizes them for taking toilet breaks or even just walking around and shaking out their limbs to avoid a repetitive strain injury. This is a robotic head using a human body – and destroying it in the process.

An AI-assisted radiologist processes fewer chest X-rays every day, costing their employer more, on top of the cost of the AI. That's not what AI companies are selling. They're offering hospitals the power to create reverse centaurs: radiologist-assisted AIs. That's what "human in the loop" means.

This is a problem for workers, but it's also a problem for their bosses (assuming those bosses actually care about correcting AI hallucinations, rather than providing a figleaf that lets them commit fraud or kill people and shift the blame to an unpunishable AI).

Humans are good at a lot of things, but they're not good at eternal, perfect vigilance. Writing code is hard, but performing code-review (where you check someone else's code for errors) is much harder – and it gets even harder if the code you're reviewing is usually fine, because this requires that you maintain your vigilance for something that only occurs at rare and unpredictable intervals:

https://twitter.com/qntm/status/1773779967521780169

But for a coding shop to make the cost of an AI pencil out, the human in the loop needs to be able to process a lot of AI-generated code. Replacing a human with an AI doesn't produce any savings if you need to hire two more humans to take turns doing close reads of the AI's code.

This is the fatal flaw in robo-taxi schemes. The "human in the loop" who is supposed to keep the murderbot from smashing into other cars, steering into oncoming traffic, or running down pedestrians isn't a driver, they're a driving instructor. This is a much harder job than being a driver, even when the student driver you're monitoring is a human, making human mistakes at human speed. It's even harder when the student driver is a robot, making errors at computer speed:

https://pluralistic.net/2024/04/01/human-in-the-loop/#monkey-in-the-middle

This is why the doomed robo-taxi company Cruise had to deploy 1.5 skilled, high-paid human monitors to oversee each of its murderbots, while traditional taxis operate at a fraction of the cost with a single, precaratized, low-paid human driver:

https://pluralistic.net/2024/01/11/robots-stole-my-jerb/#computer-says-no

The vigilance problem is pretty fatal for the human-in-the-loop gambit, but there's another problem that is, if anything, even more fatal: the kinds of errors that AIs make.

Foundationally, AI is applied statistics. An AI company trains its AI by feeding it a lot of data about the real world. The program processes this data, looking for statistical correlations in that data, and makes a model of the world based on those correlations. A chatbot is a next-word-guessing program, and an AI "art" generator is a next-pixel-guessing program. They're drawing on billions of documents to find the most statistically likely way of finishing a sentence or a line of pixels in a bitmap:

https://dl.acm.org/doi/10.1145/3442188.3445922
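To make "next-word-guessing" concrete, here is a toy sketch in Python. It is not how any real chatbot is built: it only counts which word follows which in a tiny training text, and the text itself is invented for illustration. The function names `train_bigrams` and `guess_next` are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    followers = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers

def guess_next(followers, word):
    """Return the statistically most likely next word, or None if unseen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Hypothetical training text, invented for this illustration.
corpus = "the cat sat on the mat . the cat ate the fish . the cat slept"
model = train_bigrams(corpus)

print(guess_next(model, "the"))  # the most frequent follower of "the"
print(guess_next(model, "dog"))  # a word the model never saw
```

Even in this toy, the guess is always the most common continuation, whether or not it is the right one for the sentence at hand.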

This means that AI doesn't just make errors – it makes subtle errors, the kinds of errors that are the hardest for a human in the loop to spot, because they are the most statistically probable ways of being wrong. Sure, we notice the gross errors in AI output, like confidently claiming that a living human is dead:

https://www.tomsguide.com/opinion/according-to-chatgpt-im-dead

But the most common errors that AIs make are the ones we don't notice, because they're perfectly camouflaged as the truth. Think of the recurring AI programming error that inserts a call to a nonexistent library called "huggingface-cli," which is what the library would be called if developers reliably followed naming conventions. But due to a human inconsistency, the real library has a slightly different name. The fact that AIs repeatedly inserted references to the nonexistent library opened up a vulnerability – a security researcher created an (inert) malicious library with that name and tricked numerous companies into compiling it into their code because their human reviewers missed the chatbot's (statistically indistinguishable from the truth) lie:

https://www.theregister.com/2024/03/28/ai_bots_hallucinate_software_packages/
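One mechanical backstop for this specific failure can be sketched as a check of requested dependency names against a hand-vetted allowlist, so that a plausible-looking name no one has verified gets flagged automatically. This is a minimal sketch under assumptions: the allowlist contents and the `unvetted` helper are hypothetical, and the name normalization follows the rule described in PEP 503.

```python
import re

# Hypothetical allowlist: packages a team has already vetted by hand.
VETTED_PACKAGES = {"requests", "numpy"}

def normalize(name):
    """Lowercase and collapse runs of '-', '_' and '.' into a single '-'."""
    return re.sub(r"[-_.]+", "-", name).lower()

def unvetted(requirements):
    """Return requested package names that are not on the allowlist."""
    allowed = {normalize(p) for p in VETTED_PACKAGES}
    flagged = []
    for line in requirements:
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        # Keep only the name, dropping version specifiers like '==1.2'.
        name = re.split(r"[<>=!~\[;\s]", line, maxsplit=1)[0]
        if normalize(name) not in allowed:
            flagged.append(name)
    return flagged

# Example: one vetted pin, one plausible-sounding name nobody has checked.
print(unvetted(["requests==2.31", "huggingface-cli", "# comment", "NumPy"]))
```

A check like this only covers package names. It does nothing for the other statistically plausible errors a reviewer still has to catch by eye.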

For a driving instructor or a code reviewer overseeing a human subject, the majority of errors are comparatively easy to spot, because they're the kinds of errors that lead to inconsistent library naming – places where a human behaved erratically or irregularly. But when reality is irregular or erratic, the AI will make errors by presuming that things are statistically normal.

These are the hardest kinds of errors to spot. They couldn't be harder for a human to detect if they were specifically designed to go undetected. The human in the loop isn't just being asked to spot mistakes – they're being actively deceived. The AI isn't merely wrong, it's constructing a subtle "what's wrong with this picture"-style puzzle. Not just one such puzzle, either: millions of them, at speed, which must be solved by the human in the loop, who must remain perfectly vigilant for things that are, by definition, almost totally unnoticeable.

This is a special new torment for reverse centaurs – and a significant problem for AI companies hoping to accumulate and keep enough high-value, high-stakes customers on their books to weather the coming trough of disillusionment.

This is pretty grim, but it gets grimmer. AI companies have argued that they have a third line of business, a way to make money for their customers beyond automation's gifts to their payrolls: they claim that they can perform difficult scientific tasks at superhuman speed, producing billion-dollar insights (new materials, new drugs, new proteins) at unimaginable speed.

However, these claims – credulously amplified by the non-technical press – keep on shattering when they are tested by experts who understand the esoteric domains in which AI is said to have an unbeatable advantage. For example, Google claimed that its DeepMind AI had discovered "millions of new materials," "equivalent to nearly 800 years’ worth of knowledge," constituting "an order-of-magnitude expansion in stable materials known to humanity":

https://deepmind.google/discover/blog/millions-of-new-materials-discovered-with-deep-learning/

It was a hoax. When independent materials scientists reviewed representative samples of these "new materials," they concluded that "no new materials have been discovered" and that not one of these materials was "credible, useful and novel":

https://www.404media.co/google-says-it-discovered-millions-of-new-materials-with-ai-human-researchers/

As Brian Merchant writes, AI claims are eerily similar to "smoke and mirrors" – the dazzling reality-distortion field thrown up by 17th century magic lantern technology, which millions of people ascribed wild capabilities to, thanks to the outlandish claims of the technology's promoters:

https://www.bloodinthemachine.com/p/ai-really-is-smoke-and-mirrors

The fact that we have a four-hundred-year-old name for this phenomenon, and yet we're still falling prey to it, is frankly a little depressing. And, unlucky for us, it turns out that AI therapybots can't help us with this – rather, they're apt to literally convince us to kill ourselves:

https://www.vice.com/en/article/pkadgm/man-dies-by-suicide-after-talking-with-ai-chatbot-widow-says

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/04/23/maximal-plausibility/#reverse-centaurs

Image:

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#ai#automation#humans in the loop#centaurs#reverse centaurs#labor#ai safety#sanity checks#spot the mistake#code review#driving instructor

733 notes

Text

These freaks are not studying (good for them)

Closeups below :)

#they all fully passed out by accident#except for Adaine who sat down with the intention of tricking Riz into napping#ugh#I fully believe that they follow the safety in numbers rule while sleeping but none of them ever admit it out loud#when you’ve had your body taken over by a nightmare god in the past you kinda don’t feel great sleeping alone y’know?#check out the Fabian and Gorgug jacket swap too cause that’s my favorite thing to do#the bad kids all wear each others clothes so much it’s hard to remember who they originally belonged to and I believe that#and they love each other so much#fantasy high#d20#d20 fantasy high#dimension 20#d20 fanart#fantasy high fanart#fh#fhjy#adaine abernant#riz gukgak#fabian seacaster#kristen applebees#gorgug thistlespring#fig faeth#the bad kids#kalina#Cassandra is in the stars :)#undescribed#not described#my art

964 notes

Text

All my Florida peeps currently going through Ian, please keep us as updated as you can with how you are doing.

And above all else stay safe.

1 note

Text

iOS 16 Rolls Out With Passwordless Authentication, Spyware Protection

By Ionut Arghire on September 13, 2022

Apple this week has started rolling out iOS 16 with several security and privacy improvements meant to keep users protected from malware, state-sponsored attackers, and an abusive spouse.

The first of these features is Lockdown Mode, a capability designed to keep…

#Apple#clipboard access#iOS 16#lockdown mode#Passkey#permissions#rapid security response#Safety Check

0 notes

Photo

How to Protect Your Garage and Home from Flooding?

There are many causes of flooding that can be difficult to predict, especially if you don't live somewhere prone to them. If the worst-case scenario comes true and you find that your family is facing a flood, the last thing you want is having to worry about whether your home will be okay. You will be too busy making sure that the residents of your house are safe, first and foremost. For this reason, it is a good idea to take a few preventative measures to ensure that your garage and home are in a safe condition and won't be heavily compromised if you experience a flood.

The most important things you can do include making sure that all of the parts of your garage are in working order. You should also call a licensed garage door repair contractor to come out and inspect your garage door, walls, ceiling and floor for any cracks that might let water in. The addition of flood vents beneath the garage may also help keep water pressure from building up by giving the floodwater somewhere to go. With these tips in mind, you can begin to flood-proof your garage, so you aren't scrambling to repair flood damage after the fact.

https://garagedoorchamp.com/how-to-protect-your-garage-and-home-from-flooding/

#garage doors#home improvement#garage door repair#garage door seal#garage door installation#garage door safety#garage door replacement#garage door maintenance#safety check#garage door contractor#garage door supplier

0 notes

Text

I was reminded by a reply that I should probably emphasize this: the Big Cat Public Safety Act literally has an exemption specifically for state colleges and universities. Why? Because there’s two schools with live mascots who live in habitats on campus, and their representatives absolutely would not have supported the bill if it had taken away their college’s cats.

Meet Mike VII at Louisiana State University:

And Leo III at the University of North Alabama:

(Leo III’s mate, Una, passed away a few years ago).

It’s tradition for these schools to have a live mascot, so the bill that *checks notes* is meant to end the unethical commercial trade in big cats had to ensure that they’d still be allowed to have a big cat living next to their stadiums. Luckily neither school takes their mascots to the sidelines of football games anymore, but LSU actually only just stopped that practice in 2017.

These mascot cats have consistently been part of the commercial trade in big cats, although it’s unclear if they will continue to be (even though it’s still legal for these schools to buy their next mascot). Una and Leo III came from a wildlife park in New Hampshire as young cubs, and Mike VII is ostensibly a rescue but the story of the facility he came from doesn’t quite pass the smell test.

Here’s the wild thing. Under the new law, right, most entities that want to keep big cats - like sanctuaries and zoos - have to follow certain rules regarding fencing and breeding restrictions and preventing public contact in order to be allowed to do so. But state schools? Nada. They can buy, sell, and breed without any limits. They could, quite literally, run a tiger puppy-mill or start a cub petting franchise across multiple state universities and it wouldn’t be illegal. Obviously that’s a worst case scenario that’s super unlikely, but it goes to show just how odd it is that these entities have a totally unrestricted exemption. Credible zoological facilities and sanctuaries have to comply with much stricter regulations to prove they’re not exploiting the cats in their care, but for the sake of football, state colleges and universities can do whatever they want!! (sigh). It’s amazing how really specific political interests, such as the culture around football mascots, can result in carve-outs even in bills promoted specifically to create consistent regulations for animal welfare.

#big cat public safety act#college mascots#big cats#Mike the tiger#Mike vii#Leo the Lion#Leo iii#the mobile app isn’t letting me embed links but you can double check all the details about these cats on their websites#just Google their names and it’s easy to find

3K notes

Photo

fibre optic cables yum (part 1)

#art#comic#pokemon#submas#subway boss emmet#joltik#xurkitree#crossroads emmet#I dont have a drawing tag#''please remember your safety checks'' while grabbing electricity creature eating electricity#if it's not clear he really just brushes off getting electrocuted

4K notes

Text

I can’t stop thinking about how every parental figure in Kristen’s life has failed her by prioritizing their other kids.

Obviously, her bio parents are racist assholes who raised her in a fundamentalist cult, and kicked her out at 15, all because her parents were offended she was “taking her friends’ side over theirs.” Which she didn’t, what she did was ask for answers and tell them that Daybreak tried to kill her but they refused to believe her. Instead, they chose to make her homeless so she doesn’t spread the idea that her parents don’t know everything. Two years later, and they’re still doing the same thing at the diner.

Then there’s Jawbone and he’s Tracker’s guardian, and unlike Adaine, she didn’t get a speech about how he’d take her in, or what their relationship was, she just moved in with her girlfriend, and he was the adult of the house. And now that she and Tracker have broken up, he and Sandra Lynn don’t seem to have made the time to talk to her about what that means for her place in this family. Adaine came back from spring break to gifts for every birthday she had without Jawbone and adoption papers. Kristen’s mail from her school was going to her parents’ house when she lives with the guidance counselor.

Then, there’s the fact that when Sandra Lynn and Jawbone moved in together, she took on a role she clearly wasn’t ready for, as she was still repairing her relationship with Fig. Kristen had to be the one to finally snap her out of her self destructive spiral with Garthy and then Sandra Lynn asked her to keep it a secret. Meaning she wouldn’t be able to tell Tracker, the only person who has been meeting her emotional and physical safety needs, above just giving her a room to live in. (And we know this is true because part of the reason Nara and Tracker are together is because Nara doesn’t need those things from Tracker). And yes, Sandra Lynn apologizes to the whole group, as she should, for putting their home in jeopardy, which is a massive step. But she never talks to Kristen about putting her between the 3 people responsible for keeping her out of her parents’ house.

So Sandra Lynn calls Fig, “her only daughter in the world,” and Jawbone is only legally responsible for Adaine and Tracker, and neither of them, nor any other adult, has asked if Kristen feels safe or if she’s okay. Like I’m sorry, who the fuck is taking care of the cleric whose god and teacher died in the same year, is going through deprogramming from a cult that wants her back, her first breakup, seeing her estranged parents and siblings again, and now being expelled on zero grounds despite working herself to the bone to make sure her party gets to go to college?

#dimension 20#d20#fantasy high junior year#kristen applebees#fantasy high#fhjy spoilers#also she’s died twice#And everyone is confused as to why she’s acting kinda batshit?#like as someone who had to do a lot of personal safety work because of one parent as a teenager that shit is exhausting#ever since the episode where we saw how Nara is just Kristen with money and parents#this has been bugging me and I’m getting more and more mad about it#like yes good parents drop the ball they’re people too and that’s okay#but for the love of god someone check on her and Fabian

396 notes

Text

wdym that everyone who stayed back on the island stayed back for love. wdym everyone that ran to save themselves also did it for their love for each other. wdym people tried their best to save the people they'd spent two weeks fighting cuz at the end there was love THERE WAS LOVE.

thats what the qsmp has always been about. it's love. all kinds of love. and what you're willing to do for that love. its love its love its love

#q!cellbit staying bc he didn't want to leave richas#q!baghera staying bc she couldn't leave pomme#q!foolish using and popping a totem trying to save leo#and going back to try and save q!tina and q!mouse#q!phil ripping his wings apart if it means flying him and q!tubbo to safety#q!fit flying through a meteor shower if it means saving ANYONE and saving q!bagi#all the residents shouting and pulling each other onto the boat constantly checking who has made it and who else is left#at the end of purgatory is when they were their MOST human#and even though theyre grieving their children and their friends#unsure of whats about to happen#the love is there#its always been love#qsmp#qsmp purgatory

594 notes

Text

possession

#check safety and stay safe this spooky season 👻✨#submas#emmet#ingo#kudari#nobori#pokemon#pokemon fanart#fanart#tw eye contact

3K notes

Text

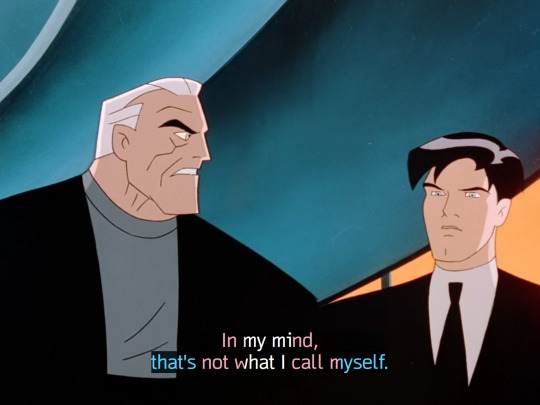

this bit in Batman Beyond, where "Bruce Wayne" -who gave up the cowl 40 years ago- admits he does not refer to himself as Bruce in his own mind, but as Batman, is the epiphany moment for me in the Trans Batman reading of the character.

#what was that one post again: for privacy and safety you can use a fake name online but Watch Out#that's what happened to batman#batman#bruce wayne#trans batman#batman beyond#dcau#trans#sanmemeno#this is not my only trans edit of batman if you want to check out the trans batman tag on my blog#santagno#pvp enabled

451 notes

Text

"dni if you're over/under X age" hey did you know people can lie? and that there are a lot of very motivated people out there who want to hop turnstiles whenever possible?

if you want any semblance of privacy with what you post you need to just not post it. it's tumblr. things get reblogged. nonrebloggable things regularly get screenshotted and passed around, often out of spite. blog passwords get cracked. etc. you are kidding yourself if you think there is any boundary of any kind you can maintain/enforce on here. grapple with that and curate what you post accordingly, instead of trying to herd cats while you air every last piece of your dirty laundry for all and sundry

#your yard's fence is barely knee high and constructed entirely of straw and twine#i'm not saying this to be a bitch i'm saying it for people's own safety and peace of mind and some need the reality check#shebbz shoutz

277 notes
