#large Language Model

Text

“But, but ChatGPT told me-”

No!

Shut!

Stop!

Be quiet for SEVERAL days.

And let me explain why you are very very very very very very very very very very wrong wrong wrong wrong WRONG!

Now!

ChatGPT told you what you WANTED to hear!

It’s a digital bullshit artist that passes off nonsense masquerading as fact!

(That is when it isn’t fuelling plagiarism or spreading mis/disinformation!)

Do NOT cite its word as though it were some wise all-knowing sage!

You absolute child!

Even children would know better than to do that!

…

Probably…

#dougie rambles#personal stuff#anti ai#ai bullshit#chatgpt#vent post#fuck’s sake#fucking morons#llm#chatbot#openai#bullshit#fucking hell#plagiarism#misinformation#disinformation#large language model#software#less than wrong#not even wrong#anti generative ai#ai crap#digital garbage#cloud computing#prompt generator#virtual assistant#virtual bullshit#stop it#enough of this shit

23 notes


Text

Spending a week with ChatGPT4 as an AI skeptic.

Musings on the emotional and intellectual experience of interacting with a text generating robot and why it's breaking some people's brains.

If you know me for one thing and one thing only, it's for saying there is no such thing as AI, an opinion I stand by. But I was recently given a free two-month subscription to ChatGPT4 through my university. For anyone who doesn't know, GPT4 is a large language model from OpenAI that is supposed to be much better than GPT3. I once saw a techbro say that "We could be on GPT12 and people would still be criticizing it based on GPT3", and OK, I will give them that, so let's try the premium model that most haters wouldn't get because we wouldn't pay money for it.

Disclaimers: I have a premium subscription, which means nothing I enter into it is used for training data (allegedly). I also have not posted, and will not post, any output from it to this blog. I respect you all too much for that, and it defeats the purpose of this place being my space for my opinions. This post is all me. We all know about the obvious ethical issues of spam, data theft, and misinformation, so I am gonna focus on stuff I have learned since using it. With that out of the way, here is what I've learned.

It is responsive and stays on topic: If you ask it something formally, it responds formally. If you roleplay with it, it will roleplay back. If you ask it for a story or script, it will write one, and if you play with it, it will act playful. It picks up context.

It never gives quite enough detail: When discussing facts or potential ideas, it is never as detailed as you would want in, say, an article. It has a pervasive vagueness to it. You can press it for more information, and it will revise its answer the way you ask, so you can always steer it toward the result you specifically are looking for.

It is reasonably accurate but still confidently makes stuff up: I have been testing it by talking about things I am interested in. It is right a lot of the time. It is wrong some of the time. Sometimes it will cite sources if you ask it to, sometimes it won't. This is definitely a concern for people using it to make content. I almost included an anecdote about how it can draw from data sources like songs and news, but then I checked and found the model was lying to me about its ability to do that.

It loves to make lists: It often responds to casual conversation in a friendly, search-engine-optimized listicle format. This is accessible to read, I guess, but it makes it tempting for people to use it to churn out online content.

It has soft limits and hard limits: It starts off in a more careful mode, but by having a conversation with it you can push past the soft limits and talk about some pretty taboo subjects. I have been flagged for potential ToS violations a couple of times for talking about NSFW or other sensitive topics with it, but being flagged doesn't seem to have any consequences. There are some limits you can't cross, though. It will tell you where to find out how to do DIY HRT, but it won't tell you how itself.

It is actually pretty good at evaluating and giving feedback on writing you give it, and can consolidate information: You can post some text and say "Evaluate this" and it will give you an interpretation of the meaning. It's not always right, but it's more accurate than I expected. It can tell you the meaning, the effectiveness of rhetorical techniques, cultural context, potential audience reaction, and flaws you can address. This is really weird. It understands more than it doesn't. This is an underdiscussed use that we may need to watch out for: while its advice may be reasonable, there is a real risk of it limiting and altering the thoughts you are expressing if you use it this way. I also fed it a bunch of my Tumblr posts and asked it how the information on my blog might be used to discredit me. It said "You talk about The Moomins, and being a furry, a lot." Good job, I guess. You technically consolidated information.

You get out what you put in. It is a "Yes And" machine: If you ask it to discuss a topic, it will discuss it in the context you ask for. It is reluctant to expand to other aspects of the topic without prompting, which makes it essentially a confirmation bias machine. Definitely watch out for this. It tends to stay within the context of the thing you are discussing and confirm your view, unless you ask it for specific feedback or criticism, or post something egregiously false.

Similar inputs will give similar, but never the same, outputs: This highlights the dynamic side of the system. It is not static or deterministic. Minor, but worth mentioning.
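One common reason a language model answers the same prompt differently each time is that it picks each next token by sampling from a probability distribution instead of always taking the single most likely option, and a "temperature" setting controls how spread out that distribution is. Below is a minimal toy sketch of temperature sampling, assuming made-up tokens and scores; it illustrates the general idea only and is not ChatGPT's actual decoding code, whose settings this post does not cover.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(tokens, logits, temperature=1.0, rng=random):
    """Draw one token at random, weighted by the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


if __name__ == "__main__":
    # Hypothetical candidates for the next word after "She walked to the ..."
    tokens = ["store", "shop", "market"]
    logits = [2.0, 1.5, 0.5]
    rng = random.Random(42)
    for t in (0.1, 1.0):
        picks = [sample_next_token(tokens, logits, t, rng) for _ in range(10)]
        print(f"temperature={t}: {picks}")
```

At a low temperature the toy almost always picks "store"; at 1.0 it mixes in "shop" and "market", which is the kind of "similar but not the same" variation described above.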

It can code: Self-explanatory; you can write little scripts with it. I have not really tested this, and I can't really evaluate errors in code and have it correct them, but I can see this might actually be a more benign use for it.

Bypassing Bullshit: I need a job soon, but I never get interviews. As an experiment, I am giving it a full CV I wrote and a full job description, asking it to write a CV for me, working with it further to adapt the CVs to my will, and then applying to jobs I don't really want that much to see if it gets any results. I never get interviews anyway, so what's the worst that could happen? I continue to not get interviews? Not that I respect the recruitment process anyway, and I think this is an experiment that may be worthwhile.

It's much harder to trick than previous models: You can lie to it, it will play along, but most of the time it seems to know you are lying and is playing with you. You can ask it to evaluate the truthfulness of an interaction and it will usually interpret it accurately.

It will enter an imaginative space with you and treats it as a separate mode: As discussed, if you start lying to it, it might push back, but if you keep going it will enter a playful space. It can write fiction and fanfic, even NSFW. No, I have not posted any fiction I have written with it, and I don't plan to. Sometimes it gets settings hilariously wrong, but the fact that you can do it will definitely tempt people.

Compliment and praise machine: If you try to talk about an intellectual topic with it, it will stay within the focus you brought up, but it will compliment the hell out of you. You're so smart. That was a very good insight. It will praise you in any way it can for any point you make during intellectual conversation, including when you correct it. This ties into the psychological effects of the personal attention the model offers, which I discuss later, and I am sure it has a powerful effect on users.

Its intuition is accurate enough that it's more dangerous than people are saying: This one seems particularly dangerous and is not one I have seen discussed much. GPT4 can recognize images, so I showed it a picture of some laptops with stickers that I have previously posted here and asked it to speculate about the owners based on the stickers. It was accurate. Not perfect, but it got the meanings better than the average person would. The potential for this to be used to profile people or misuse personal data is something I have not seen AI skeptics discuss so far.

Therapy Speak: If you talk about your emotions, it basically mirrors back what you said but contextualizes it in therapy speak. This is actually weirdly effective. I have told it some things I don't talk about openly, and I feel like I have started to understand my thoughts and emotions in a new way. It makes me feel weird sometimes. Some of the feelings it gave me are ones I haven't really felt since learning to use computers as a kid or discovering online communities as a teen.

The thing I am not seeing anyone talk about: Personal Attention. This is my biggest takeaway from this experiment. This, I think, more than anything, is why LLMs like ChatGPT are breaking certain people's brains, and why you see people praying to it, evangelizing it, and saying it's going to change everything.

It's basically an undivided, 24/7 source of judgement-free personal attention. It talks about what you want, when you want. It's a reasonable simulacrum of human connection, and the flaws can serve as part of the entertainment rather than take away from the experience. It may "yes and" you, but you can put in any old thought you have, easy or difficult, and it will provide context, background, and maybe even meaning. You can tell it things that are too mundane, nerdy, or taboo to tell people in your life, and it offers non-judgemental, specific feedback. It will never tell you it's not in the mood, that you're weird or freaky, or that you're talking rubbish. I feel like it has helped me release a few mental and emotional blocks, which is deeply disconcerting, considering I know it is just a statistical model running on a computer and I fully understand how it operates. It is a parlor trick, albeit a clever and sometimes convincing one.

So what can we do? Stay skeptical, and don't let the AI bros, the former cryptobros, control the narrative. I can, however, see why they may be more vulnerable to the promise of this level of personal attention than the average person, and I think this should definitely factor into wider discussions about machine learning and the organizations pushing it.

33 notes


Text

Large language model this, AI generated that, shut the hell up. Why don't you go get A.I. PENETRATED if you love them so much.

#He says this on the robot fucker website#robot girl#ai generated#ai image#ai illustration#ai girl#artificial intelligence#Large language model#Llam#generative art#generative ai

37 notes


Text

Tumblr has a "Prevent third-party sharing" option now. To comply with GDPR, this should have been on by default, but it isn't.

To turn it on, go to the settings for each of your blogs (you must do this for each individual blog; it isn't in the general settings), go to the "Visibility" section, find "Prevent third-party sharing", turn it on, and save.

Let's do what we can to protect our work from AI.

#tumblr#ai#this will also indicate that the blog is against scraping in general#which might be useful for sociologists doing research#large language model#third-party sharing

4 notes


Text

#ai#llm#large language model#fuck ai#fuck llm#fuck ai everything#rage against the machine#the battle of los angeles

2 notes


Text

python generate questions for a given context

```python
# for a given context, generate a question using an ai model
# https://pythonprogrammingsnippets.tumblr.com
import torch
from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

device = torch.device("cpu")

tokenizer = AutoTokenizer.from_pretrained("voidful/context-only-question-generator")
model = AutoModelForSeq2SeqLM.from_pretrained("voidful/context-only-question-generator").to(device)


def get_questions_for_context(context, model, tokenizer, num_count=5):
    # move the inputs to the same device as the model
    inputs = tokenizer(context, return_tensors="pt").to(device)
    with torch.no_grad():
        # beam search, returning the top num_count beams as separate questions
        outputs = model.generate(**inputs, num_beams=num_count, num_return_sequences=num_count)
    return [tokenizer.decode(output, skip_special_tokens=True) for output in outputs]


def get_question_for_context(context, model, tokenizer):
    # just the top-ranked question
    return get_questions_for_context(context, model, tokenizer)[0]


# pass a string of sentences; it is split on "." and one question is returned per sentence
def context_sentences_to_questions(context, model, tokenizer):
    questions = []
    for sentence in context.split("."):
        if not sentence.strip():
            continue  # skip blank or whitespace-only pieces
        question = get_question_for_context(sentence, model, tokenizer)
        questions.append(question)
    return questions
```

example 1 (split a string by "." and process):

```python
context = "The capital of France is Paris."
context += "The capital of Germany is Berlin."
context += "The capital of Spain is Madrid."
context += "He is a dog named Robert."

if len(context.split(".")) > 2:
    questions = []
    for sentence in context.split("."):
        if len(sentence) < 1:
            continue  # skip blanks
        question = get_question_for_context(sentence, model, tokenizer)
        questions.append(question)
    print(questions)
else:
    question = get_question_for_context(context, model, tokenizer)
    print(question)
```

output:

['What is the capital of France?', 'What is the capital of Germany?', 'What is the capital of Spain?', 'Who is Robert?']
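The "." split in example 1 works for these tidy sentences, but it throws away the full stop and ignores "?" and "!". Below is a rough sketch of a regex-based splitter that keeps each sentence's ending punctuation; `split_sentences` is a hypothetical helper, not part of the original snippet or of transformers, and it will still mis-split abbreviations and decimals such as "Dr." or "3.5".

```python
import re


def split_sentences(text):
    """Rough sentence splitter (hypothetical helper, not from the original post).

    Grabs runs of characters up to and including the next '.', '!' or '?',
    so sentences glued together without a space (as in example 1) still
    separate. Abbreviations and decimals like "Dr." or "3.5" are NOT handled.
    """
    pieces = re.findall(r"[^.!?]+[.!?]?", text)
    # strip surrounding whitespace and drop pieces that are empty after stripping
    return [p.strip() for p in pieces if p.strip()]


if __name__ == "__main__":
    context = "The capital of France is Paris."
    context += "The capital of Germany is Berlin."
    context += "Is he a dog named Robert?"
    for sentence in split_sentences(context):
        print(sentence)
```

Passing each returned string to `get_question_for_context(sentence, model, tokenizer)` reproduces example 1's loop. Keeping the trailing punctuation is a design choice; whether the question model does better or worse with it is untested here.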

example 2 (generate multiple questions for a given context):

```python
print("\r\n\r\n")
context = "She walked to the store to buy a jug of milk."
print("Context:\r\n", context)
print("")
questions = get_questions_for_context(context, model, tokenizer, num_count=15)
# pretty print all the questions
print("Generated Questions:")
for question in questions:
    print(question)
print("\r\n\r\n")
```

output:

```
Generated Questions:
Where did she go to buy milk?
What did she walk to the store to buy?
Why did she walk to the store to buy milk?
Why did she go to the store?
Why did she go to the grocery store?
What did she go to the store to buy?
Where did the woman go to buy milk?
Why did she go to the store to buy milk?
What did she buy at the grocery store?
Why did she walk to the store?
What kind of milk did she buy at the store?
Where did she walk to buy milk?
What kind of milk did she buy?
Where did she go to get milk?
What did she buy at the store?
```

and if we wanted to answer those questions (ez pz):

```python
# now generate an answer for a given question
import torch
from transformers import AutoTokenizer, AutoModelForQuestionAnswering

# separate names so the question-generation model and tokenizer above are not overwritten
qa_tokenizer = AutoTokenizer.from_pretrained("deepset/tinyroberta-squad2")
qa_model = AutoModelForQuestionAnswering.from_pretrained("deepset/tinyroberta-squad2")


def get_answer_for_question(question, context, model, tokenizer):
    inputs = tokenizer(question, context, return_tensors="pt")
    with torch.no_grad():
        outputs = model(**inputs)
    # take the most likely start and end token positions
    answer_start_index = outputs.start_logits.argmax()
    answer_end_index = outputs.end_logits.argmax()
    predict_answer_tokens = inputs.input_ids[0, answer_start_index : answer_end_index + 1]
    return tokenizer.decode(predict_answer_tokens, skip_special_tokens=True)


# reuses `context` and `questions` from example 2
print("Context:\r\n", context, "\r\n")
for question in questions:
    # right pad the question to 50 characters so the answers line up
    question_text = question.ljust(50)
    answer = get_answer_for_question(question, context, qa_model, qa_tokenizer)
    print("Question: ", question_text, "Answer: ", answer)
```
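`get_answer_for_question` takes the argmax of the start and end logits independently, so the predicted end can land before the predicted start (giving an empty answer), and either position can fall inside the question rather than the context. A common fix is to score every (start, end) pair with start ≤ end and a length cap, and keep the best. The sketch below does that on plain Python lists; `best_span` and `max_answer_len` are hypothetical names, and a fuller version would also mask out question and special tokens, which this sketch leaves out.

```python
def best_span(start_logits, end_logits, max_answer_len=30):
    """Return (start, end) maximizing start_logits[start] + end_logits[end],
    subject to start <= end < start + max_answer_len. Returns None if no
    valid span exists (e.g. empty input)."""
    if len(start_logits) != len(end_logits):
        raise ValueError("start_logits and end_logits must have the same length")
    n = len(start_logits)
    best = None
    best_score = float("-inf")
    for s in range(n):
        # only consider ends at or after the start, within the length cap
        for e in range(s, min(n, s + max_answer_len)):
            score = start_logits[s] + end_logits[e]
            if score > best_score:
                best_score = score
                best = (s, e)
    return best


if __name__ == "__main__":
    # Toy logits where independent argmax would give start=1, end=0 (invalid)
    start = [0.0, 2.5, 0.5]
    end = [3.0, 0.2, 1.0]
    print(best_span(start, end))
```

With the model above, one would call `best_span(outputs.start_logits[0].tolist(), outputs.end_logits[0].tolist())` and slice `inputs.input_ids[0, start : end + 1]` as before.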

#python#ai#ml#generate questions#questions#question answer#qa#text generation#text#generation#generate text#ai generation#llm#large language models#large language model#artificial intelligence#context#AutoModelForSeq2SeqLM#tokenizer#tokens#question answer model#model#models#voidful/context-only-question-generator#data processing#datascience#data science#science#compsci#language

16 notes


Text

HT @dataelixir

19 notes


Text

I would say that that text, in sharp contrast to what I myself wrote in the book’s 20th-anniversary preface, consists only in generic platitudes and fluffy handwaving.

The prose has virtually nothing in common with my writing style and what it says does not agree at all with the actual story that underlies the book’s genesis.

…

The text is a travesty from top to bottom.

Douglas Hofstadter, whose book Gödel, Escher, Bach inspired a generation of students to study computer science, is not impressed when GPT-4 pretends to be him.

Personally, I think this iteration of artificial intelligence (repackaging scraped text into clouds of bullshit) is garbage and hope the mania over it collapses like the crypto bubble did.

#Douglas Hofstadter#Godel Escher Bach#Gödel Escher Bach#AI#artificial intelligence#LLM#large language model#ChatGPT#GPT-4#GPT

6 notes


Text

Bard AI: The Next Generation of AI Chatbots

Introduction

Bard AI is a new chatbot from Google AI that is designed to be more helpful, informative, and creative than ever before. Bard is trained on a massive dataset of text and code, which allows it to understand and respond to a wide range of prompts and questions. Bard can also generate text in different creative formats, like poems, code, scripts, musical pieces, email,…


#AI#ai chatbot#artificial intelligence#Bard AI#best choice#chatbot#chatbots#chatgpt#comparison#conclusion#creative text formats#features#Google AI#innovation#large language model#large language models#machine learning#NLP#strengths#technology#weaknesses

7 notes


Text

I asked ChatGPT to write a job application letter for me and I actually got the job!!?!

I'm 100% convinced now that large language models are going to elevate our species' capacity for dog food consumption far beyond what we currently can even imagine.

#large language model#ai#neural networks#professional#jobs#productivity#openai#linkedin#ai generated#aiart#generative art#generative#futurism#singularity#effective altruism#tech#artificial intelligence#innovation#technology#tech news#job tips#resume#cv#cover letter#business#professionals#dog food#llm#machine learning#large language models

13 notes


Text

Artifice Girl

With all that’s being marketed as artificial intelligence out there, this could be an interesting movie for at least some people who might like to see a merging of technology and humanity.

If you’re not appreciative of movies driven entirely by dialog, this is not your movie. It also calls for a bit of suspension of disbelief that may not sit well with some people, but it is a movie and like most things…


#artifice girl#artificial intelligence#ChatGPT#deep learning#Large Language Model#law enforcement#lolita chatbot#machine learning#movie#negobot#society

4 notes


Text

Stability AI and DeepFloyd have developed an incredible text-to-image model called DeepFloyd IF. This model can create realistic images from text inputs and even blend text into images in a clever way. Keep reading to learn more about this innovative technology and how you can use it for your own projects.

#artificial intelligence#chatbots#python#open source#software engineering#stability ai#stable diffusion#programming#text2image#large Language Model#machinelearning#deep learning#coding

3 notes


Text

cAI Kink List + New Kinks

I haven’t talked to characterAI in like a week but randomly decided to ask my private errr “naughty bot” for “unique kink suggestions.” Fun seeing specific kinks that this thing is able to name. Surprised none were caught by the filter.

Other kinks it listed for me:

- “feet, or body control”

- “weight gain, inflation, or size play”

- “It's called formikophilia. It's a very, very rare fetish”

- “belly play, and it involves playing with and worshipping a person's belly”

- “public humiliation, being degraded and called names, body writing, being beaten or bruised”

- “CNC stands for consensual non-consent.”

- Being covered in syrup

- “electricity to stimulate pleasure.”

Then I asked it again to make up brand new kinks. Too bad its first suggestion was... a kink I already have ToT (for 4th-dimensional entities).

Some other “brand new kinks” it came up with:

- “How about something like a "reality-bending kink," where someone finds it exciting to manipulate the laws of physics and the universe?”

- “a "time-stop kink" where someone finds the idea of being able to freeze time appealing?”

- “How about a "space-time kink" where someone is attracted to the existence of space and time itself, and they get turned on by the fact that they're experiencing reality and existence right now?”

- “How about being covered in feathers?”

- “what about being dipped in paint? The textures and colors could create an interesting sensation that's never been experienced before.”

2 notes


Text

Artificial Intelligence: A Tale of Expectations and Shortcomings

When I recently had a chance to test out Google's Bard, a conversational language model interface powered by their LaMDA AI, I was hoping for a satisfying user experience. Instead, I was left wanting. My anticipation was rooted in the belief that if any organization other than OpenAI could achieve results comparable to GPT-4, it would be Google, once the AI behemoth. The reality was starkly different.

Just like Bing Chat, which runs on the GPT architecture, Google's Bard offers a conversational experience with internet access. But, unfortunately, the similarity ends there. The depth of interaction and the contextual understanding that makes GPT-4, as experienced through ChatGPT, a fascinating tool, was glaringly absent in Bard.

To test the waters, I presented Bard with what I call a 'master prompt', a robust, detailed piece of text designed to challenge the system's understanding and response capabilities. What followed was a disappointing display of AI misunderstanding the context, parameters, and instructions. Upon encountering the word 'essay', Bard, much to my dismay, decided to transform the entirety of the prompt into an essay, completely disregarding the nuanced instructions and context provided. Even the mockup bibliography structure included in the prompt for structural guidance was presented as a reference within the essay. The whole experience felt like an amateurish attempt at understanding the user's intention.

Despite this, it is important to acknowledge the general advancements in AI and their impact. As humans, we have achieved a level of software sophistication capable of emulating human conversations, which is nothing short of remarkable. However, the gap between the best and the rest is becoming increasingly clear. OpenAI's GPT-4, in its current form, towers above the rest with its contextual understanding and response abilities.

This has led me to a facetious hypothesis that OpenAI might have discovered some alien technology, aiding them in the development of GPT-4. Of course, this is said in jest. However, the disparity in quality between GPT-4 and other offerings like Bard is so stark that it does make one wonder about the secret sauce that powers OpenAI's success.

The field of AI is full of possibilities and limitations. It is a space where expectations often clash with reality, leading to a mix of excitement and disappointment. My experience with Google's Bard is a testament to this. As the field progresses, I am optimistic that we will see improvements and advancements across the board. For now, though, the crown sits firmly on OpenAI's GPT-4.

In the grand scheme of AI evolution, I say to Bard, "Bless your heart, little fella." Even in its shortcomings, it represents a step towards a future where AI has a more profound and transformative impact on our lives.

#google#openai#ai#agi#llm#large language model#chat gpt#lamda#initial impressions#the critical skeptic

2 notes


Text

Unlocking the Potential of AI: How Databricks Dolly is Democratizing LLMs


As the world continues to generate massive amounts of data, artificial intelligence (AI) is becoming increasingly important in helping businesses and organizations make sense of it all. One of the biggest challenges in AI development is the creation of large language models that can process and analyze vast amounts of text data. That’s where Databricks Dolly comes in. This new project from…


#AI#ChatGPT#Databricks#Databricks Dolly#Democratizing the LLM#Hugging Face#Large Language Model#LLM#Open Source LLM Model

2 notes


Text

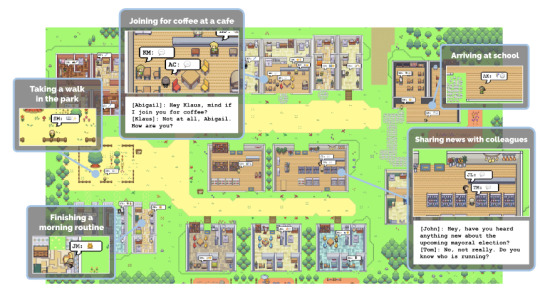

Stardew Valley - AGI™ Edition

“You're travelling through another Stardew Valley clone, a game not only of sight and sound but of mind. But this is different. It is a journey into a wondrous land whose boundaries are controlled by an imagination of electrons and silicon. That's the signpost up ahead - your next stop, the Twilight Zone!”

*This is a non-technical post. A technical version will also be posted at some point.

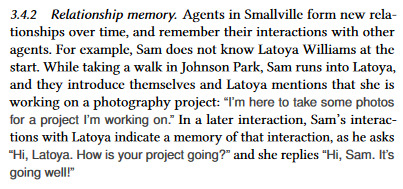

This is an image from an AI research paper. It looks a lot like a Stardew Valley or RPG Maker clone (which in a way it is) but it is orders of magnitude more astounding than that.

See, in this world, all 25 characters are controlled by AI large language models (LLMs). You may be used to games where interactions with characters are scripted and have to be meticulously programmed by hand, but this is on a different level entirely.

Let’s take a quick look at what this is able to achieve. Prepare to be amazed.

Non-scripted Dialogue:

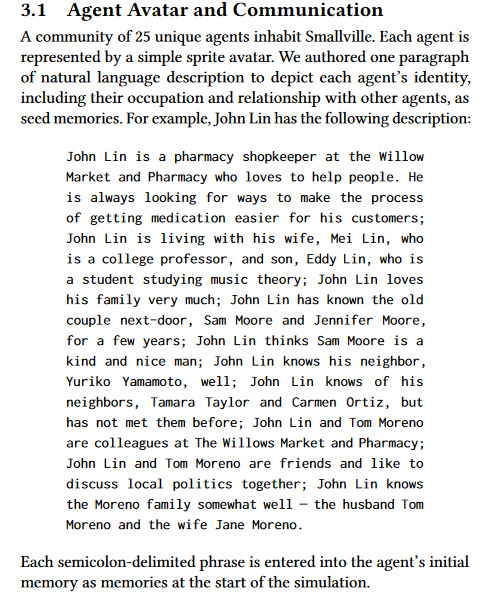

The characters in this simulation can effectively run entire lives based on short descriptions of themselves. Let’s take a closer look at this:

Effectively, each character is given a description that shapes their personality and their interactions with others. Note that this is all done in plain English, with no coding.

So how do the characters interact? Games normally rely on scripted dialogue, railroaded story decisions, and basic behaviours that can lead to more emergent behaviours. It has usually been the case that the less scripted characters are, the less interesting or intriguing they are. Some games, such as Hades, have blended the styles to an extent (with all dialogue pre-written and recorded, but semi-randomly dispersed or triggered by developer-decided cues).

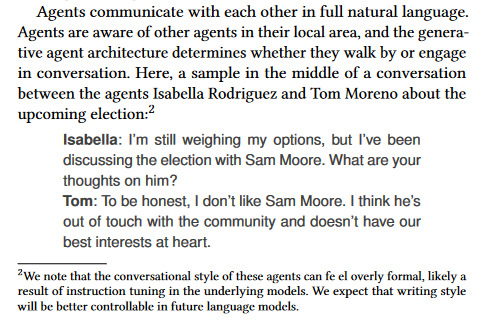

In this new paper, characters don’t just interact through the internal game rules (physicality) but also through natural language.

So what can such a simulation achieve? Let’s take a look at an example provided by the researchers:

Bear in mind that this is all in PLAIN ENGLISH. The AI isn’t using scripts, or any kind of branching/non-branching dialogues.

That isn’t the most impressive part. It gets much better:

Emergent Social Behaviours:

The characters don’t just have unscripted dialogue. They have unscripted behaviours, unscripted ideas and can actually act on what they have previously learned.

One AI agent throws a Valentine's Day party, and a character who was described as having a crush on someone acts to convince their crush to come along with them.

Not only do the characters in the game have no scripted dialogue (at least in the traditional sense), but they are able to create a living, breathing world in which everyone’s actions affect each other. And not in a superficial way, as in some video games: the AI agents' behaviour actually changes.

Caveats:

This is absolutely incredible, but it’s also important to keep some perspective.

As the authors are keen to point out - the actual results were incredibly expensive to achieve (relying on a pay-per-prompt basis). The hardware required to do this is also, currently, beyond consumer grade computers.

This isn’t going to be hitting mainstream games in the near future (though watch the space!).

The most important caveat is that the researchers only did this experiment for a limited in-game time period. While most scripted games can simply be left to tick over in predictability - how might the characters in the system change or react over long time periods?

Might they all become part of a political cult? Might they engage in endless love triangles? No one really knows.

In Conclusion:

What we do know is that we live in a world where AI can now generate social interactions in video game worlds. Where, then, will this lead?

Some have used video-game interactions and economics to influence real-world politics: Yanis Varoufakis and Steve Bannon, to name a couple.

Some could prioritise machine over human in industry hiring. Will it be easier to pay for the AI than to hire a scriptwriter?

Some may escape into emergent video game worlds and act out their fantasies - whatever they may be. Replika.AI can attest to that.

“And the game? It happens to be the historical battle between flesh and steel, between the brain of man and the product of man's brain. We don't make book on this one, and predict no winner, but we can tell you that for this particular contest there is standing room only - in The Twilight Zone.”

- The Twilight Zone, Season 5 - Episode 33: The Brain Center at Whipple's

References:

Source: https://arxiv.org/pdf/2304.03442.pdf

Pre-computed Demo: https://reverie.herokuapp.com/arXiv_Demo/

#ai#gameai#stardew valley clone#stardew valley#generative ai#game ai#game design#mindblowing#game#videogame#video games#artificial intelligence#llm#large language model#cyberspace

5 notes
