#Carnegie Mellon University

Text

by Kaitlyn Landram

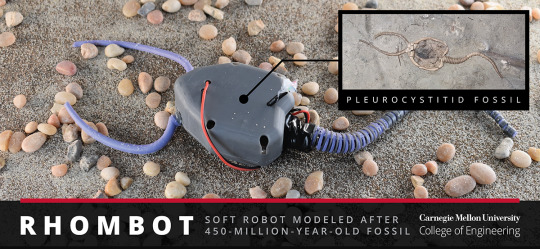

Researchers in the Department of Mechanical Engineering used fossil evidence to engineer a soft robotic replica of pleurocystitids, a marine organism that existed nearly 450 million years ago and is believed to be one of the first echinoderms capable of movement using a muscular stem.

52 notes

Text

bear mtn park, zoo & museum, ny - canon demi c & 400 iso color film - developed at eliz digital & scanned with minolta dimage dual iii

#35mm#bear mountain#park#zoo#museum#swan#birds#nature#animals#history#revolutionary war#museums#new york#hudson valley#hudson river#bridges#infrastructure#architecture#engineering#carnegie mellon university

16 notes

Text

Eco-friendly conductive ink promises to revolutionize the production of soft stretchable electronic circuits

Researchers at the Faculty of Science and Technology of Universidade de Coimbra (FCTUC) and Carnegie Mellon University have developed a water-based conductive ink tailored for producing flexible electronic circuits.

The technique, developed with Carnegie Mellon Portugal Program's (CMU Portugal) support, sidesteps the necessity of employing conventional organic solvents, renowned for their detrimental environmental impact due to pollution and toxicity. The results have been published in the journal Advanced Science.

By being water-based, this ink is more sustainable and ecological and significantly reduces the environmental impact of existing solutions. On-skin bio stickers to monitor patients' health or recyclable smart packages with integrated sensors for monitoring the safe storage of perishable foods are among the possible uses.

Manuel Reis Carneiro, a doctoral student in the CMU Portugal program, is part of the team led by Mahmoud Tavakoli, which already has extensive experience developing stretchable electronic circuits efficiently, quickly, and cheaply.

Read more.

#Materials Science#Science#Ink#Conductivity#Electronics#Circuits#Wearable technology#Environment#Carnegie Mellon University

23 notes

Text

cmu puzzle hunt spring 2024 is a wrap :) i had a lot of fun with it as well! the team i hunted with got 8th place which is higher than any team i've been on before

i contributed the bookshelf of tchotchkes that is featured in the wrapup which i won't paste here because it's full of hunt spoilers

if you're thinking of solving it, the tiling room round is quite a nice round that shouldn't be too hard (except maybe the cryptics :/). and the diy room round was a proper doozy

now maybe i should actually get some work done

#puzzle#puzzle hunt#cmu puzzle hunt#carnegie mellon university#i love the arbitrary blue and yellow department store

4 notes

Photo

A very old image from my film days - Carnegie Mellon University, Porter Hall - back when it still had ivy, circa 1970. Something about that old film look - digitized from a 35mm slide, Pentax Spotmatic, some short tele 135mm?, Kodak Ektachrome 400

#carnegie mellon#cmu#film#35mm#analogue#original photography#carnegie mellon university#photographers on tumblr#lensblr#luxlit#film photography

39 notes

Text

42 notes

Text

Does it count as conflict of interest if the HR Person in charge of my hiring also gets a bonus if I’m hired? Carnegie Mellon has some weird policies

#legal#legal advice#university#carnegie mellon university#Human Resources#im steamed bc i got lied to and THEN realized that there was in fact motive#law help#university help#edit: so I was told the wrong location for my job by this hr person#which lasted until a week before my hire#no I don’t have written evidence#I’m not tryna pin them on miscommunication#im curious if conflict of interest applies when their decision making stands to benefit them financially#or if this is totally legit

3 notes

Text

Carnegie Mellon University

Introduction to Carnegie Mellon University

Carnegie Mellon University (CMU) is a prestigious institution known for its innovative and transformative approach to education. Nestled in Pittsburgh, PA, CMU is a private research university that consistently ranks among the top universities globally. It holds a significant place in the academic world, offering a range of programs and fostering an…

2 notes

Text

Lipid nanoparticles engineered to specifically target pancreas in mouse model

Artistic representation of a lipid nanoparticle containing mRNA. Credit: iStock

Therapeutics that use mRNA—like some of the COVID-19 vaccines—have enormous potential for the prevention and treatment of many diseases. These therapeutics work by shuttling mRNA “instructions” into target cells, providing them with a blueprint to make specific proteins. These proteins could help tissues to…

#Cancer#Carnegie Mellon University#Genesis Nanotechnology#mRNA Therapeutics#Nano-Bio#Nanomaterials#Nanotechnology

3 notes

Text

exploring the CFA today.

#moodboard#mood board aesthetic#mood board#neutral mood board#neutral#my photography#my photos#my photo#academia inspo#academia aesthetic#academic#academia#fine art#fine arts#college of fine arts#musician#music student#carnegie mellon university#Carnegie Mellon#pittsburgh#university#musician aesthetic#student aesthetic#art student#art student aesthetic

7 notes

Text

Some Cool Details About Llama 3

New Post has been published on https://thedigitalinsider.com/some-cool-details-about-llama-3/

Solid performance, new tokenizer, fairly optimal training and other details about Meta AI’s new model.

Created Using Ideogram

Next Week in The Sequence:

Edge 389: In our series about autonomous agents, we discuss the concept of large action models (LAMs). We review the LAM research pioneered by the team from Rabbit, and we dive into the MetaGPT framework for multi-agent systems.

Edge 390: We dive into Databricks’ new impressive model: DBRX.

You can subscribe to The Sequence below:

TheSequence is a reader-supported publication. To receive new posts and support my work, consider becoming a free or paid subscriber.

📝 Editorial: Some Cool Details About Llama 3

I had an editorial prepared for this week’s newsletter, but then Meta AI released Llama 3! Such are the times we live in. Generative AI is evolving on a weekly basis, and Llama 3 is one of the most anticipated releases of the past few months.

Since the debut of the original version, Llama has become one of the foundational blocks of the open source generative AI space. I prefer to use the term “open models,” given that these releases are not completely open source, but that’s just my preference.

The release of Llama 3 builds on incredible momentum within the open model ecosystem and brings its own innovations. The 8B and 70B versions of Llama 3 are available, with a 400B version currently being trained.

The Llama 3 architecture is based on a decoder-only model and includes a new, highly optimized tokenizer with a 128K-token vocabulary. This is quite notable, given that, with few exceptions, most large language models simply reuse the same tokenizers. The new tokenizer leads to major performance gains. Another area of improvement in the architecture is grouped query attention, which Llama 2 used only in its larger models and Llama 3 applies to both the 8B and 70B versions. Grouped query attention improves inference performance by letting groups of query heads share the same key and value heads, which shrinks the key-value cache. The context window has also increased.
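
One way to see why grouped query attention helps at inference time is to count the memory needed to cache keys and values when several query heads share one key/value head. The sketch below is only a back-of-the-envelope estimate: the layer count, head counts, head dimension, sequence length, and 16-bit storage are illustrative assumptions, not figures from this editorial.

```python
# Sketch: key-value cache size with and without grouped query attention.
# Every configuration number here is an assumption chosen for illustration.

def kv_cache_bytes(layers, kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Bytes needed to cache keys and values for one sequence."""
    # Factor of 2: each layer stores one key tensor and one value tensor.
    return 2 * layers * kv_heads * head_dim * seq_len * bytes_per_value

LAYERS = 32        # assumed number of transformer layers
QUERY_HEADS = 32   # assumed number of query heads per layer
KV_HEADS = 8       # assumed number of shared key/value heads per layer
HEAD_DIM = 128     # assumed dimension of each head
SEQ_LEN = 8192     # assumed sequence length in tokens

# Standard multi-head attention keeps one key/value head per query head.
mha_bytes = kv_cache_bytes(LAYERS, QUERY_HEADS, HEAD_DIM, SEQ_LEN)
# Grouped query attention keeps only KV_HEADS key/value heads.
gqa_bytes = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, SEQ_LEN)
queries_per_kv_head = QUERY_HEADS // KV_HEADS

print(f"Multi-head cache:    {mha_bytes / 2**30:.1f} GiB")
print(f"Grouped query cache: {gqa_bytes / 2**30:.1f} GiB")
print(f"Query heads sharing each key/value head: {queries_per_kv_head}")
```

Under these assumptions the cache shrinks by the same factor as the number of query heads per key/value head, which is why the technique matters most for long contexts and large batches.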

Training is one area in which Llama 3 drastically improves over its predecessors. The model was trained on 15 trillion tokens, making the corpus quite large for an 8B parameter model, which speaks to the level of optimization Meta achieved in this release. It’s interesting to note that only 5% of the training corpus consisted of non-English tokens. The training infrastructure utilized 16,000 GPUs, achieving a throughput of more than 400 TFLOPS per GPU, which is nothing short of monumental.
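
To put "quite large for an 8B parameter model" in numbers, the sketch below divides the token count by the parameter count. The 15 trillion tokens and 8B parameters come from the text above; the comparison point of roughly 20 tokens per parameter is an assumption, a commonly cited compute-optimal rule of thumb rather than anything stated in this editorial.

```python
# Sketch: training tokens per parameter for the 8B model.

TRAINING_TOKENS = 15 * 10**12    # 15 trillion tokens (from the editorial)
PARAMS_8B = 8 * 10**9            # 8 billion parameters (from the editorial)
RULE_OF_THUMB_RATIO = 20         # assumption: commonly cited tokens-per-parameter heuristic

def tokens_per_param(tokens, params):
    """Average number of training tokens seen per model parameter."""
    return tokens / params

ratio_8b = tokens_per_param(TRAINING_TOKENS, PARAMS_8B)
multiple_of_heuristic = ratio_8b / RULE_OF_THUMB_RATIO

print(f"Tokens per parameter: {ratio_8b:.0f}")
print(f"Multiple of the assumed heuristic: {multiple_of_heuristic:.2f}x")
```

The ratio works out to 1,875 tokens per parameter, far beyond the assumed heuristic, which is consistent with the editorial's point that the corpus is unusually large for a model of this size.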

Llama 3 is a very welcome addition to the open model generative AI stack. The initial benchmark results are quite impressive, and the 400B version could rival GPT-4. Distribution is one area where Meta excelled in this release, making Llama 3 available on all major machine learning platforms. It’s been just a few hours, and we are already seeing open source innovations using Llama 3. The momentum in the generative AI open models space definitely continues, even if it forced me to rewrite the entire editorial. 😊

🔎 ML Research

VASA-1

Microsoft Research published a paper detailing VASA-1, a framework for generating talking faces from static images and audio clips. The model is able to generate facial gestures such as head or lip movements in a very expressive way —> Read more.

Zamba

Zyphra published a paper introducing Zamba, a 7B SSM model. Zamba introduces a new architecture that combines Mamba blocks with attention layers, which leads to high performance in training and inference with lower computational resources —> Read more.

MEGALODON

AI researchers from Meta and Carnegie Mellon University published a paper introducing MEGALODON, a new architecture that can scale to virtually unlimited context windows. As its name indicates, MEGALODON is based on the MEGA architecture with an improved gated attention mechanism —> Read more.

SAMMO

Microsoft Research published a paper detailing Structure-Aware Multi-objective Metaprompt Optimization (SAMMO), a framework for prompt optimization. The framework is able to optimize prompts for scenarios such as RAG or instruction tuning —> Read more.

Infini-Attention

Google Research published a paper introducing Infini-Attention, a method to scale the context window in transformer architectures to virtually unlimited levels. The method adds a compressive memory to the attention layer, which makes it possible to build long-term and masked local attention into a single transformer block —> Read more.

AI Agents Ethics

Google DeepMind published a paper discussing ethical considerations in AI assistants. The paper covers aspects such as safety alignment, safety, and misuse —> Read more.

🤖 Cool AI Tech Releases

Llama 3

Meta AI introduced the highly anticipated Llama 3 model —> Read more.

Stable Diffusion 3

Stability AI launched the APIs for Stable Diffusion 3 as part of its developer platform —> Read more.

Reka Core

Reka, an AI startup built by former DeepMind engineers, announced its Reka Core multimodal models —> Read more.

OpenEQA

Meta AI released OpenEQA, a benchmark for visual language models in physical environments —> Read more.

Gemini Cookbook

Google open sourced the Gemini Cookbook, a series of examples for interacting with the Gemini API —> Read more.

🛠 Real World ML

AI Privacy at Slack

Slack discusses the architecture enabling privacy capabilities in its AI platform —> Read more.

📡AI Radar

Andreessen Horowitz raised $7.2 billion in new funds with a strong focus on AI.

Meta AI announced its new AI assistant based on Llama 3.

The Linux Foundation announced the Open Platform for Enterprise AI to foster enterprise AI collaboration.

Amazon Bedrock now supports Claude 3 models.

Boston Dynamics unveiled a new and impressive version of its Atlas robot.

Limitless, formerly Rewind, launched its AI-powered Pendant device.

Brave unveiled a new AI answer engine optimized for privacy.

Paraform, an AI platform that connects startups to recruiters, raised $3.6 million.

Poe unveiled multi-bot chat capabilities.

Thomson Reuters will expand its AI Co Counsel platform to other industries.

BigPanda unveiled expanded context analysis capabilities for its AIOps platform.

Evolution Equity Partners closed a $1.1 billion fund focused on AI and cybersecurity.

Salesforce rolled out Slack AI to its paying customers.

Stability AI announced that it is laying off 10% of its staff.

Dataminr announced ReGenAI focused on analyzing multidimensional data events.

#000#agent#agents#ai#AI AGENTS#ai assistant#ai platform#AI-powered#Amazon#Analysis#API#APIs#architecture#attention#attention mechanism#audio#benchmark#billion#bot#Carnegie Mellon University#claude#claude 3#Collaboration#cybersecurity#data#databricks#dbrx#DeepMind#details#Developer

0 notes

Text

1 note

Text

From 2D to 3D: MXene's path to revolutionizing energy storage and more

With a slew of impressive properties, transition metal carbides, generally referred to as MXenes, are exciting nanomaterials being explored in the energy storage sector. MXenes are two-dimensional materials that consist of flakes as thin as a few nanometers.

Their outstanding mechanical strength, ultrahigh surface-to-volume ratio, and superior electrochemical stability make them promising candidates as supercapacitors—that is, as long as they can be arranged in 3D architectures where there is a sufficient volume of nanomaterials and their large surfaces are available for reactions.

During processing, MXenes tend to restack, compromising accessibility and impeding the performance of individual flakes, thereby diminishing some of their significant advantages. To circumvent this obstacle, Rahul Panat and Burak Ozdoganlar, along with Ph.D. candidate Mert Arslanoglu, from the Mechanical Engineering Department at Carnegie Mellon University, have developed an entirely new material system that arranges 2D MXene nanosheets into a 3D structure.

Read more.

7 notes

Photo

(via On the bumpy bus ride of tracking myself down.) This was the day I realized that there was something very, very suspicious about how, where and to whom I was born. It began my lifelong quest to uncover some startling truths.

#adopted#pittsburgh#division of statistics#Allegheny#birth certificate#north huntington#downtown Pittsburgh#CMU#Carnegie Mellon#Carnegie Mellon University#Hunt Library#Seventh Avenue Pittsburgh PA#Department of Commerce Building#bus ride#PAT bus#Port Authority#Port Authority Transit#Port Authority Transit of Allegheny County#sunny day#gorgeous day

0 notes

Text

The Weight of the Inside of the Body

Saskia Hamilton, 1967–2023

Last week was Pongo Poetry Project's annual gala/fundraiser, Speaking Volumes, and during the event the guest poets—Arianne True, Shin Yu Pai, and Quenton Baker—were asked to name someone who was influential to their writing and their interest in poetry.

As the poets talked, I thought about Saskia Hamilton, who died this past summer at only 56. What a terrible loss.

It's both a blessing and a curse to be less extremely online than I once was.* On the one hand, social media can be corrosive; on the other hand, social media can help one stay on top of the news. I only heard about Saskia's death a few weeks ago, when I logged into Instagram for the first time in ages (somehow I'd missed the Times obit). I was, to put it mildly, super bummed.

Saskia was the first writing teacher I really looked up to—I took a workshop with her when she was at Kenyon between 2000–2001—and though we fell out of regular touch, I eagerly read her books as soon as they were published.

In addition to being a talented poet and editor, Saskia was, more importantly when I was 20/21 years old, cool: she invited our workshop to her apartment in Mt. Vernon one night, and I drank a Grolsch; she grew up with Guy Picciotto of Fugazi (my favorite band!!!) & so knew the band well & we drove from Gambier to Carnegie Mellon to see them play one Saturday morning (something I've written about previously); when she taught at Kenyon she tended to wear lots of black, effortlessly; and when I was applying to grad school, she told me to stay in NYC and not decamp for Minnesota, advice I ignored. Maybe I shouldn't have?

The older I get, the more of my heroes I say goodbye to. The good news, amen, I suppose, is that their work remains, and with it some small part of them. Here's her poem "Zwijgen," from Corridor:

I slept before a wall of books and they

calmed everything in the room, even

their contents, even me, woken

by the cold and thrill, and still

they said, like the Dutch verb for falling

silent that English has no accommodation for

in the attics and rafters of its intimacies.

...

*Thanks to Musk & Zuckerberg—erstwhile combatants—for driving me from their respective websites.

Title taken from Saskia's poem of the same name, which opens her collection Divide These.

Header image of Fugazi, mainly Ian MacKaye here, playing "Waiting Room" during the CMU show. Here's the full (short) video from which the header screencap is taken:

0 notes

Text

Train Robot Arms with a Hands-Off Approach

Researchers at Carnegie Mellon University (CMU) have developed a method for training robot arms using artificial intelligence. Doctoral students Alison Bartch and Abraham George used a virtual reality simulation in which the robot learned a simple task, such as picking up a block. The robot's movements were supplemented with “human-like” examples,…

0 notes