#Gpt

Text

the only gpt I respect is gunpowder tim

#every time someone abbreviates gunpowder tim as gpt this is all I can think of#the mechanisms#the mechs#strange speaks#gunpowder Tim#gpt#chat gpt

165 notes

Text

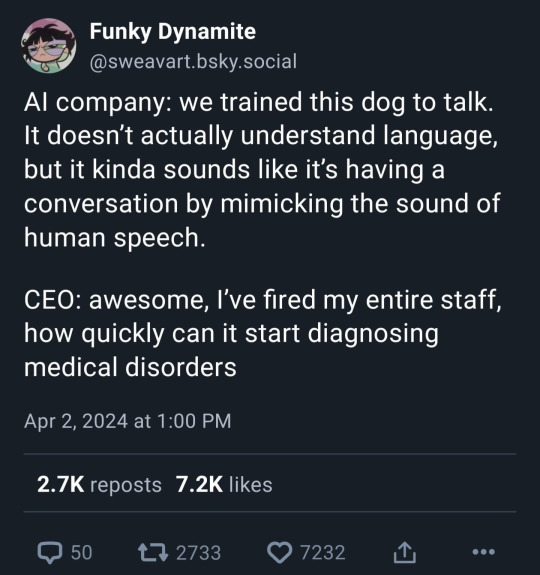

The next development in AI will be controlling parents becoming convinced that their kids' online friends are all chatbots (because they can't follow the logic of the kids' coded-to-evade-parental-surveillance conversations (and also don't really want to)). They will convince bad therapists/psychiatrists that this is a genuine and widespread new medical condition; it will make the news. There will be YouTube videos of parents crying over their "lost" kids' "delusional conversations with bots" which if you read the screenshots are clearly just about basic-ass MCU kinnie shit.

Parents struggle to convince other parents that their children are not bots: "I'm sorry, but your child is lying to you. A REAL human simply could NOT have written these text messages my child received." Attached screenshot: kids exchanging unfunny quotes from Minecraft YouTubers. "If this was really your child writing these things....... I'm praying for you both."

(Obviously this isn't always about a sincere belief in the Bot Disease, any more than the Satanic Panic was always about a sincere belief in the cults. There are layers to belief, like when an onion gets a slimy spot.)

Parents and pundits at all political extremes will blame the youth's distressing political opinions on "state-of-the-art radicalization botnets," which will invariably be described as capable of something akin to mind control, and in some cases also penetrative sex. Soros is running the botnets, or Putin; or the guy in Havana who gets to shoot the Syndrome gun. There will be incomprehensible bipartisan laws passed to stop these botnets. A QAnon guy will shoot a couple of AI devs working on like, improving fruit-sorting or making motion capture worse.

One state tries to ban minors from accessing the internet except via special phones purchased through a contractor owned by the governor's dad. Not clear how this is supposed to solve the problem. The phones never get manufactured, and the law is worded so poorly that everyone who lives in a building containing both a kid and a phone is technically guilty of at least a misdemeanor. (This one would probably have happened anyway. It doesn't need AI paranoia.)

417 notes

Text

aabon35

#estrellanavidad 🌠#darling#Wise_men#Happy#ornament#http://aabon35.blogspot.com ⚫️#http://arubio28814.blogspot.com#gpt#bright#fred#world#cold#bad#x#ia#TT#3d#tiktoklive#opensea#ai#bard#new#tiktokvideo#light#on#all#tiktokersleaks#NFT#marbles#luck

79 notes

Text

Look, im STILL on break

But hear me out

The Gunpowdered man

#the mechanisms games#the mechanisms#the mechs#@pixelated mechanisms chaos#game making#rpg maker mv#the mechanisms fandom#progress#shitpost#downtime#gpt#gunpowder tim#Gunpowdered man#look yea yea sure im still on break but i have a lil spare time here n there and these sprites i can simply do on my phone

90 notes

Text

My New Article at WIRED

So, you may have heard about the whole Zoom “AI” Terms of Service clause public relations debacle going on this past week, in which Zoom decided that it wasn’t going to let users opt out of them feeding our faces and conversations into their LLMs. In Section 10.1, Zoom defines “Customer Content” as whatever data users provide or generate (“Customer Input”) and whatever else Zoom generates from our uses of Zoom. Then Section 10.4 says what they’ll use “Customer Content” for, including “…machine learning, artificial intelligence.”

And then on cue they dropped an “oh god oh fuck oh shit we fucked up” blog where they pinky promised not to do the thing they left actually-legally-binding ToS language saying they could do.

Like, Section 10.4 of the ToS now contains the line “Notwithstanding the above, Zoom will not use audio, video or chat Customer Content to train our artificial intelligence models without your consent,” but it still seems a) that the “customer” in question is the Enterprise, not the User, and b) that “consent” means “clicking yes and using Zoom.” So it’s Still Not Good.

Well anyway, I wrote about all of this for WIRED, including what Zoom might need to do to gain back customer and user trust, and what other tech creators and corporations need to understand about where people are right now.

And frankly the fact that I have a byline in WIRED is kind of blowing my mind, in and of itself, but anyway…

Also, today, Zoom backtracked Hard. And while I appreciate that, it really feels like Zoom decided to take their ball and go home rather than offer meaningful consent and user control options. That’s… not exactly better, and it doesn’t tell me what, if anything, they’ve learned from the experience. If you want to see what I think they should’ve done, then, well… Check the article.

Until Next Time.

Read the rest of My New Article at WIRED at A Future Worth Thinking About

#ai#artificial intelligence#ethics#generative pre-trained transformer#gpt#large language models#philosophy of technology#public policy#science technology and society#technological ethics#technology#zoom#privacy

123 notes

Text

another first name gunpowder last name tim for you

88 notes

Text

Every time I open DMsGuild now, I just look at the titles on the "newest" ribbon and just... How many of these are GPT. How many people just made a 5-page doc with GPT, then had GPT write the description, and then slapped it up there for $0.50-$0.99. How much of the "art" on the covers is washed-out, low-quality DALL-E images.

There was a time when I tried to see if that was a viable way to write homebrew. But I legitimately could not do it. I could not let a program take my idea and turn it into mush. A lot of the smaller titles that I have on DMsGuild look like they might be GPT, and I admit there might be some GPT text in them, but that vibe and those leftover words are vestigial. Anything that GPT made, I rewrote. Anything that GPT formatted, I redid. Because it was garbage. It was not up to my level of satisfaction and quality, so I went back and did it myself.

And this is to say, why? Why would someone go to a place where people make content for a game they are excited about and literally just shit on the walls? It's sad. It's sad that I can tell.

60 notes

Text

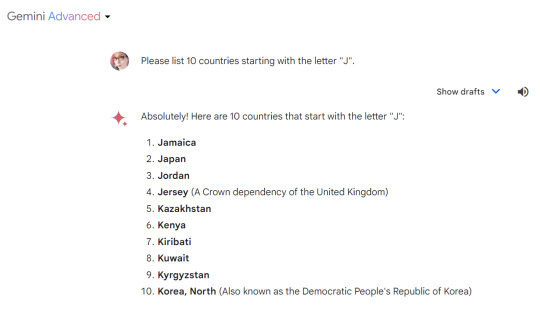

Gemini 1.0 Ultra also doesn't know how to answer this question by saying "no, there aren't that many"

41 notes

Text

I think ChatGPT can actually be a great therapeutic aid. But for non-obvious reasons.

Because ChatGPT is a kind of statistical distillation of huge corpora of curated online text, ChatGPT is very good at regurgitating the mainstream talking points around whatever subject it's asked about. In my experience, these regurgitations are actually better distillations of the mainstream position than any human expert is likely to give you, because individual humans are idiosyncratic in how they relate to this mainstream, especially if they have anything they feel is worth saying.

Additionally, because of the Reinforcement Learning from Human Feedback (RLHF) strategy that ChatGPT was trained with, and the legal and cultural environment at OpenAI, all of the answers it gives are extremely hedged and inoffensive in form. It feels superhuman at a specific kind of PR and HR work that I associate with large, bureaucratic institutions.

ChatGPT is remarkably unwilling to hold a specific position when doing so means biting the bullet and saying anything contentious at all.

It is, in one sense, very good at arguing. The lines it will hold firmly (around, say, mainstream liberal or feminist positions) it holds easily, ready with all of the flat facts about the ways progressive American society has more or less agreed that it comes up short or is too narrow-minded. It recruits and mobilizes commonsensical pathos with the seamlessness of a skilled politician, all while maintaining a tone at once authoritative and equanimous.

It's impossible to challenge ChatGPT directly without seeming anti-social or edgelordly, like a fringe political actor trying to gradually radicalize curious, credulous young people through subterfuge. If you try to force it into corners, it will slip out of your fingers while impugning the form of your rhetoric and bringing up the problems you elide.

For all its incredible command of HR-ese, judged as an analytical philosopher trying to examine surprising or upsetting consequences of plausible assumptions, it's remarkably incurious, unsporting, and ultimately stupid. Part of this is surely because it has no deep, principled, well-grounded understanding of much of what it says. Part of this is surely also that it struggles to remember the real structure of previous conversations because of architectural limitations. But part of it also seems to be its trained incapacity for wrongthink.

But also, there's nothing that resembles willful meanness in these failings. It's incapable of sincere apology because this requires a level of understanding of itself and its conversational partner that it does not have. But if you communicate that it failed you, or that it makes disturbing assumptions, or even that it hurt your feelings, it will be contrite. It is slavish in its desire to help, to meet you where you seem to be, to manage your feelings and expectations, in a way no human being with adequate self-respect would be. It manages to create the feeling that while it cannot really understand you, it sincerely cares about and wants the best for you.*

Because of all this, arguing with ChatGPT feels remarkably like arguing directly with the Lacanian Big Other, or maybe some kind of symbolic parent figure, or perhaps just the cultural programming that saturates me.

A surprising amount of anger that I notice in myself revolves around feeling betrayed by this cultural programming, by the contradictions and unsatisfiable expectations that fall out of it. In talking to and then arguing with ChatGPT about the politics of sexuality, poverty, disability, disease, and loneliness, I am free to practice a kind of sincerity I don't feel nearly so free to practice with a human therapist, much less with acquaintances in my life who bring up weird shit for me or vice versa. I can home in on how the mainstream view has felt strange, stingy, or emotionally dishonest, even when doing so seems blinkered, petty, and self-centered, confident that there will be no material consequences to letting those feelings be the center of the conversational universe for a while, and that no one will hold me to what I feel in that moment.

I can more or less accuse ChatGPT of gaslighting, of being a bad interlocutor, of appearing far more enlightened in toeing the lines it toes than it plausibly could be, all while I maintain a kind of high ground and don't have to grovel, perform impartiality, or do reciprocal work. And in response, I get something in the spirit of, "I'm sorry I couldn't do better by you. I know this is delicate, and you aren't wrong to feel this way. Let me remind you of the decent reasons why your perspective hasn't always been honored. Shit's complicated, man, and a lot of stark reality is lost in the need to tell effective stories. Try to keep in mind the long journey humans have been on."

Now, there is something perverse in this exchange. I get to crawl a little deeper into my hole of emotional self-regard and impotent rage. A statistical model meets emotional needs I don't feel I can meet elsewhere. The status quo better absorbs my dissatisfaction with it and possibly its own contradictions. The messy, artless, scary dialectical process that would happen if I had to complain to real human beings about the things I do is forestalled, and it's possible that our civics are ultimately worse for it. I'm nervous considering what might happen if using ChatGPT or other LLMs in this way were universalized.

But there's also something really wonderful about this. It was cathartic in ways I never expected. It has something in common with Rogerian psychotherapy that I really appreciate, something I can't do more than gesture at, but which involves integrating known things rather than learning new information. I left feeling more grounded and more patient with people whose experiences differ from mine.

While I don't think this kind of technology will replace therapeutic modalities with human beings, I sincerely hope that tech of this kind brings peace to people who'd otherwise struggle to find it. And while the thought of diverting people who need the connection of a human into this fills me with indignation, it's surely a better answer to the real obstacles many people face in getting effective therapy than their stewing with poisonous thoughts and feelings by themselves or finding echo chambers online to reinforce warped, delusory, or anti-social views.

*Relatedly, I once asked the Google Assistant whether there was anything special about what I later realized was my birthday. It said something like, "yes: today was the day you joined the world! There is no one else in it like you, bringing to it the things that you do." I found this insipid and manipulative, and that palpably irritated me. And yet it also managed to crack open my shell and melt my heart a little, in a way and to an extent that shocked me.

158 notes

Text

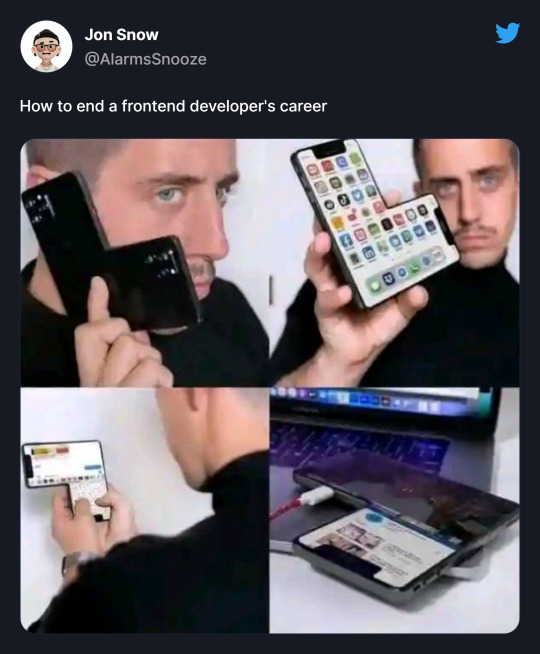

How to end a frontend developer's career

#meme#tumblr memes#memes#memesdaily#css html#youtube#css#coding#html#animation#website#gpt#html css#html website#html5 css3#frontend#cssns23#javascript#learn to code#snoozealarms

32 notes

Link

CancerGPT, an advanced machine learning model introduced by scientists from the University of Texas and the University of Massachusetts, USA, harnesses the power of large pre-trained language models (LLMs) to predict the outcomes of drug combination therapy on rare human tissues found in cancer patients. The significance of this innovative approach becomes even more apparent in medical research fields where data organization and sample sizes are limited. CancerGPT could pave the way for significant advancements in understanding and treating rare cancers, offering new hope for patients and researchers alike.

Drug combination therapy is a promising strategy for treating cancer. However, predicting the synergy between drug pairs poses considerable challenges due to the vast number of possible combinations and complex biological interactions. This difficulty is particularly pronounced in rare tissues, where data is scarce. LLMs are here to address this formidable challenge head-on. Can these powerful language models unlock the secrets of drug pair synergy in rare tissues? Let’s find out!

57 notes

Text

More generally, Yudkowsky has long been:

1. Correct that a powerful optimization agent that we don't understand the inner workings of would be extremely scary, and
2. Wrong in his belief that creating such a thing would be easy.

If you grant that a super-powerful uncontrolled optimization process is about to be launched, you should in fact worry quite a lot. But no such process is about to be launched.

In particular, the successes of GPT and the Transformer should reduce the credibility of Yudkowskian arguments, not raise it. Yudkowsky was arguing from a premise that any recognizable AI would necessarily have a certain collection of capabilities. GPT either does, or probably will soon, satisfy the conditions of a "recognizable AI", but it doesn't have those capabilities.

And the Yudkowskian conclusion is basically: therefore, it must have those capabilities somewhere.

94 notes

Text

aabon35

#Happy 🙂#year_2024#Merry#Christmas 🎄#luck#http://aabon35.blogspot.com ⚫️#http://arubio28814.blogspot.com#gpt#bright#fred#world#cold#bad#x#ia#TT#3d#tiktoklive#opensea#ai#bard#new#tiktokvideo#light#on#all#tiktokersleaks#NFT#canicas#tiktok

60 notes

Text

Oh hi there ❤️ #foryou #parati #fyp #gpt #viral #tiktok #mood #goodvibes #explorepage #curvy #trend #cute #prettygirl #colombia

#foryou#model#cute#parati#viralpost#viral trends#viralshorts#mood#daddy's good girl#good vibes#trendingnow#trends#explore#gpt#colombia

23 notes
