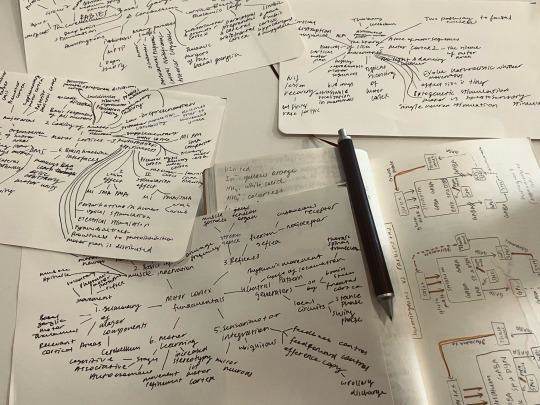

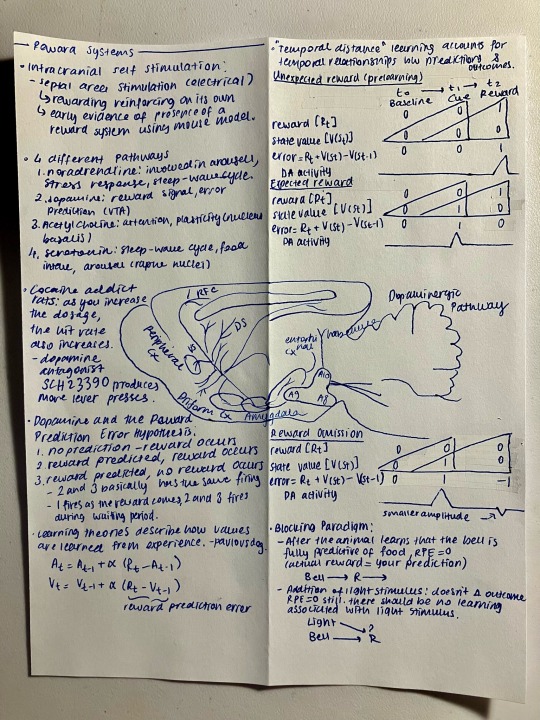

#neural science

Text

Yeah man ofc u can borrow my notes (if u can read them)

#chaotic notes#chaotic studyblr#study notes bc i cant draw often bc college is mean#stem studyblr#stem academia#stem aesthetic#stemblr#stem#study aesthetic#studyinspo#stem student#study blog#studyspo#study motivation#study notes#studyblr#chaotic academic aesthetic#chaotic academia#psychology notes#neural science#mind maps#too many mind maps#fountain pen#pencil#messy notes

509 notes


Text

Interesting Papers for Week 30, 2023

Adult-born neurons inhibit developmentally-born neurons during spatial learning. Ash, A. M., Regele-Blasco, E., Seib, D. R., Chahley, E., Skelton, P. D., Luikart, B. W., & Snyder, J. S. (2023). Neurobiology of Learning and Memory, 198, 107710.

Behavioral origin of sound-evoked activity in mouse visual cortex. Bimbard, C., Sit, T. P. H., Lebedeva, A., Reddy, C. B., Harris, K. D., & Carandini, M. (2023). Nature Neuroscience, 26(2), 251–258.

Exploration patterns shape cognitive map learning. Brunec, I. K., Nantais, M. M., Sutton, J. E., Epstein, R. A., & Newcombe, N. S. (2023). Cognition, 233, 105360.

Distinct contributions of ventral CA1/amygdala co-activation to the induction and maintenance of synaptic plasticity. Chong, Y. S., Wong, L.-W., Gaunt, J., Lee, Y. J., Goh, C. S., Morris, R. G. M., … Sajikumar, S. (2023). Cerebral Cortex, 33(3), 676–690.

An intrinsic oscillator underlies visual navigation in ants. Clement, L., Schwarz, S., & Wystrach, A. (2023). Current Biology, 33(3), 411-422.e5.

Not so optimal: The evolution of mutual information in potassium voltage-gated channels. Duran-Urriago, A., & Marzen, S. (2023). PLOS ONE, 18(2), e0264424.

Successor-like representation guides the prediction of future events in human visual cortex and hippocampus. Ekman, M., Kusch, S., & de Lange, F. P. (2023). eLife, 12, e78904.

Residual dynamics resolves recurrent contributions to neural computation. Galgali, A. R., Sahani, M., & Mante, V. (2023). Nature Neuroscience, 26(2), 326–338.

Dorsal attention network activity during perceptual organization is distinct in schizophrenia and predictive of cognitive disorganization. Keane, B. P., Krekelberg, B., Mill, R. D., Silverstein, S. M., Thompson, J. L., Serody, M. R., … Cole, M. W. (2023). European Journal of Neuroscience, 57(3), 458–478.

A striatal circuit balances learned fear in the presence and absence of sensory cues. Kintscher, M., Kochubey, O., & Schneggenburger, R. (2023). eLife, 12, e75703.

Hippocampal engram networks for fear memory recruit new synapses and modify pre-existing synapses in vivo. Lee, C., Lee, B. H., Jung, H., Lee, C., Sung, Y., Kim, H., … Kaang, B.-K. (2023). Current Biology, 33(3), 507-516.e3.

Neocortical synaptic engrams for remote contextual memories. Lee, J.-H., Kim, W. Bin, Park, E. H., & Cho, J.-H. (2023). Nature Neuroscience, 26(2), 259–273.

The effect of temporal expectation on the correlations of frontal neural activity with alpha oscillation and sensory-motor latency. Lee, J. (2023). Scientific Reports, 13, 2012.

Describing movement learning using metric learning. Loriette, A., Liu, W., Bevilacqua, F., & Caramiaux, B. (2023). PLOS ONE, 18(2), e0272509.

The geometry of cortical representations of touch in rodents. Nogueira, R., Rodgers, C. C., Bruno, R. M., & Fusi, S. (2023). Nature Neuroscience, 26(2), 239–250.

Contextual and pure time coding for self and other in the hippocampus. Omer, D. B., Las, L., & Ulanovsky, N. (2023). Nature Neuroscience, 26(2), 285–294.

Reshaping the full body illusion through visuo-electro-tactile sensations. Preatoni, G., Dell’Eva, F., Valle, G., Pedrocchi, A., & Raspopovic, S. (2023). PLOS ONE, 18(2), e0280628.

Experiencing sweet taste is associated with an increase in prosocial behavior. Schaefer, M., Kühnel, A., Schweitzer, F., Rumpel, F., & Gärtner, M. (2023). Scientific Reports, 13, 1954.

Cortical encoding of rhythmic kinematic structures in biological motion. Shen, L., Lu, X., Yuan, X., Hu, R., Wang, Y., & Jiang, Y. (2023). NeuroImage, 268, 119893.

Mindful self-focus–an interaction affecting Theory of Mind? Wundrack, R., & Specht, J. (2023). PLOS ONE, 18(2), e0279544.

#science#Neuroscience#computational neuroscience#Brain science#research#cognition#neurons#cognitive science#neural networks#neural computation#neurobiology#psychophysics#scientific publications

75 notes


Text

Tonight I am hunting down pictures of specific venomous and nonvenomous snake species that are under Creative Commons licenses, in order to create one of the most advanced, in-depth datasets of venomous and nonvenomous snakes, as well as a test set that will include snakes from both groups across all species. I love snakes a lot and really, all reptiles. It is definitely tedious work, as I have to make sure each picture is cleared before I can use it (ethically), but I am making a lot of progress! I have species such as the King Cobra, Inland Taipan, and Eyelash Pit Viper, to name just a few! Wikimedia Commons has been a huge help!

I'm super excited.

Hope your nights are going good. I am still not feeling good but jamming + virtual snake hunting is keeping me busy!

#programming#data science#data scientist#data analysis#neural networks#image processing#artificial intelligence#machine learning#snakes#snake#reptiles#reptile#herpetology#animals#biology#science#programming project#dataset#kaggle#coding

40 notes


Text

"In the oldest and most prestigious young adult science competition in the nation, 17-year-old Ellen Xu used a kind of AI to design the first diagnosis test for a rare disease that struck her sister years ago.

With a personal story driving her on, she managed an 85% rate of positive diagnoses with only a smartphone image, winning her $150,000 grand for a third-place finish.

Kawasaki disease has no existing test method, and relies on a physician’s years of training, ability to do research, and a bit of luck.

Symptoms tend to be fever-like and therefore generalized across many different conditions. Eventually if undiagnosed, children can develop long-term heart complications, such as the kind that Ellen’s sister was thankfully spared from due to quick diagnosis.

Xu decided to see if there were a way to design a diagnostic test using deep learning for her Regeneron Science Talent Search medicine and health project. Organized since 1942, every year 1,900 kids contribute adventures.

She designed what is known as a convolutional neural network, which is a form of deep-learning algorithm that mimics how our eyes work, and programmed it to analyze smartphone images for potential Kawasaki disease.

However, like our own eyes, a convolutional neural network needs a massive amount of data to be able to effectively and quickly process images against references.

For this reason, Xu turned to crowdsourcing images of Kawasaki’s disease and its lookalike conditions from medical databases around the world, hoping to gather enough to give the neural network a high success rate.

Xu has demonstrated an 85% specificity in identifying between Kawasaki and non-Kawasaki symptoms in children with just a smartphone image, a demonstration that saw her test method take third place and a $150,000 reward at the Science Talent Search."

-Good News Network, 3/24/23
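A quick aside on the numbers quoted above: a "rate of positive diagnoses" (usually called sensitivity) and "specificity" are not the same metric. Here is a minimal sketch of how the two are computed from a confusion matrix. The article gives no raw counts, so every number below is hypothetical and only there to show the arithmetic.

```python
def sensitivity(tp: int, fn: int) -> float:
    """Share of true Kawasaki cases that the test flags (true positive rate)."""
    return tp / (tp + fn)


def specificity(tn: int, fp: int) -> float:
    """Share of non-Kawasaki cases that the test correctly clears (true negative rate)."""
    return tn / (tn + fp)


# Hypothetical counts for illustration only; not taken from Xu's project.
tp, fn = 40, 10   # Kawasaki cases: caught vs. missed
tn, fp = 85, 15   # lookalike cases: cleared vs. falsely flagged

print(f"sensitivity = {sensitivity(tp, fn):.2f}")
print(f"specificity = {specificity(tn, fp):.2f}")
```

A test can score well on one of these and poorly on the other, which is why it matters which of the two the 85% refers to.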

#heart disease#pediatrics#healthcare#kawasaki#neural network#ai#science and technology#rare disease#medical tests#medical science#good news#hope

75 notes


Text

Tatyana O. Sharpee’s work in the realm of geometric cognition—a field dedicated to understanding how the brain interprets and represents spatial information—has yielded groundbreaking insights into how our minds map the world around us. Continuing her previous argument for hyperbolic geometry in neural circuits, the recent paper, co-authored with Huanqiu Zhang, P. Dylan Rich, and Albert K. Lee, sheds light on the hyperbolic geometry of hippocampal spatial representations.

The hippocampus, a crucial part of the brain involved in memory and spatial navigation, houses ‘place cells’—neurons that fire when an animal is in a particular location. The geometry of these spatial representations, however, has remained largely unknown. Breaking new ground, Sharpee and her team reveal that the hippocampus does not represent space according to a linear geometry, as might be expected. Instead, they discovered a hyperbolic representation.

We investigated whether hyperbolic geometry underlies neural networks by analyzing the responses of sets of neurons from the dorsal CA1 region of the hippocampus. This region is considered essential for spatial representation and trajectory planning.

Imagine holding a map of your city. A linear representation would be akin to the map’s scale, where an inch on paper corresponds to a fixed number of miles in reality. A hyperbolic representation, in contrast, changes the scale depending on where you are on the map. The implications of this discovery are profound. A hyperbolic representation provides more positional information than a linear one, potentially aiding in complex navigation tasks.

This hyperbolic representation isn’t static—it dynamically expands with experience. As an animal spends more time exploring its environment, the spatial representations in its brain expand. The expansion is proportional to the logarithm of time spent exploring, suggesting our brains continually refine our spatial maps based on our experiences. As we spend more time in an area, our mental map of that area becomes more detailed and expansive. This dynamic updating could be crucial for efficiently navigating familiar environments.
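To get a feel for why a hyperbolic map can hold more positional information than a flat one, compare how much "room" there is at distance r from a point in each geometry. This is only a geometric illustration, assuming a hyperbolic plane of curvature −1; it is not the analysis from the paper, and the function names are made up for the example.

```python
import math


def euclidean_circumference(r: float) -> float:
    """Circumference of a circle of radius r in the flat plane."""
    return 2 * math.pi * r


def hyperbolic_circumference(r: float) -> float:
    """Circumference of a circle of radius r in the hyperbolic plane (curvature -1)."""
    return 2 * math.pi * math.sinh(r)


# The ratio grows roughly exponentially with the radius.
for r in (0.5, 1.0, 2.0, 4.0):
    ratio = hyperbolic_circumference(r) / euclidean_circumference(r)
    print(f"r = {r}: hyperbolic / flat = {ratio:.2f}")
```

Since the available room grows roughly like e^r, a radius that increases only with the logarithm of exploration time still adds room at a power-law rate, which is one way to read the log-time expansion described above.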


#geometriccognition#cognitivegeometry#geometry#geometrymatters#science#research#academia#neural#hyperbolic#study

31 notes


Link

Scientists from the University of California San Diego School of Medicine and Rady Children’s Institute for Genomic Medicine have developed a method for identifying mosaic mutations using deep learning. The process involves training a model to analyze large amounts of genomic data and recognize patterns associated with mosaic mutations. The researchers hope that this approach will help increase our understanding of the genetic basis of disease and lead to the development of more effective treatments.

Genetic mutations can lead to a wide range of disorders that are often difficult to treat or understand. One type of mutation, called mosaic mutations, is particularly challenging to identify because it only affects a small percentage of cells. These mutations can cause a variety of disorders but have been challenging to detect due to their rarity.


#bioinformatics#deep learning#ai#neural networks#cancer#autism#mutation#diseases#scicomm#meded#sciencenews#science side of tumblr

75 notes


Text

"I've certainly become more persuaded that there is more than just the physical reality. I do think it's quite likely that if we do survive, that there's not just one experience that everyone has; that the afterlife may be as varied as life in this world."

Jim Tucker, psychiatrist at the University of Virginia, and author of "Return to Life: Extraordinary Cases of Children who Remember Past Lives."

#jim tucker#neural behavior science#psychiatry#science#research#psychology#character#personality#memories#past lives#subconscious#dreams#reincarnation#rebirth#physical realm#spiritual life#afterlife#hereafter#spirit#soul

14 notes


Text

Data Science meets the Many Body Problem

Since the machine learning course I took this semester at Tsinghua University was mainly focused on typical data science applications, I was curious how far those methods can be applied in physics. Of course it is nothing new that neural networks can in principle also be used for physical applications - however, tensor network methods still seem to be dominant in the field of numerical many body physics. Thus, I decided to dive a little into the literature on the use of Restricted Boltzmann Machines (RBMs) in many body physics.

What are RBMs?

RBMs are typically used for recommendation tasks (e.g. video recommendations on video platforms), among many other things. In general, an RBM is an unsupervised learning technique that works by minimizing its "energy". Thus, the intuition behind RBMs is, despite their data science applications, already related to physics: We will see that it is no surprise that "Boltzmann" is part of the name of this method. An RBM takes input data, tries to extract meaningful features from it, and aims to find the probability distribution over the input. The physical intuition is as follows: The RBM is a neural network with two layers, a hidden and a visible layer, where each node can take binary values. An example network looks like:

where the x denote the visible nodes, the h the hidden nodes and W denotes the weights between both layers. Note that there are no links in between the nodes of a single layer; this is why these networks are called restricted Boltzmann machines.

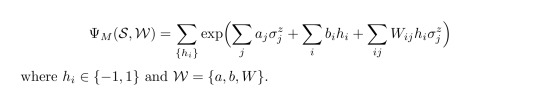

The network is governed by a corresponding energy function as:

where we also have the offsets of the individual nodes (a for the visible nodes and b for the hidden nodes). Given this energy function, one can determine the probability distribution from the Boltzmann distribution, where Z is a partition function, as familiar from classical statistical physics. As usual, the energy is supposed to be minimized, which is done by the learning algorithm of the RBM; this will not be explained here since it would go beyond the scope of a brief blog entry. At this point I'd only like to mention that there are some difficulties in determining e.g. the partition function (which is intractable in general) and that this requires some sophisticated algorithms. If you're interested in this and how RBMs work exactly, a neat and far more rigorous introduction to RBMs can be found here.
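For reference, in the notation of this post (visible nodes $x_i$, hidden nodes $h_j$, weights $W_{ij}$, offsets $a_i$ and $b_j$), the standard textbook form of the RBM energy and the corresponding Boltzmann distribution is given below. Sign and index conventions vary between sources, so take this as one common convention rather than a definitive statement of the formulas meant above:

$$
E(x, h) = -\sum_i a_i x_i - \sum_j b_j h_j - \sum_{i,j} x_i W_{ij} h_j,
\qquad
p(x, h) = \frac{e^{-E(x, h)}}{Z},
\qquad
Z = \sum_{x, h} e^{-E(x, h)}.
$$

The sum in $Z$ runs over every joint configuration of visible and hidden nodes, which is why it becomes intractable for larger networks.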

One side note at this point: RBMs were originally introduced by Paul Smolensky (under the name "Harmonium") and later popularized by Geoffrey Hinton, after John Hopfield (a physicist) invented the so-called Hopfield networks, which are also an energy-based mechanism built on the physical intuition of Ising models.
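As a concrete illustration of the energy function and Boltzmann distribution described above, here is a minimal sketch for a toy RBM that is small enough for the partition function to be brute-forced. The parameter values, the function names, and the sign convention E(x, h) = −a·x − b·h − xᵀWh are assumptions chosen for the example (a common textbook convention), not something taken from a specific reference.

```python
import itertools
import math

# Hypothetical toy parameters: 3 visible nodes, 2 hidden nodes.
a = [0.1, -0.2, 0.0]            # offsets of the visible nodes
b = [0.3, -0.1]                 # offsets of the hidden nodes
W = [[0.5, -0.4],               # W[i][j] couples visible node i to hidden node j
     [0.2, 0.1],
     [-0.3, 0.6]]


def energy(x, h):
    """Assumed convention: E(x, h) = -a.x - b.h - x^T W h."""
    visible = sum(ai * xi for ai, xi in zip(a, x))
    hidden = sum(bj * hj for bj, hj in zip(b, h))
    coupling = sum(x[i] * W[i][j] * h[j]
                   for i in range(len(x)) for j in range(len(h)))
    return -(visible + hidden + coupling)


def all_states(n):
    """All binary configurations of n nodes."""
    return list(itertools.product([0, 1], repeat=n))


# Brute-force partition function: feasible only because the network is tiny.
Z = sum(math.exp(-energy(x, h))
        for x in all_states(len(a)) for h in all_states(len(b)))


def joint_probability(x, h):
    """Boltzmann distribution p(x, h) = exp(-E(x, h)) / Z."""
    return math.exp(-energy(x, h)) / Z


def visible_probability(x):
    """Marginal over the hidden layer: p(x) = sum_h p(x, h)."""
    return sum(joint_probability(x, h) for h in all_states(len(b)))


total = sum(visible_probability(x) for x in all_states(len(a)))
print(f"Z = {Z:.4f}, probabilities sum to {total:.6f}")
```

With N visible and M hidden nodes the sum for Z has 2^(N+M) terms, so this brute-force approach stops being practical very quickly; that is the intractability mentioned above.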

Note that so far we have only talked about RBMs as they are used in data science - despite their physical intuition, they have so far had nothing to do with either quantum mechanics or the many body problem. This is what comes next.

How can this be linked to condensed matter?

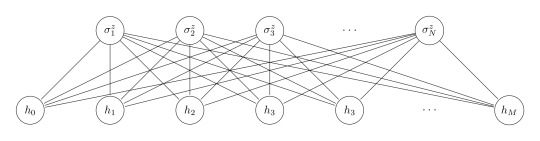

As introduced in [1], an RBM that can represent a quantum many body state would look like this:

In comparison to the previous network we changed the labels from x to σ, where the σ's denote e.g. spin 1/2 configurations, bosonic occupation numbers and so on. For them one has to choose a basis, e.g. the σ^z basis. This configuration can be summarized in the set S. Hence, the visible nodes are the N physical nodes of the system. The M hidden nodes h play the role of auxiliary (spin) variables. The authors describe the understanding of such a neural-network quantum state as follows: "The many-body wave function is a mapping of the N−dimensional set S to (exponentially many) complex numbers which fully specify the amplitude and the phase of the quantum state. The point of view we take here is to interpret the wave function as a computational black box which, given an input manybody configuration S, returns a phase and an amplitude according to Ψ(S)" [1, p.2]. Thus, one gives a certain spin configuration as input and the RBM generates the state, in the following form:
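For reference, the ansatz of [1] can be written as follows, with visible offsets $a_j$, hidden offsets $b_i$, weights $W_{ij}$ (here the first index labels the hidden node, following the paper), and hidden variables $h_i = \pm 1$. The parameters are complex in general, and $\mathcal{W}$ stands for the full set of them:

$$
\Psi_M(S; \mathcal{W}) = \sum_{\{h_i\}} \exp\Big( \sum_j a_j \sigma_j^z + \sum_i b_i h_i + \sum_{i,j} W_{ij} h_i \sigma_j^z \Big).
$$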

Thus, one can recognize that such a neural-network quantum state takes a form similar to the aforementioned Boltzmann distribution (exponential of an energy function). However, there is additionally a sum over all possible hidden configurations which specifies the full state. After setting up a state in this form, one aim could be to find the ground state corresponding to a certain Hamiltonian, and according to the authors of [1], their RBM method gives decent results for this task!
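Because the hidden nodes are not linked to each other, the sum over hidden configurations can be carried out by hand, which turns it into a product of cosh factors (this closed form is given in [1]). Here is a minimal sketch that checks the closed form against the explicit sum, assuming spin-1/2 variables σ = ±1, hidden variables h = ±1, and small made-up complex parameters; the function names are hypothetical.

```python
import cmath
import itertools

# Hypothetical toy parameters: N = 3 spins, M = 2 hidden nodes.
a = [0.1 + 0.2j, -0.3j, 0.05]       # visible offsets
b = [0.2, -0.1 + 0.1j]              # hidden offsets
W = [[0.3, -0.2j, 0.1],             # W[i][j] couples hidden node i to spin j
     [0.1j, 0.4, -0.3]]


def amplitude_closed_form(spins):
    """Psi(S) = exp(sum_j a_j s_j) * prod_i 2 cosh(b_i + sum_j W_ij s_j)."""
    prefactor = cmath.exp(sum(aj * s for aj, s in zip(a, spins)))
    product = 1
    for bi, row in zip(b, W):
        theta = bi + sum(wij * s for wij, s in zip(row, spins))
        product *= 2 * cmath.cosh(theta)
    return prefactor * product


def amplitude_brute_force(spins):
    """Same amplitude, summing explicitly over all hidden configurations."""
    total = 0
    for hidden in itertools.product([-1, 1], repeat=len(b)):
        exponent = sum(aj * s for aj, s in zip(a, spins))
        exponent += sum(bi * h for bi, h in zip(b, hidden))
        exponent += sum(W[i][j] * hidden[i] * spins[j]
                        for i in range(len(b)) for j in range(len(a)))
        total += cmath.exp(exponent)
    return total


# The two expressions should agree for every spin configuration.
for spins in itertools.product([-1, 1], repeat=len(a)):
    psi = amplitude_closed_form(spins)
    assert abs(psi - amplitude_brute_force(spins)) < 1e-12
    print(spins, f"|psi| = {abs(psi):.4f}")
```

The closed form costs a number of operations proportional to N times M per configuration, whereas the explicit sum has 2^M terms, which is what makes evaluating the "black box" Ψ(S) cheap in practice.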

Similarity to tensor networks

Interestingly, this framework (even though it appears very different) has some quantities analogous to those of tensor network states. For example, the representational quality of a neural-network quantum state can be increased by increasing the number of hidden nodes: Thus, the ratio M/N plays a similar role to the bond dimension of a tensor product state! There are many more similarities, which will not be discussed here but can be found in [3].

Nevertheless, I'd like to mention an important distinction, which is also crucial for tensor network states, because some algorithms (DMRG etc.) can only handle area law states properly. While volume law states have an entanglement entropy which scales with the volume of the partitions of a state, the entanglement entropy of an area law state scales only with the area of the cut. Area law states can be handled better numerically, because the bond dimension of tensor network states explodes for volume law states (more on tensor networks and the area law can be found in [4]). According to [2] the difference between area law states and volume law states can be captured in a neat way with RBM states: While volume law states must have full connections between the hidden and physical nodes, an area law state has fewer links - this imposes locality in a sense. The RBM states thus give a neat intuition for the difference between both kinds of states.

All in all, RBMs seem to be an interesting approach to connect data science methods and many body physics. It may be that they have strengths which the usual tensor network approaches lack: for instance, the authors of [2, p.888] claim that RBMs might be able to handle volume law states better than the usual tensor network approaches do, which would of course be a major benefit. Since I hadn't heard of this approach within the condensed matter framework before, I'm very curious how the importance of this method will evolve in future research!

---

References:

[1] Carleo, Troyer, Solving the Quantum Many-Body Problem with Artificial Neural Networks, arXiv:1606.02318

[2] Melko, Carleo, Carrasquilla, Cirac, Restricted Boltzmann machines in quantum physics, https://doi.org/10.1038/s41567-019-0545-1

[3] Chen, Cheng, Xie, Wang, Xiang, Equivalence of restricted Boltzmann machines and tensor network states, arXiv:1701.04831

[4] Hauschild, Pollmann, Efficient numerical simulations with Tensor Networks: Tensor Network Python (TeNPy), arXiv:1805.00055

#mysteriousquantumphysics#quantum physics#many body physics#education#studyblr#physics#physicsblr#machine learning#neural networks#artificial intelligence#women in science#tsinghua university

79 notes


Photo

I’ve been splicing animals with Stable Diffusion (v1.2 in an attempt to get more wildlife images and fewer paintings) using spherical lerping between the encodings of different animal names. The two animals are listed in the captions / alt text.

I do enjoy how the fluffy chicken / toad looks just as grumpy as a toad.
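For anyone curious what "spherical lerping" means in practice: instead of walking along the straight line between two embedding vectors, you walk along the arc between them, so the in-between vectors keep a sensible length. Below is a minimal pure-Python sketch with made-up 3-D vectors standing in for the text encodings. Real encodings are far larger, and this is an illustration of the general technique, not the exact code used for these images.

```python
import math


def slerp(v0, v1, t):
    """Spherical linear interpolation between vectors v0 and v1 for t in [0, 1]."""
    norm0 = math.sqrt(sum(c * c for c in v0))
    norm1 = math.sqrt(sum(c * c for c in v1))
    cos_omega = sum(x * y for x, y in zip(v0, v1)) / (norm0 * norm1)
    cos_omega = max(-1.0, min(1.0, cos_omega))   # guard against rounding error
    omega = math.acos(cos_omega)                 # angle between the two vectors
    if math.sin(omega) < 1e-6:
        # Nearly parallel or antiparallel: the arc is ill-defined, use plain lerp.
        return [(1 - t) * x + t * y for x, y in zip(v0, v1)]
    w0 = math.sin((1 - t) * omega) / math.sin(omega)
    w1 = math.sin(t * omega) / math.sin(omega)
    return [w0 * x + w1 * y for x, y in zip(v0, v1)]


# Hypothetical stand-ins for the two animal name encodings.
chicken = [1.0, 0.0, 0.0]
toad = [0.0, 1.0, 0.0]

halfway = slerp(chicken, toad, 0.5)
print(halfway)
```

For unit-length inputs like these, the halfway vector also has unit length, whereas a plain linear average of the two would be noticeably shorter.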

129 notes


Photo

Axon Ax-off

Like trees growing in the wild, the branches or axons of our nerve cells reach out as they develop. In the optic tract of a zebrafish, some branches of these retinal ganglion cells are ‘pruned’ to guide the accurate shape of circuits behind its eyes. Like snipping at a bonsai tree (although about 1000 times smaller), cells on one side of the tract (highlighted in red) send signals that help to cut off stray axons of cells on the other side (blue), leaving grouped or 'sorted' neurons behind (seen on the right). Researchers using CRISPR/Cas9 technology block the activity of one of the genes involved, gpc3, leaving the optic tract in a disorganised unpruned state (left and middle). Insight into this molecular tree surgery might influence therapies for the human brain, which also uses a form of gpc3 – offering a helping hand in shaping neural circuits that develop errant branches.

Written by John Ankers

Image from work by Olivia Spead, Trevor Moreland and Cory J. Weaver, and colleagues

Department of Biological Sciences, University of South Carolina, Columbia, SC, USA

Image originally published with a Creative Commons Attribution 4.0 International (CC BY 4.0)

Published in Cell Reports, February 2023


#science#biomedicine#axons#neurons#neuroscience#zebrafish#crispr#gene editing#retina#crispr/cas9#neural circuits#immunofluorescence

18 notes


Text

Should I start chronicling the insane shit I use chatgpt for here?

More specifically, should I live blog me building an AI hivemind using a bunch of stitched together open source Machine Learning algorithms?

I'm still just like, planning the hardware and shit out, so I wouldn't be doing anything with it for a while, but when I do I'll probably be showing off stuff like how the processors for each drone work and how many parts of what is effectively skynet are open source.

#maia the depressed wannabe robot girl posting#technology#artificial intelligence#science#robotics#crimes against tensor processing units#this thing is going to take so much work#did you know the reason most commercial drones don't run 12 different neural networks simultaneously is a good one?

8 notes


Text

3 am study session and a qifrey sketch (remember when this used to be an art account? Yeah me neither)

#study notes bc i cant draw often bc college is mean#chaotic studyblr#stem studyblr#study aesthetic#studyinspo#studyblr#study blog#studyspo#study motivation#study notes#neural science#psychology notes#brain anatomy#anatomy#biology#stem aesthetic#stem academia#stemblr#stem#stem student#psychology#procreate#witch hat atelier#witch hat fanart#witch hat qifrey#qifrey#chaotic notes#chaotic academic aesthetic#chaotic academia

51 notes


Text

Interesting Papers for Week 10, 2024

Children seek help based on how others learn. Bridgers, S., De Simone, C., Gweon, H., & Ruggeri, A. (2023). Child Development, 94(5), 1259–1280.

Dopamine regulates decision thresholds in human reinforcement learning in males. Chakroun, K., Wiehler, A., Wagner, B., Mathar, D., Ganzer, F., van Eimeren, T., … Peters, J. (2023). Nature Communications, 14, 5369.

Abnormal sense of agency in eating disorders. Colle, L., Hilviu, D., Boggio, M., Toso, A., Longo, P., Abbate-Daga, G., … Fossataro, C. (2023). Scientific Reports, 13, 14176.

Different time scales of common‐cause evidence shape multisensory integration, recalibration and motor adaptation. Debats, N. B., Heuer, H., & Kayser, C. (2023). European Journal of Neuroscience, 58(5), 3253–3269.

Inferential eye movement control while following dynamic gaze. Han, N. X., & Eckstein, M. P. (2023). eLife, 12, e83187.

Dissociable roles of human frontal eye fields and early visual cortex in presaccadic attention. Hanning, N. M., Fernández, A., & Carrasco, M. (2023). Nature Communications, 14, 5381.

Neural tuning instantiates prior expectations in the human visual system. Harrison, W. J., Bays, P. M., & Rideaux, R. (2023). Nature Communications, 14, 5320.

Acute exercise has specific effects on the formation process and pathway of visual perception in healthy young men. Komiyama, T., Takedomi, H., Aoyama, C., Goya, R., & Shimegi, S. (2023). European Journal of Neuroscience, 58(5), 3239–3252.

Locating causal hubs of memory consolidation in spontaneous brain network in male mice. Li, Z., Athwal, D., Lee, H.-L., Sah, P., Opazo, P., & Chuang, K.-H. (2023). Nature Communications, 14, 5399.

Development of multisensory processing in ferret parietal cortex. Medina, A. E., Foxworthy, W. A., Keum, D., & Meredith, M. A. (2023). European Journal of Neuroscience, 58(5), 3226–3238.

Optimal routing to cerebellum-like structures. Muscinelli, S. P., Wagner, M. J., & Litwin-Kumar, A. (2023). Nature Neuroscience, 26(9), 1630–1641.

In vivo ephaptic coupling allows memory network formation. Pinotsis, D. A., & Miller, E. K. (2023). Cerebral Cortex, 33(17), 9877–9895.

Sex-dependent noradrenergic modulation of premotor cortex during decision-making. Rodberg, E. M., den Hartog, C. R., Dauster, E. S., & Vazey, E. M. (2023). eLife, 12, e85590.

Propagation of activity through the cortical hierarchy and perception are determined by neural variability. Rowland, J. M., van der Plas, T. L., Loidolt, M., Lees, R. M., Keeling, J., Dehning, J., … Packer, A. M. (2023). Nature Neuroscience, 26(9), 1584–1594.

High-precision mapping reveals the structure of odor coding in the human brain. Sagar, V., Shanahan, L. K., Zelano, C. M., Gottfried, J. A., & Kahnt, T. (2023). Nature Neuroscience, 26(9), 1595–1602.

The locus of recognition memory signals in human cortex depends on the complexity of the memory representations. Sanders, D. M. W., & Cowell, R. A. (2023). Cerebral Cortex, 33(17), 9835–9849.

Velocity of conduction between columns and layers in barrel cortex reported by parvalbumin interneurons. Scheuer, K. S., Judge, J. M., Zhao, X., & Jackson, M. B. (2023). Cerebral Cortex, 33(17), 9917–9926.

Acetylcholine and noradrenaline enhance foraging optimality in humans. Sidorenko, N., Chung, H.-K., Grueschow, M., Quednow, B. B., Hayward-Könnecke, H., Jetter, A., & Tobler, P. N. (2023). Proceedings of the National Academy of Sciences, 120(36), e2305596120.

Rats adaptively seek information to accommodate a lack of information. Yuki, S., Sakurai, Y., & Yanagihara, D. (2023). Scientific Reports, 13, 14417.

Beta traveling waves in monkey frontal and parietal areas encode recent reward history. Zabeh, E., Foley, N. C., Jacobs, J., & Gottlieb, J. P. (2023). Nature Communications, 14, 5428.

#neuroscience#science#research#brain science#scientific publications#cognitive science#neurobiology#cognition#psychophysics#computational neuroscience#neural computation#neural networks#neurons

22 notes


Text

My absolute least favorite genre of negative response to neural net chat bots and the like is "OMG it's SENTIENT this is SCARY............WE HAVE TO DESTROY IT!!!!!!"

Like do you hear yourself? You're claiming that what's scary here is that it is allegedly SENTIENT (which it isn't)...so we have to KILL it? Your first response to a sensationalized, false report that we have created a sentient intelligent life form is "EEEK, KILL IT!!"? End a sentient, sapient life? That's your FIRST go-to? No questions? Not a moment's hesitation?

All right then, I'm sure this has absolutely no terrifying implications about your attitudes toward other things in life that are unfamiliar and scary to you...

#tech#ai tag#the only scary thing about neural nets is the profit motives behind their rushed and shoddy implementation#which dont get me wrong thats fucking terrifying#the fact that people are out here selling chatbots that will lie to and gaslight and sexually harass you is uh............bad#the fact that theyre trying to use bots to put people out of work with no contingency plans for anyone displaced is also absolute trash#but neural nets as a whole are genuinely one of the most fascinating breakthroughs in computer science#i am so happy im alive to see them#i just wish people didnt have to try to shove them into some of the most inappropriate cognitohazardous applications possible#because they HAVE to turn a PROFIT for our corporate overlords to be WORTH anything right???#fight the ceos not the bots

17 notes


Text

https://www.quantamagazine.org/how-the-brain-distinguishes-memories-from-perceptions-20221214

#neuroscience#cogsci#cognition#cognitive processes#memory processing#sensory processing#memories#memory#cognitive science#neural pathways#quanta magazine#quanta mag#STEM#//comment later#//i want to explain some analogies to that - hopefully my sentences won't turn into utter nonsense gibberish

49 notes


Text

The human brain is a complex organ responsible for various cognitive functions. Scientists from the University of Sydney and Fudan University have made a significant discovery regarding brain signals that traverse the outer layer of neural tissue and form spiral patterns. These spirals, observed during both resting and cognitive states, have been found to play a crucial role in organizing brain activity and cognitive processing.

The research study, published in Nature Human Behaviour, focuses on the identification and analysis of spiral-shaped brain signals and their implications for understanding brain dynamics and functions. The study utilized functional magnetic resonance imaging (fMRI) brain scans of 100 young adults to observe and analyze these brain signals. By adapting methods used in understanding complex wave patterns in turbulence, the researchers successfully identified and characterized the spiral patterns observed on the cortex.

Our study suggests that gaining insights into how the spirals are related to cognitive processing could significantly enhance our understanding of the dynamics and functions of the brain. —Associate Professor Pulin Gong


#geometriccognition#cognitivegeometry#geometry#geometrymatters#research#science#academia#neural#study

44 notes
